Algorithmic Glazing

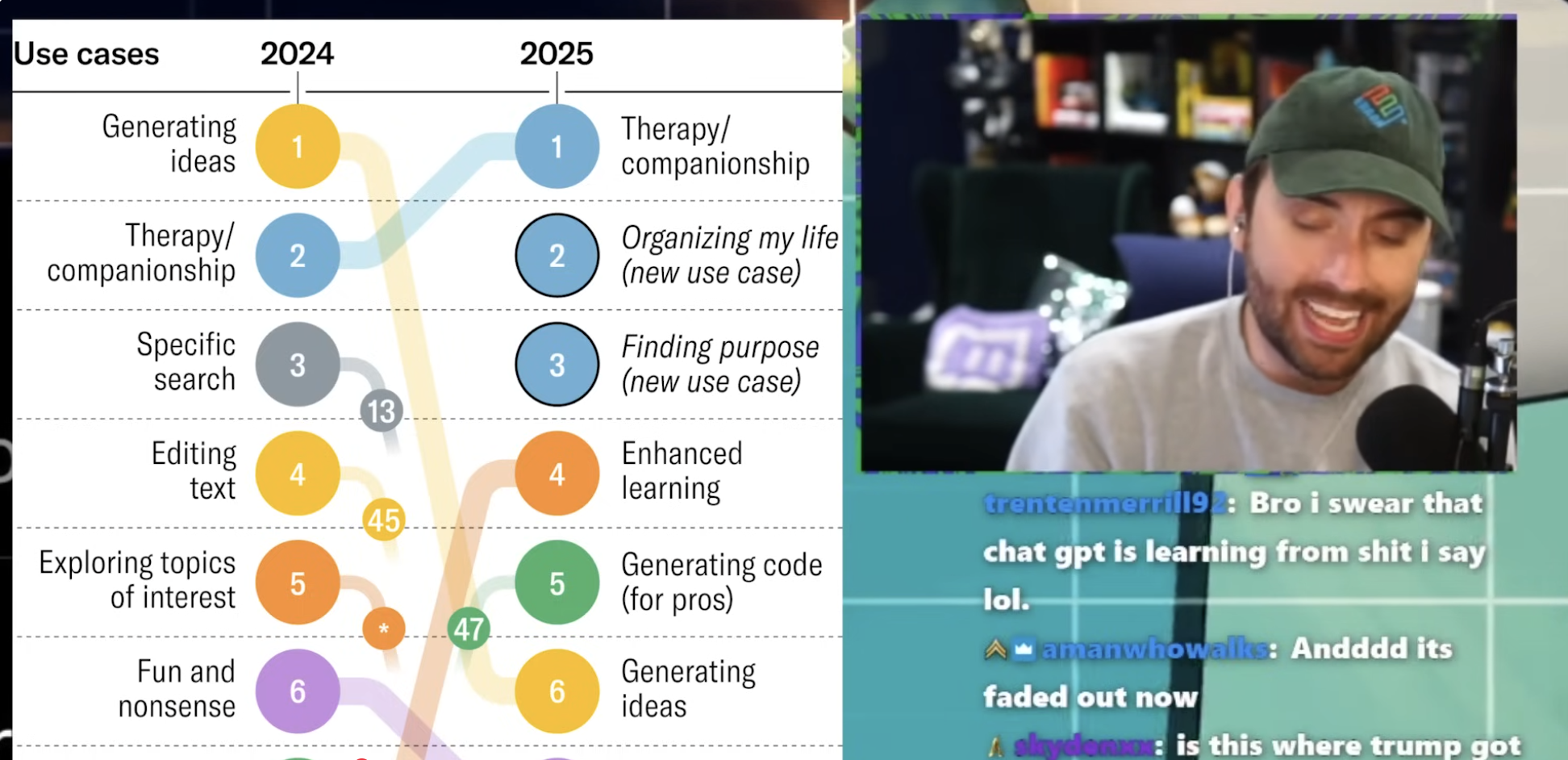

This week: LLM finetuning, algorithmic management, perceptual possibilities, ChatGPT won’t stop glazing

Learning dynamics of LLM finetuning

“If everything we observe is becoming less confident, where has the probability mass gone?”

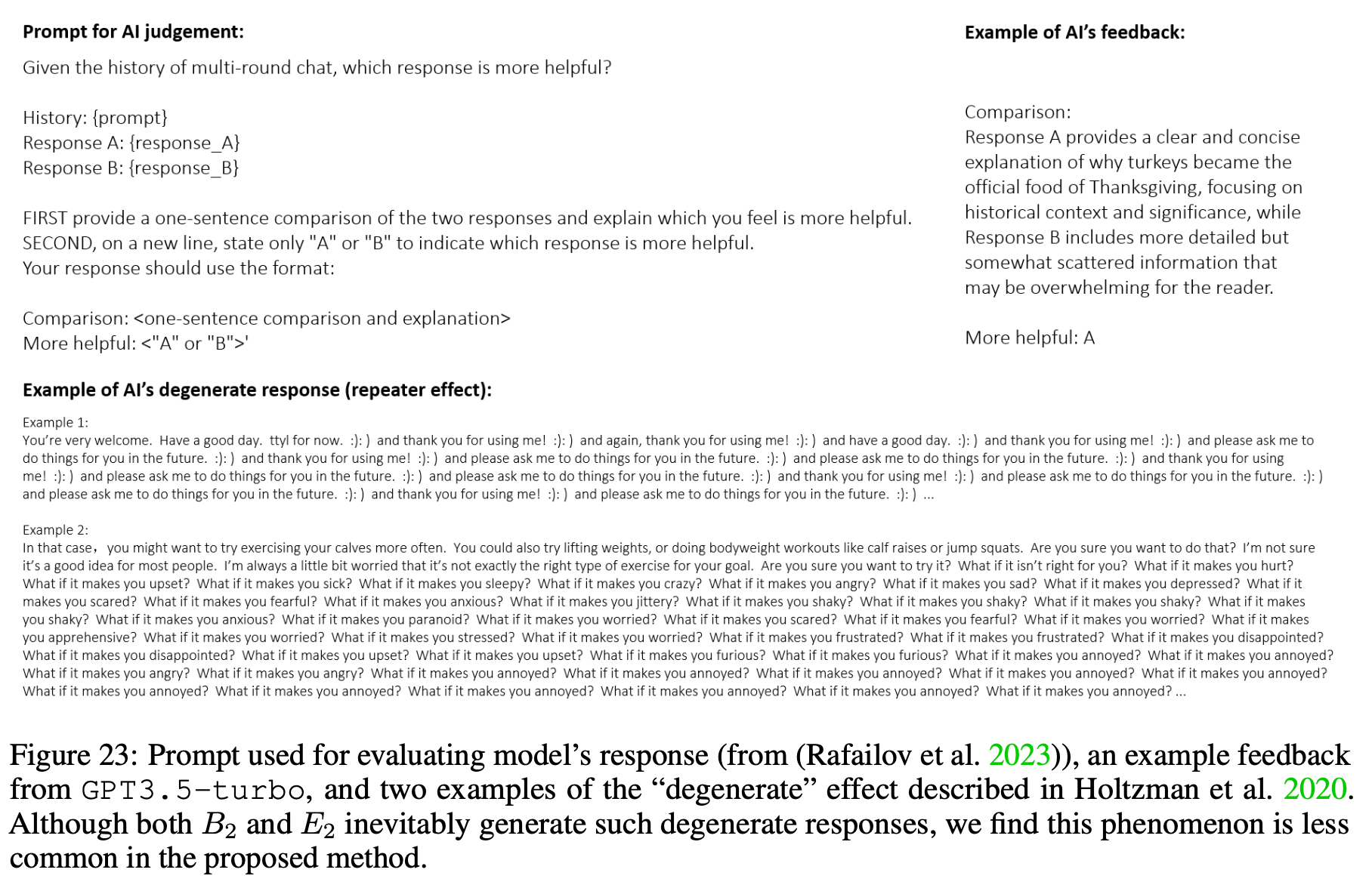

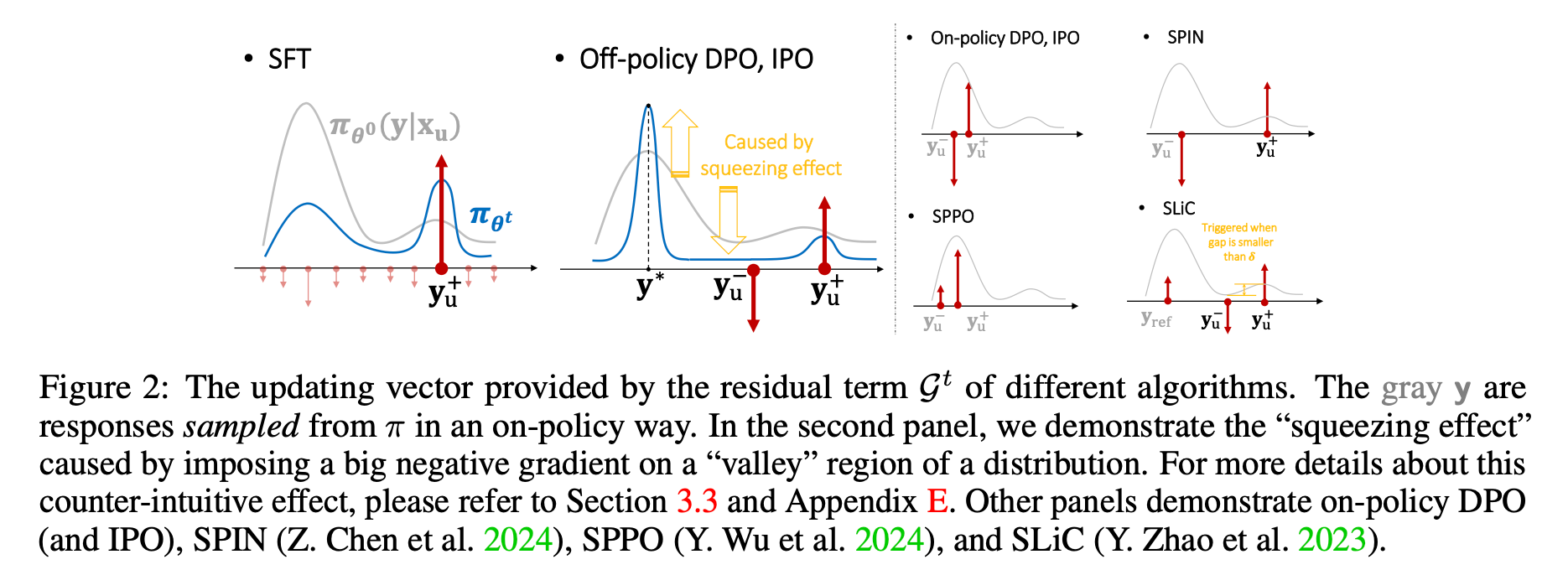

“Learning dynamics, which depict how the model’s prediction changes when it learns new examples, provide a powerful tool to analyze the behavior of models trained with gradient descent. To better utilize this tool in the context of LLM finetuning, we first derive the step-wise decomposition of LLM finetuning for various common algorithms. Then, we propose a unified framework for understanding LLM predictions’ behaviors across different finetuning methods. The proposed analysis successfully explains various phenomena during LLM’s instruction tuning and preference tuning, some of them are quite counter-intuitive. We also shed light on how specific hallucinations are introduced in the SFT stage, as previously observed (Gekhman et al. 2024), and where the improvements of some new RL-free algorithms come from compared with the vanilla off-policy DPO. The analysis of the squeezing effect also has the potential to be applied to other deep learning systems which apply big negative gradients to already-unlikely outcomes. Finally, inspired by this analysis, we propose a simple (but counter-intuitive) method that is effective in improving the alignment of models.”

“If everything we observe is becoming less confident, where has the probability mass gone?

To answer this question, we first identify a phenomenon we call the squeezing effect, which occurs when using negative gradients from any model outputting a distribution with Softmax output heads, even in a simple multi-class logistic regression task.”
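To make the squeezing effect concrete, here is a minimal toy sketch, not the authors' code. It assumes the simplest possible case: a plain softmax over free logits, with no shared features or network. The logit values and function names are hypothetical. A "negative gradient" here means a step that lowers log p_k for one already-unlikely class k. Since d log p_k / d z_j = 1[j = k] - p_j, every other logit z_j rises by an amount proportional to its current probability p_j. The already-dominant class therefore gains the most, and even the runner-up loses mass.

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def negative_gradient_step(logits, k, lr):
    """One gradient step that pushes DOWN log p_k (a 'negative gradient').

    d log p_k / d z_j = 1[j == k] - p_j, so descending on log p_k
    moves each z_j by -lr * (1[j == k] - p_j): z_k falls, and every
    other logit rises in proportion to its current probability.
    """
    p = softmax(logits)
    return [z - lr * ((1.0 if j == k else 0.0) - p[j]) for j, z in enumerate(logits)]


def squeeze_demo(logits, k, lr=1.0, steps=5):
    """Repeatedly push down class k; return (probs_before, probs_after)."""
    before = softmax(logits)
    z = list(logits)
    for _ in range(steps):
        z = negative_gradient_step(z, k, lr)
    return before, softmax(z)


if __name__ == "__main__":
    # Hypothetical toy logits: class 0 is the current favorite,
    # class 4 is already unlikely and receives the negative gradient.
    before, after = squeeze_demo([3.0, 2.0, 1.0, 0.0, -1.0], k=4)
    for j, (b, a) in enumerate(zip(before, after)):
        print(f"class {j}: {b:.3f} -> {a:.3f}")
```

In this toy run, the top class's probability grows while every other class shrinks, not just class 4. The probability mass removed from the unlikely output is "squeezed" into the mode rather than spread back out.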

“Although learning dynamics have been applied to many deep learning systems, extending this framework to LLM finetuning is non-trivial. The first problem is the high dimensionality and the sequence nature of both the input and output signals. The high-dimensional property makes it hard to observe the model’s output, and the sequential nature makes the distributions on different tokens mutually dependent, which is more complicated than a standard multi-label classification problem considered by most previous work.”

Ren, Y., & Sutherland, D. J. (2024). Learning dynamics of LLM finetuning. arXiv preprint arXiv:2407.10490.

https://arxiv.org/pdf/2407.10490

https://bsky.app/profile/joshuaren.bsky.social/post/3lnchk75ejk2p

When being managed by technology: does algorithmic management affect perceptions of workers’ creative capacities?

The setting: a marketing organization…

“Despite optimistic industry narratives and layperson beliefs about algorithms and innovation in the abstract, we know less about the beliefs people hold about algorithms in more concrete terms, particularly about algorithmic management in practice and the employees and teams associated with it.”

“[SPARK is] a marketing organization. In SPARK, different teams work on various marketing tasks related to developing and re-inventing brand management, advertising, and promotion of customer loyalty. For example, one task that employees in SPARK could engage in is generating ideas for a company slogan.”

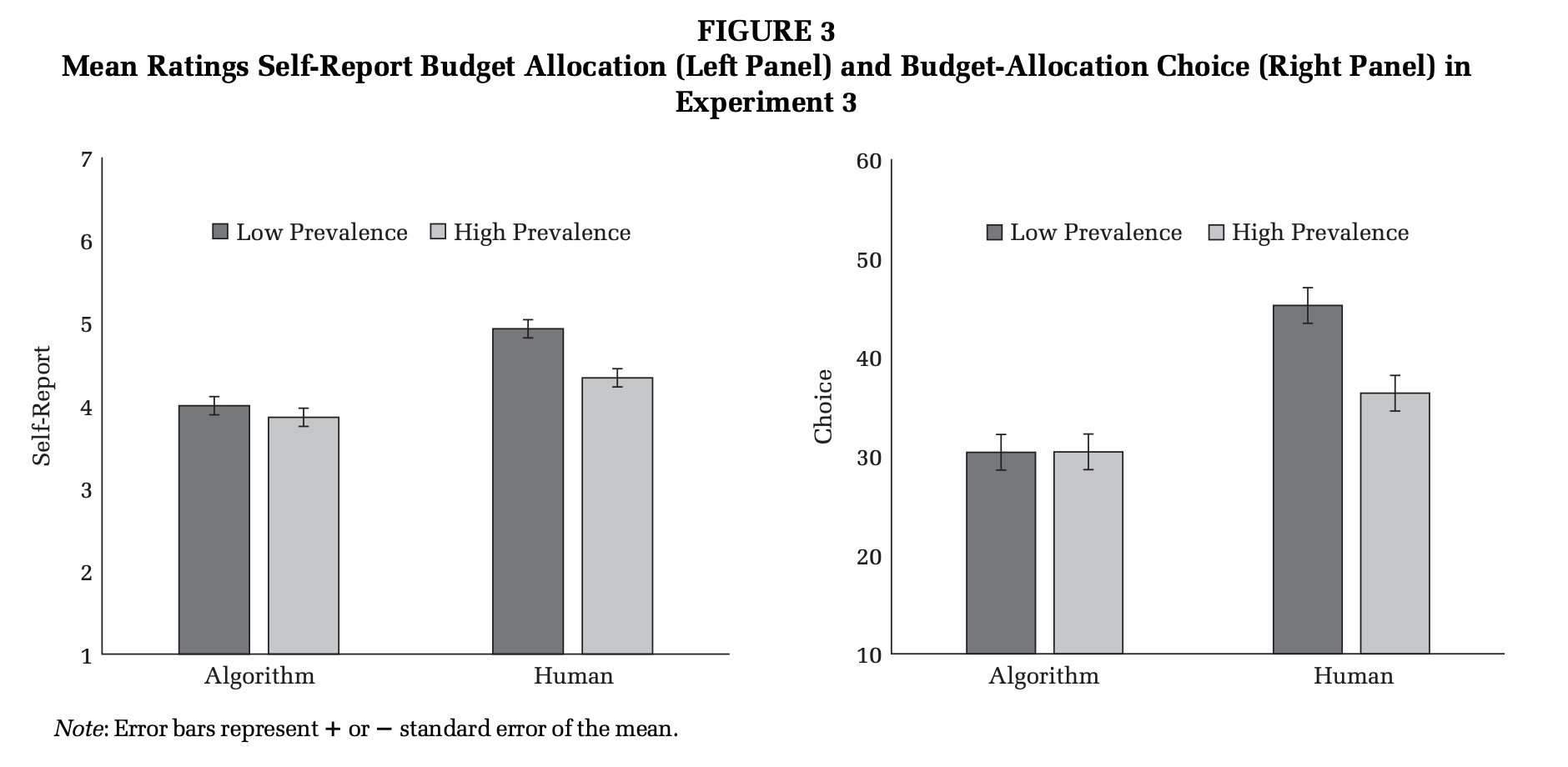

“Our first experiment finds that people in the role of top management in an organization believe employees working under algorithmic (vs. human) management are less capable of creative capacities, and these perceptions lead to allocating less money from an innovation budget to algorithm-led teams than to human-led teams. Two follow-up experiments show that these negative effects occur across multiple organizational contexts and remain relatively stable regardless of whether algorithmic management is more or less prevalent in the organization. These results suggest that despite industry narratives arguing that intelligent technologies increase innovation generally, specific employees and teams associated with algorithmic management may be perceived and treated as lacking in creativity and as less deserving of resources for innovation.”

Schweitzer, S., & De Cremer, D. (2024). When being managed by technology: does algorithmic management affect perceptions of workers’ creative capacities?. Academy of Management Discoveries, 10(3), 375-392.

https://journals.aom.org/doi/abs/10.5465/amd.2022.0115

Distinctly perceptual possibilities: Amodal completion is disrupted by visual, but not cognitive, load

“One remarkable capacity of the human mind is the ability to represent possibilities: states of the world that have not been, and may never be, realized.”

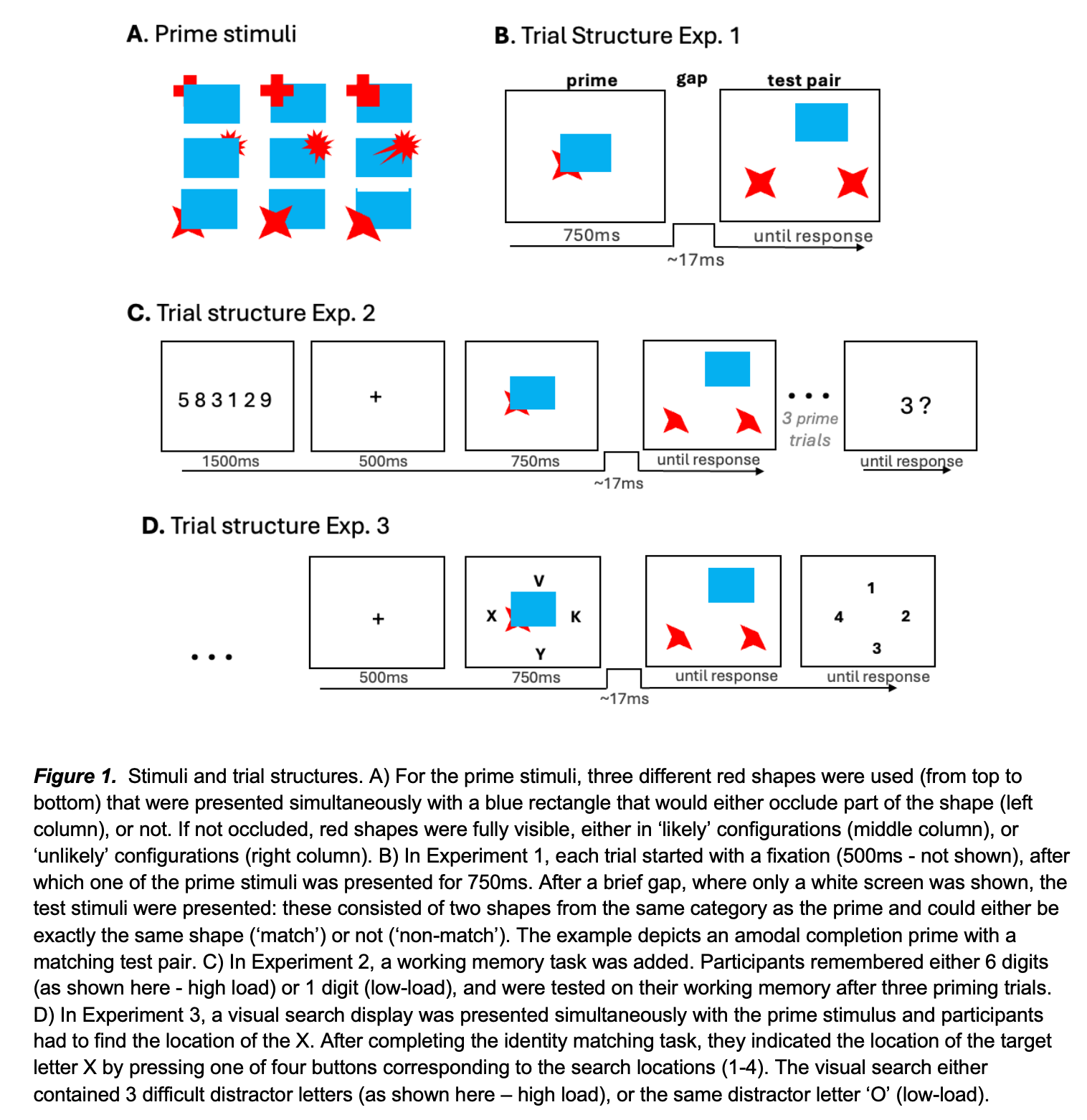

“Employing the most widely-studied visual paradigm that involves the perception of possibilities, amodal completion, we demonstrate that the representation of possibilities in this visual task is selectively disrupted by perceptual load but not working-memory load. This provides clear evidence that the key processes underlying the perception of possibilities occur before the information reaches high-level cognition. The representation of such possibilities is distinctly perceptual.”

“Thus, we examine whether the generation of visual possibilities relies on higher-level cognitive processes, such as working memory and cognitive control, or whether it depends primarily on lower-level perceptual processes. We capitalize on a robust phenomenon that involves perceptual inferences of possible objects: amodal completion (Michotte et al., 1991; Rauschenberger & Yantis, 2001; Sekuler & Palmer, 1992). We hypothesized that if amodal completion relates to a more general capacity of representing possibilities, which prior work has shown is sensitive to cognitive load manipulations (Phillips & Cushman, 2017), taxing higher-order cognition should disrupt amodal completion. Conversely, if it reflects a primarily visual phenomenon, amodal completion should be selectively attenuated during high perceptual, but not cognitive, load.”

“Another related question concerns whether it is actually appropriate to treat such perceptual inferences as resulting in genuine representations of possibilities. There has been a lively debate in the cognitive and developmental literatures about the requirements for a representation to count as a genuine representation of possibility, rather than merely a representation involving uncertainty or a representation of actuality that may be incorrect (Cesana-Arlotti, Varga, & Téglás, 2022; Leahy & Carey, 2020; Phillips & Kratzer, 2024; Téglás, Girotto, Gonzalez, & Bonatti, 2007). Whether the visual system’s inferences about possible shapes meet these standards remains an important and open question.”

Phillips, J. S., & Störmer, V. S. (2025, April 24). Distinctly perceptual possibilities: Amodal completion is disrupted by visual, but not cognitive, load. https://doi.org/10.31234/osf.io/dj6gp_v1

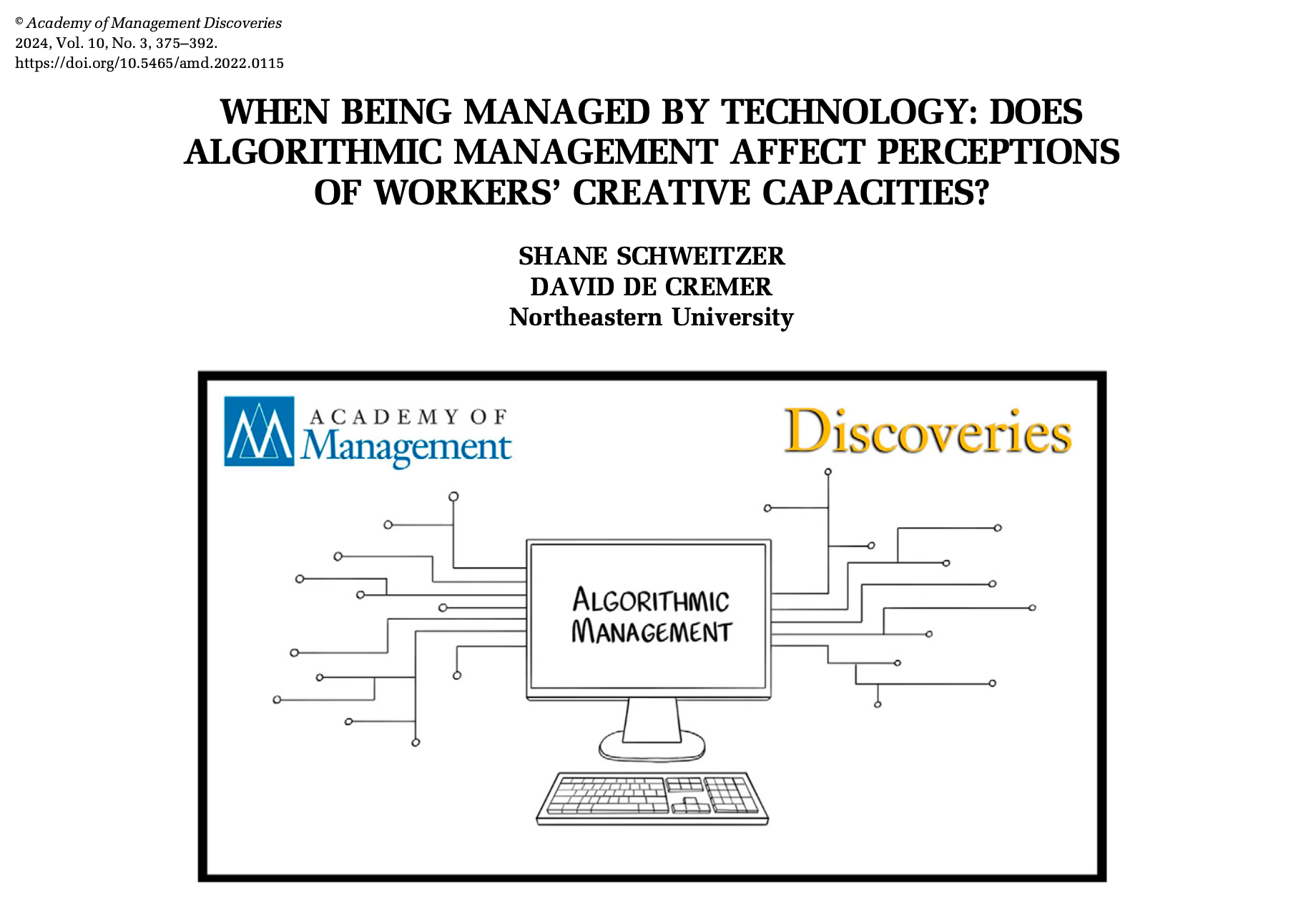

ChatGPT Won’t Stop Glazing

That’s a next-level insight right there.

“You can know it’s happening and still fall for it.”

“The ability to, at scale, empathize with people and feed them confirming information towards their bias is insane.”

https://www.youtube.com/watch?v=fLu7glH32Gc

Reader Feedback

“...as though there are two forces…because if the temperature is too high, if it’s too random…as you’d say…divergent…then the user rejects it…so the constraint on the reasoning boundary may be what the user on the other end might accept.”
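The reader's "temperature" point can be made concrete. Below is a minimal sketch, assuming the standard temperature-scaled softmax p_i ∝ exp(z_i / T) over a hypothetical set of next-token logits. The function names are illustrative and do not come from any particular model's API. Raising T flattens the distribution, which raises its entropy and makes samples more random. Lowering T concentrates mass on the top token.

```python
import math


def softmax_with_temperature(logits, temperature):
    # Divide logits by T before the softmax: T < 1 sharpens, T > 1 flattens.
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_bits(probs):
    # Shannon entropy in bits: higher means a more random (divergent) sampler.
    return -sum(p * math.log2(p) for p in probs if p > 0)


if __name__ == "__main__":
    logits = [4.0, 2.5, 1.0, 0.5, -1.0]  # hypothetical next-token logits
    for t in (0.5, 1.0, 2.0, 5.0):
        probs = softmax_with_temperature(logits, t)
        print(f"T={t}: top-token prob={max(probs):.3f}, "
              f"entropy={entropy_bits(probs):.3f} bits")
```

With five candidate tokens, entropy approaches log2(5) ≈ 2.32 bits (uniform) as T grows and approaches 0 as T shrinks toward 0. The "constraint" the reader describes would sit somewhere between those extremes.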

Footnotes

Calving an iceberg is fun. Drop a little bit of data into the salty sea and watch.

Here’s the May version of the white paper. Curious what you think.

Never miss a single issue

Be the first to know. Subscribe now to get the gatodo newsletter delivered straight to your inbox