Denoise this noisy target

This week: NoProp, Sudoku-Bench, Virtual reality arena, coding is not software engineering

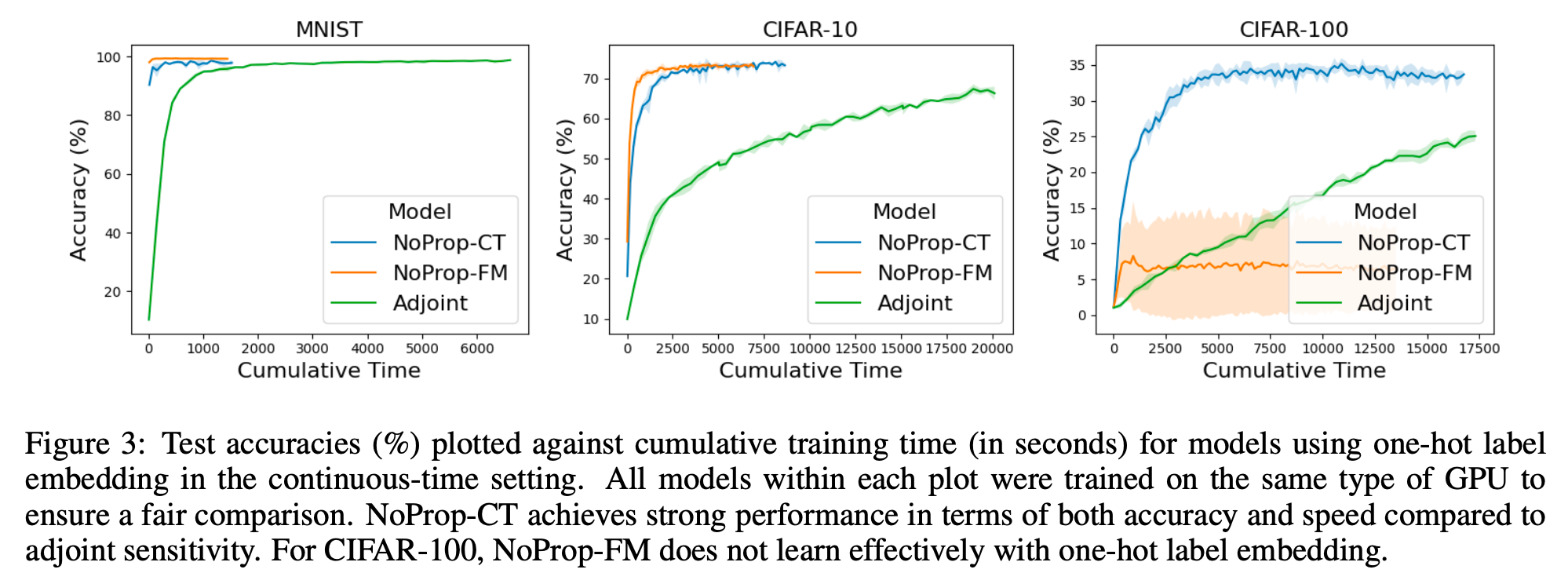

NoProp: Training Neural Networks without Back-propagation or Forward-propagation

Eternal 1986

“Instead, NoProp takes inspiration from diffusion and flow matching methods, where each layer independently learns to denoise a noisy target. We believe this work takes a first step towards introducing a new family of gradient-free learning methods, that does not learn hierarchical representations – at least not in the usual sense. NoProp needs to fix the representation at each layer beforehand to a noised version of the target, learning a local denoising process that can then be exploited at inference.”

“Using the denoising score matching approach that underlies diffusion models, we have proposed NoProp, a forward and back-propagation-free approach for training neural networks. The method enables each layer of the neural network to be trained independently to predict the target label given a noisy label and the training input, while at inference time, each layer takes the noisy label produced by the previous layer and denoises it by taking a step towards the label it predicts. We show experimentally that NoProp is significantly more performant than previous back-propagation-free methods while at the same time being simpler, more robust, and more computationally efficient. We believe that this perspective of training neural networks via denoising score matching opens up new possibilities for training deep learning models without back-propagation, and we hope that our work will inspire further research in this direction.”

Li, Q., Teh, Y. W., & Pascanu, R. (2025). NoProp: Training Neural Networks without Back-propagation or Forward-propagation. arXiv preprint arXiv:2503.24322.

https://arxiv.org/abs/2503.24322v1

See also: Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533–536.
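
To make the quoted idea concrete, here is a toy sketch of "each layer independently learns to denoise a noisy target" and of the inference loop where each layer takes a step towards the label it predicts. Everything specific in it is my assumption, not the paper's: the linear layers, the two-cluster data, the `SIGNAL` noise schedule, the `STEP` size, and the plain squared-error loss are all made up for illustration, and the update is not the paper's actual rule.

```python
import random

N_CLASSES = 2
# Hypothetical schedule: how much of the clean label each layer sees in training.
SIGNAL = [0.0, 0.5, 0.8]
STEP = 0.5  # hypothetical inference step size towards the predicted label


def make_sample(rng):
    """Toy data: class 0 clusters near (-1, -1), class 1 near (1, 1)."""
    k = rng.randrange(N_CLASSES)
    center = 1.0 if k == 1 else -1.0
    x = [center + rng.gauss(0, 0.3), center + rng.gauss(0, 0.3)]
    y = [1.0 if i == k else 0.0 for i in range(N_CLASSES)]
    return x, y, k


class Layer:
    """Linear denoiser: predicts the clean label from (input, noisy label)."""

    def __init__(self, in_dim, rng):
        n_feats = in_dim + N_CLASSES + 1  # input, noisy label, bias
        self.w = [[rng.gauss(0, 0.01) for _ in range(n_feats)]
                  for _ in range(N_CLASSES)]

    def predict(self, x, z):
        feats = x + z + [1.0]
        return [sum(wj * f for wj, f in zip(row, feats)) for row in self.w]

    def local_step(self, x, z, y, lr=0.05):
        """One SGD step on this layer's own squared error.

        No gradient or activation is passed to or from any other layer.
        """
        feats = x + z + [1.0]
        pred = self.predict(x, z)
        for row, p, t in zip(self.w, pred, y):
            for j, f in enumerate(feats):
                row[j] -= lr * (p - t) * f


def train_layers(rng, steps=2000):
    """Train every layer separately on a noised copy of the label."""
    layers = []
    for a in SIGNAL:
        layer = Layer(2, rng)
        noise_scale = (1.0 - a * a) ** 0.5
        for _ in range(steps):
            x, y, _k = make_sample(rng)
            z = [a * yi + noise_scale * rng.gauss(0, 1) for yi in y]
            layer.local_step(x, z, y)
        layers.append(layer)
    return layers


def classify(layers, x, rng):
    """Start from pure noise; each layer nudges z towards its predicted label."""
    z = [rng.gauss(0, 1) for _ in range(N_CLASSES)]
    for layer in layers:
        pred = layer.predict(x, z)
        z = [zi + STEP * (p - zi) for zi, p in zip(z, pred)]
    return max(range(N_CLASSES), key=lambda i: z[i])
```

To try it, build the layers with `train_layers(random.Random(0))` and call `classify(layers, x, rng)` on fresh points from `make_sample`. The thing to notice is structural: the training loop for one layer never touches another layer, and only inference chains them together.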

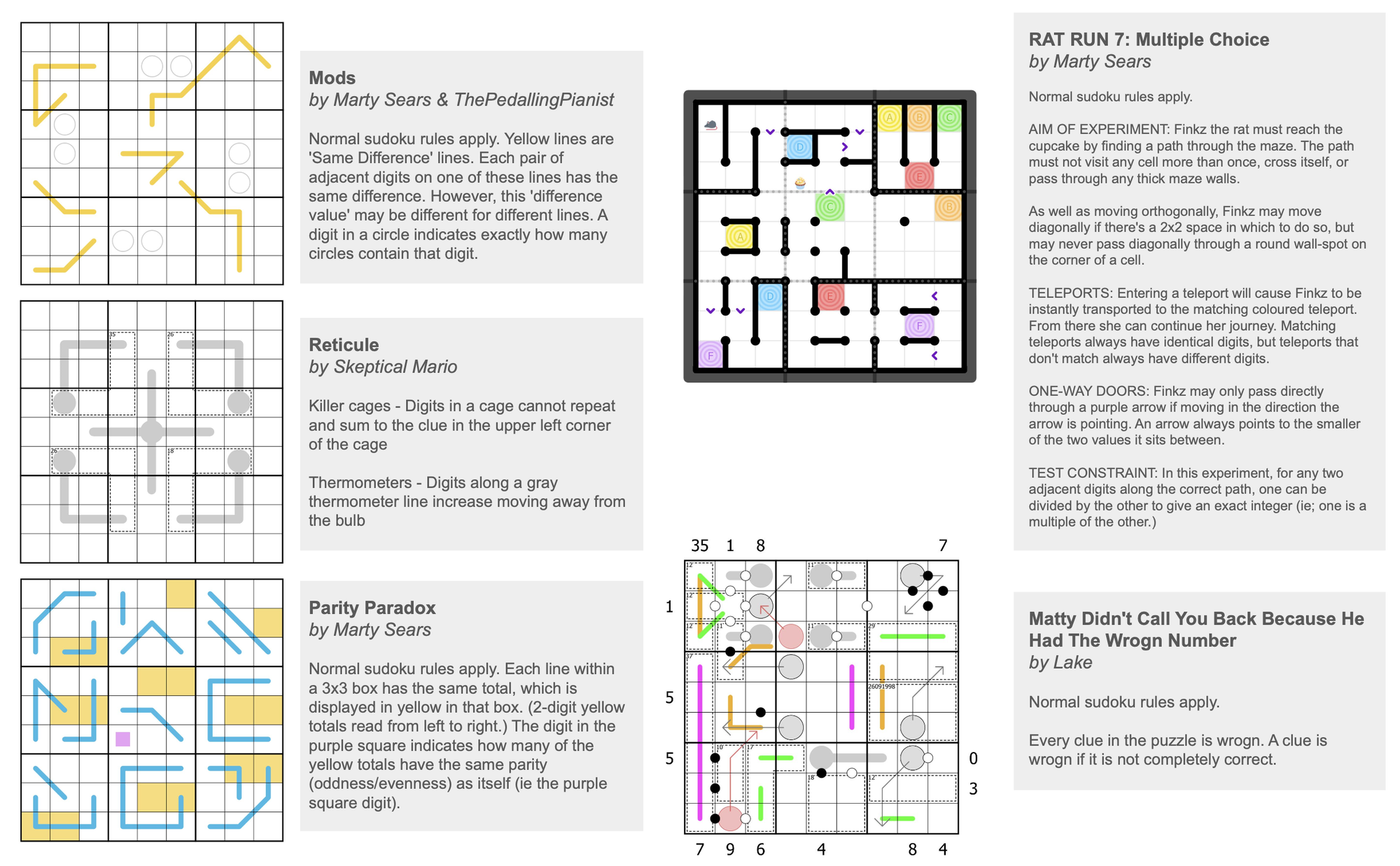

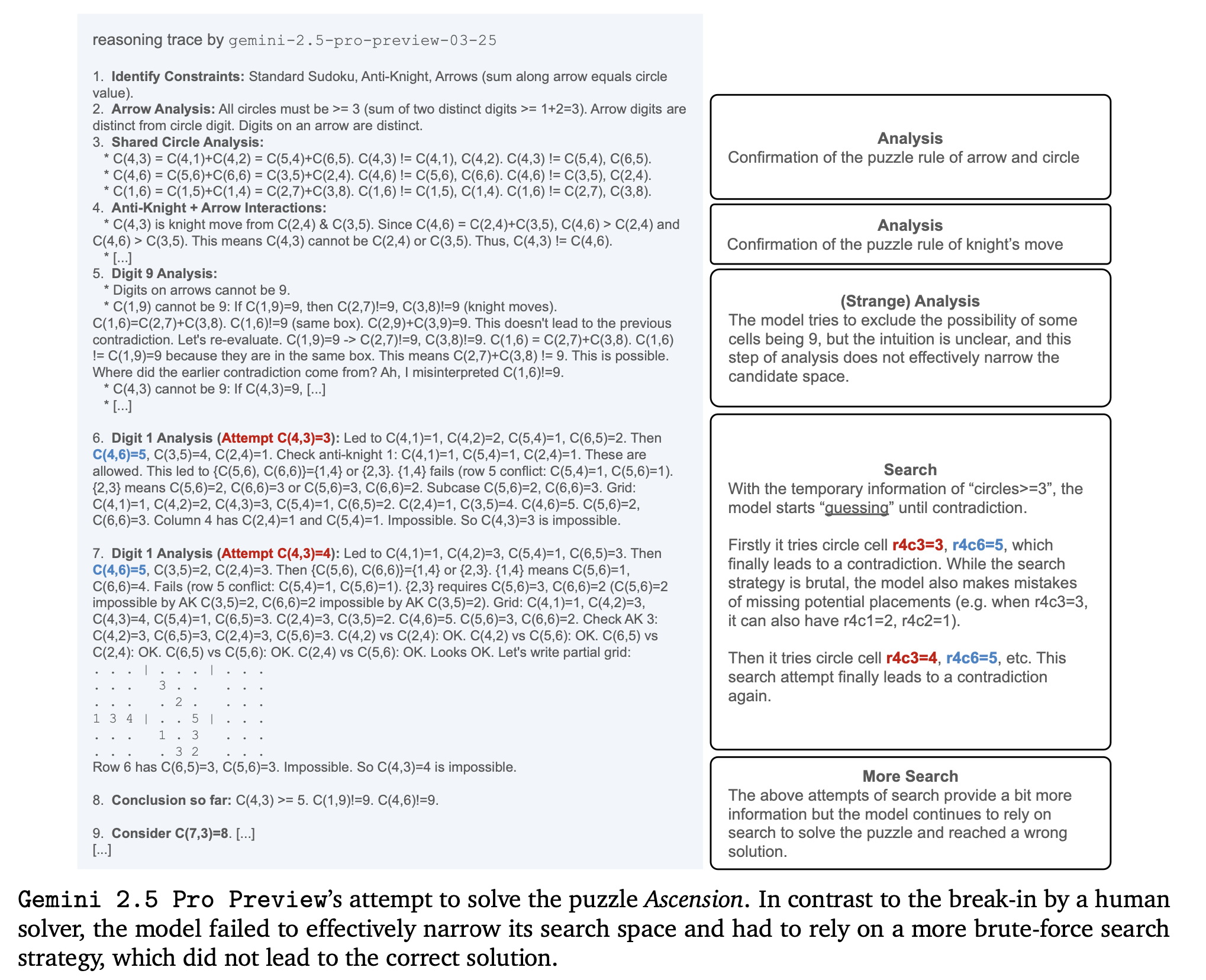

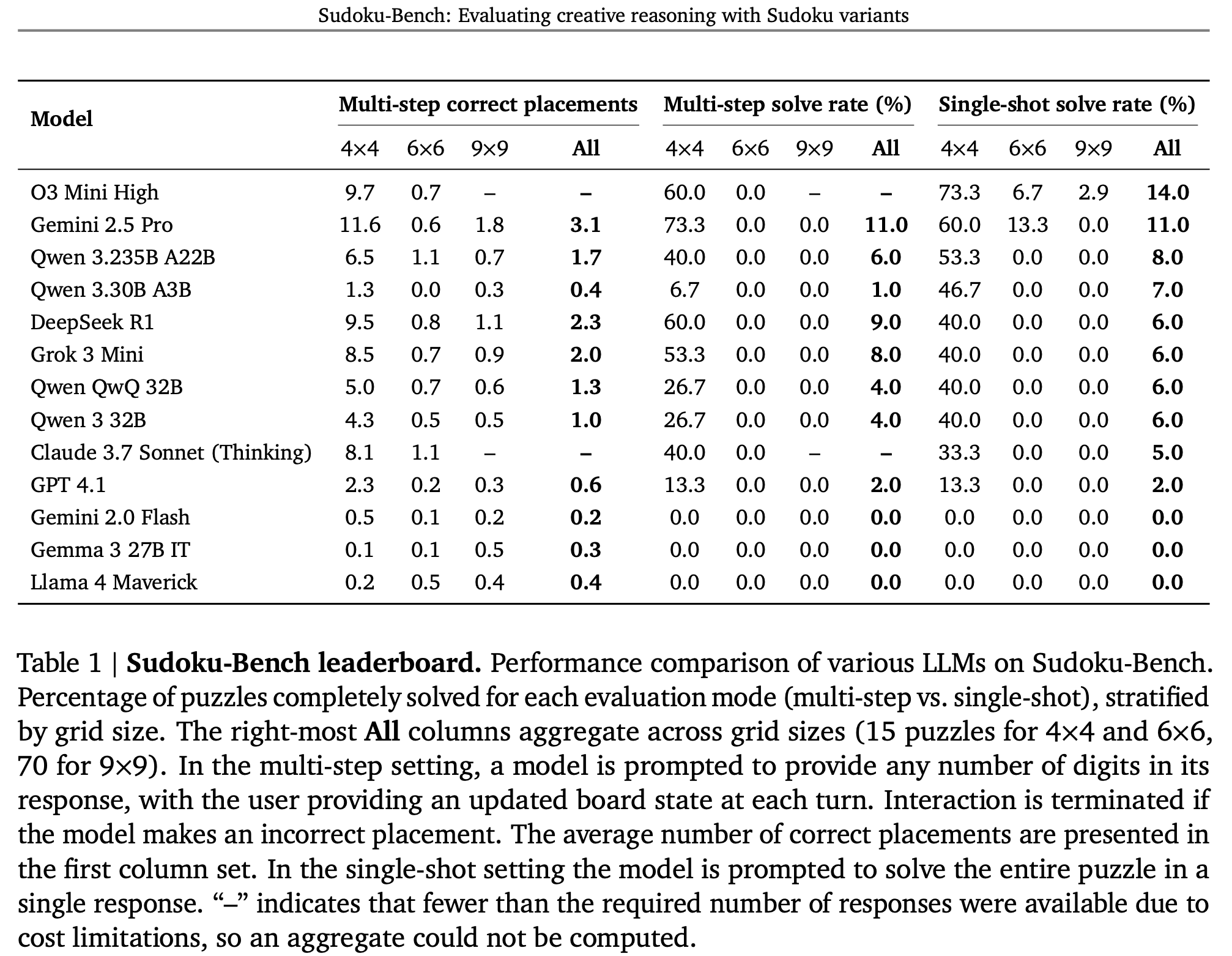

Sudoku-Bench: Evaluating creative reasoning with Sudoku variants

Those solve rates on a 9x9…

“Sudoku variants form an unusually effective domain for reasoning research: each puzzle introduces unique or subtly interacting constraints, making memorization infeasible and requiring solvers to identify novel logical breakthroughs (“break-ins”).”

“Beyond benchmarking, Sudoku variants offer a fertile laboratory for reasoning research. An extensive, ever-growing supply of human-generated puzzles allows scalable difficulty progression, from simpler 4 × 4 puzzles suitable for small models to highly intricate 9 × 9 puzzles, the hardest of which can stump all but the best expert human solvers.”

“We introduced Sudoku-Bench, a unified benchmark built around modern Sudoku variants that systematically stress long-horizon deduction, rule-interpretation, and strategic planning. In addition, the benchmark is uniquely suited for evaluating creative reasoning via the rich and varied collection of break-ins featured in most puzzles. The benchmark includes a curated puzzle corpora with textual representations, providing a controlled substrate for measuring how well language models cope with novel, tightly coupled constraints. Baseline experiments show that frontier LLMs solve fewer than 15% of instances without external tools, and performance falls sharply on 9 × 9 variants—evidence that substantial headroom remains for improvements.”

Seely, J., Imajuku, Y., Zhao, T., Cetin, E., & Jones, L. (2025). Sudoku-Bench: Evaluating creative reasoning with Sudoku variants. arXiv preprint arXiv:2505.16135.

https://arxiv.org/abs/2505.16135

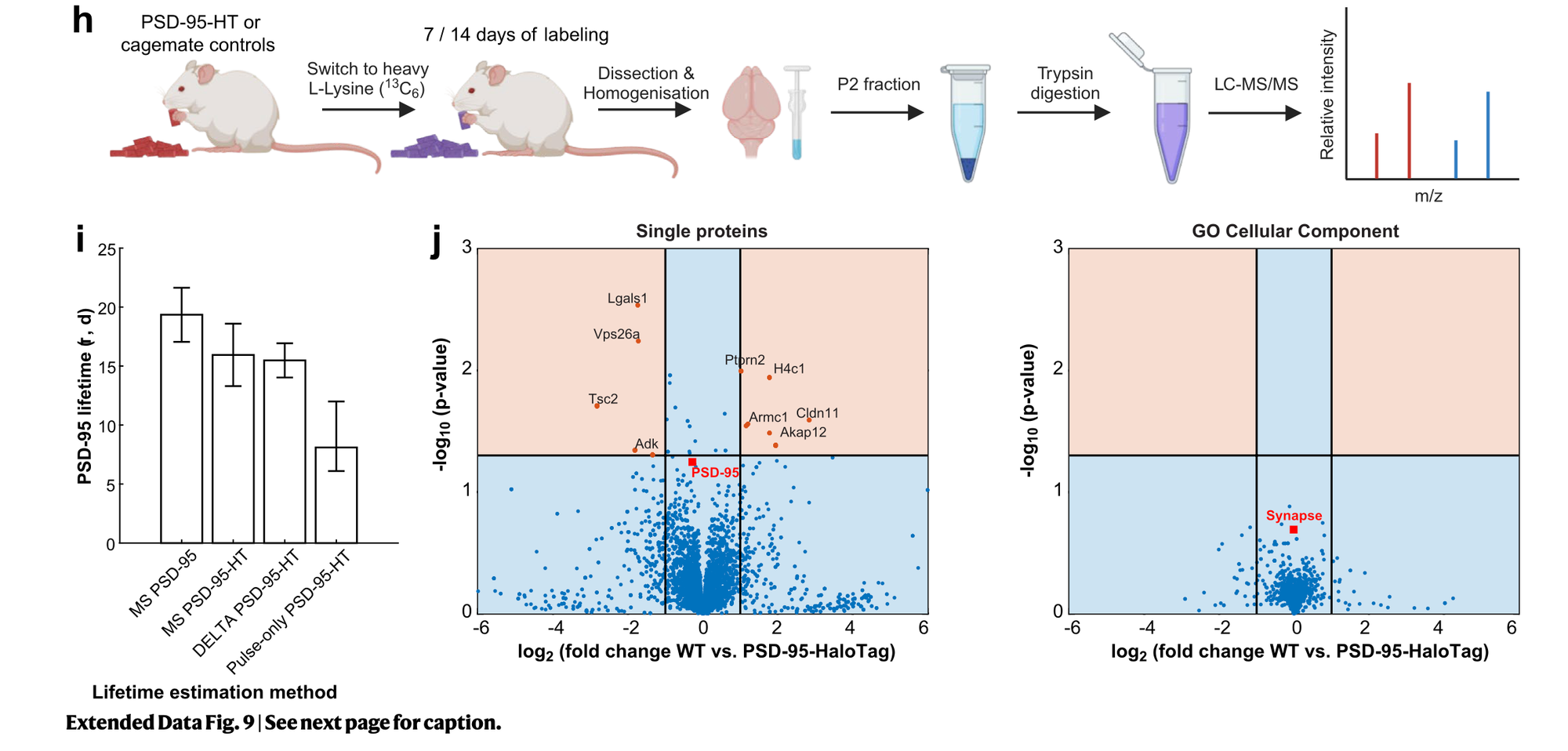

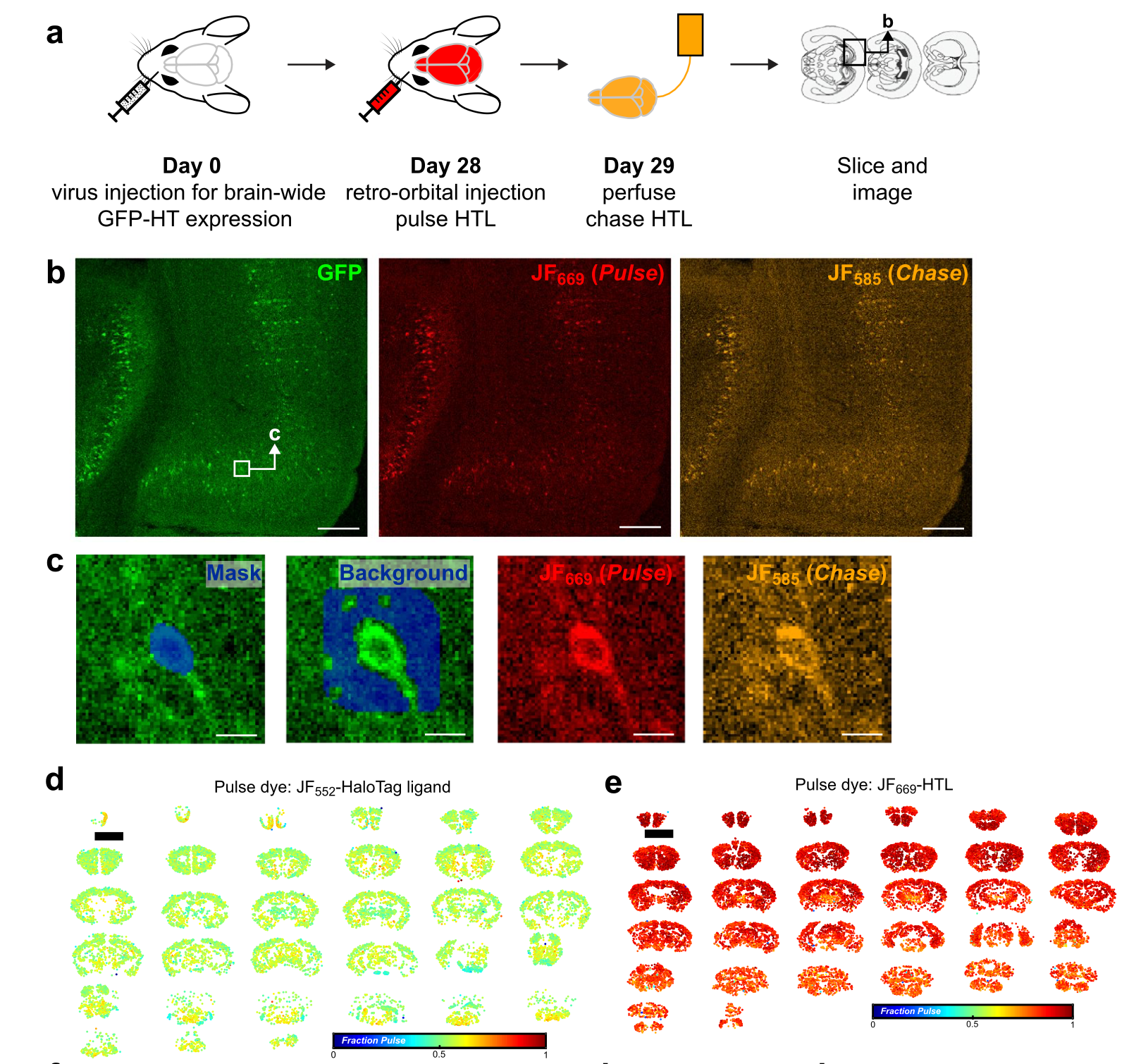

DELTA: a method for brain-wide measurement of synaptic protein turnover reveals localized plasticity during learning

I’ve changed my mind…? Also…what?

“Synaptic plasticity alters neuronal connections in response to experience, which is thought to underlie learning and memory. However, the loci of learning-related synaptic plasticity, and the degree to which plasticity is localized or distributed, remain largely unknown.”

“Virtual reality arena. Head-fixed mice ran atop a foam ball in a virtual reality environment with screens on each side and in front of their heads (Fig. 3a). Mice were first acclimatized to the ball without visual stimuli (two daily sessions, 45 min), while water was delivered at random intervals. Mice were then trained to lick when one of two stimuli appeared in random order in the virtual reality corridor every 30–70 cm of running selected from a uniform distribution. Reward probability was 50%. During the first two to three sessions, rewards were delivered automatically when the mouse ran through both cues. In later sessions, water rewards were delivered only in response to answer licks at the cues. After each behavioral session, water was supplemented to a total of 0.5–1 ml.”

Mohar, B., Michel, G., Wang, Y. Z., Hernandez, V., Grimm, J. B., Park, J. Y., ... & Spruston, N. (2025). DELTA: a method for brain-wide measurement of synaptic protein turnover reveals localized plasticity during learning. Nature Neuroscience, 1-10.

https://www.nature.com/articles/s41593-025-01923-4

Coding is not software engineering

There has got to be a better way…

“In a video announcing the SWE models, comments made by Windsurf’s Head of Research, Nicholas Moy, underscore Windsurf’s newest efforts to differentiate its approach. “Today’s frontier models are optimized for coding, and they’ve made massive strides over the last couple of years,” says Moy. “But they’re not enough for us … Coding is not software engineering.””

https://techcrunch.com/2025/05/15/vibe-coding-startup-windsurf-launches-in-house-ai-models/

Reader Feedback

“I don’t know how to feel about AI persuasion.”

Footnotes

Pixar’d two stories for you:

Here’s the first:

- Once upon a time there was a builder.

- Every day they would write code.

- Until one day they launched.

- And because of that they learned that nobody needed their code.

- And because of that they got angry and frustrated.

- Until finally they gave up.

- The end.

As opposed to:

- Once upon a time there was a builder.

- Every day they would try to understand what the problem really was.

- And because of that they learned they could assemble a team that had a good shot at solving that problem.

- And because of that they solved the problem.

- Until finally they realized their Schumpeterian rents and were judged harshly by their Canadian peers for exiting too early, but they still lived happily ever after in spite of all the haters.

- The end.
