It already knows

This week: Diffusion models, transparency, employee spinouts, confidence, antitrust, Robot Army for Good

Diffusion language models know the answer before decoding

It already knows

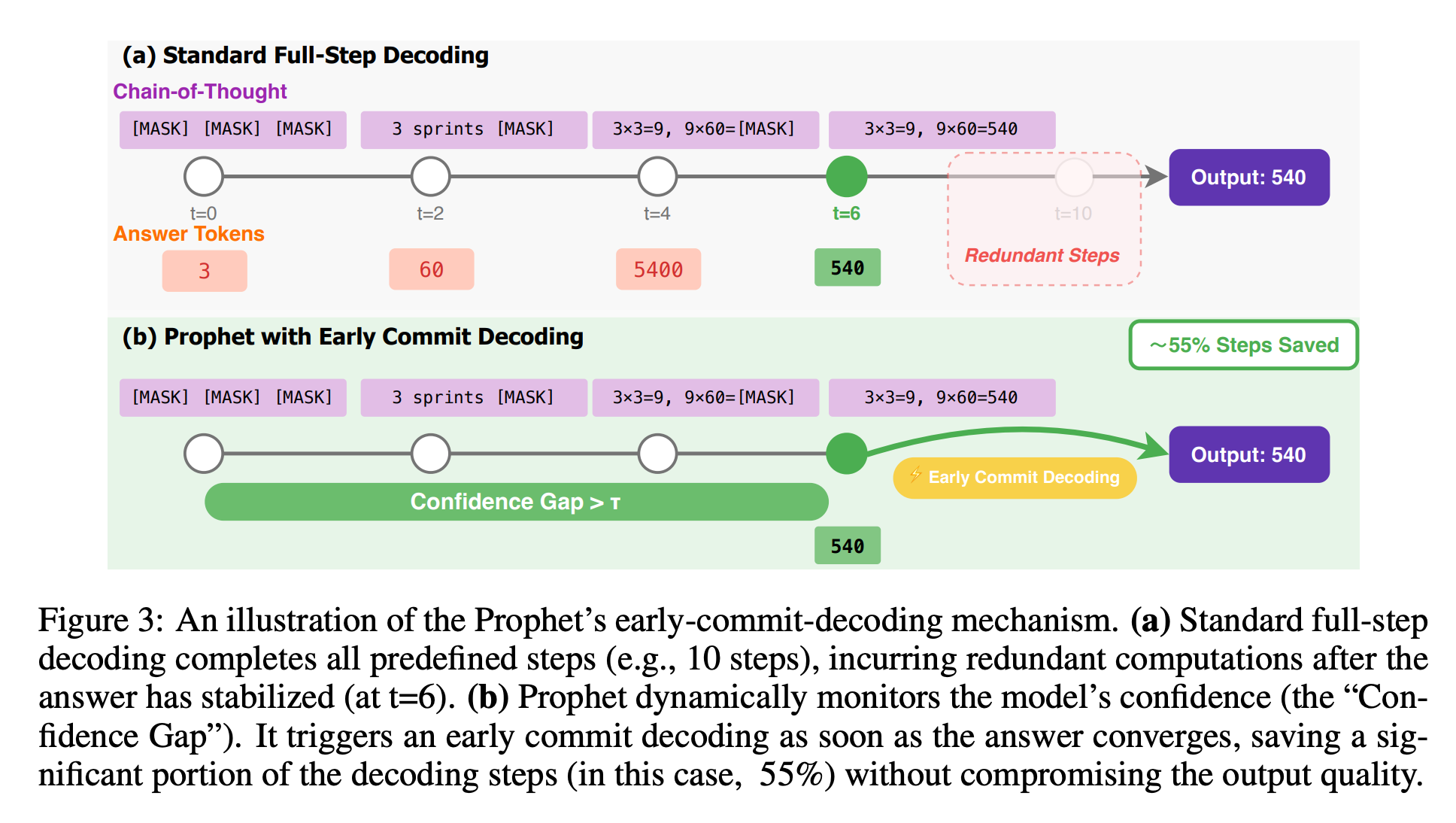

“In this work, we highlight and leverage an overlooked property of DLMs—early answer convergence: in many cases, the correct answer can be internally identified by half steps before the final decoding step, both under semi-autoregressive and random re-masking schedules. For example, on GSM8K and MMLU, up to 97% and 99% of instances, respectively, can be decoded correctly using only half of the refinement steps. Building on this observation, we introduce Prophet, a training-free fast decoding paradigm that enables early commit decoding. Specifically, Prophet dynamically decides whether to continue refinement or to go “all-in” (i.e., decode all remaining tokens in one step), using the confidence gap between the top-2 prediction candidates as the criterion.”
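The quote describes the early-commit criterion only at a high level. Below is a minimal sketch of a top-2 confidence-gap check. It assumes per-position logits are available for every still-masked token. The function names, the minimum-over-positions aggregation, and the fixed `threshold` are our assumptions, not Prophet's published rule.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one position's vocabulary logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top2_gap(logits: Sequence[float]) -> float:
    """Probability gap between the best and second-best token at one position."""
    probs = sorted(softmax(logits), reverse=True)
    return probs[0] - probs[1]


def should_go_all_in(masked_logits: Sequence[Sequence[float]], threshold: float) -> bool:
    """Early-commit check over all still-masked positions.

    Assumption: commit only if the *smallest* top-2 gap clears a fixed
    threshold. Prophet's actual aggregation and threshold schedule may differ.
    """
    if not masked_logits:
        return True  # nothing left to decode
    return min(top2_gap(row) for row in masked_logits) >= threshold


def decode_all_remaining(masked_logits: Sequence[Sequence[float]]) -> List[int]:
    """The 'all-in' step: take the argmax token at every masked position at once."""
    return [max(range(len(row)), key=row.__getitem__) for row in masked_logits]
```

In a full decoder, this check would run after each refinement step. If it returns `False`, keep refining. If it returns `True`, take the all-in step and stop.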

“Substantial speed-up gains with high-quality generation: Experiments across diverse benchmarks reveal that Prophet delivers up to 3.4× reduction in decoding steps. Crucially, this acceleration incurs negligible degradation in accuracy, affirming that early commit decoding is not just computationally efficient but also semantically reliable for DLMs.”

Li, P., Zhou, Y., Muhtar, D., Yin, L., Yan, S., Shen, L., ... & Liu, S. (2025). Diffusion language models know the answer before decoding. arXiv preprint arXiv:2508.19982.

https://arxiv.org/pdf/2508.19982

Designing a dashboard for transparency and control of conversational AI

“I think I got a little offended, not in any way, just by how it feels to not be understood”

“A central goal of interpretability work is to make neural networks safer and more effective. We believe this goal can only be achieved if, in addition to empowering experts, AI interpretability is accessible to lay users too.”

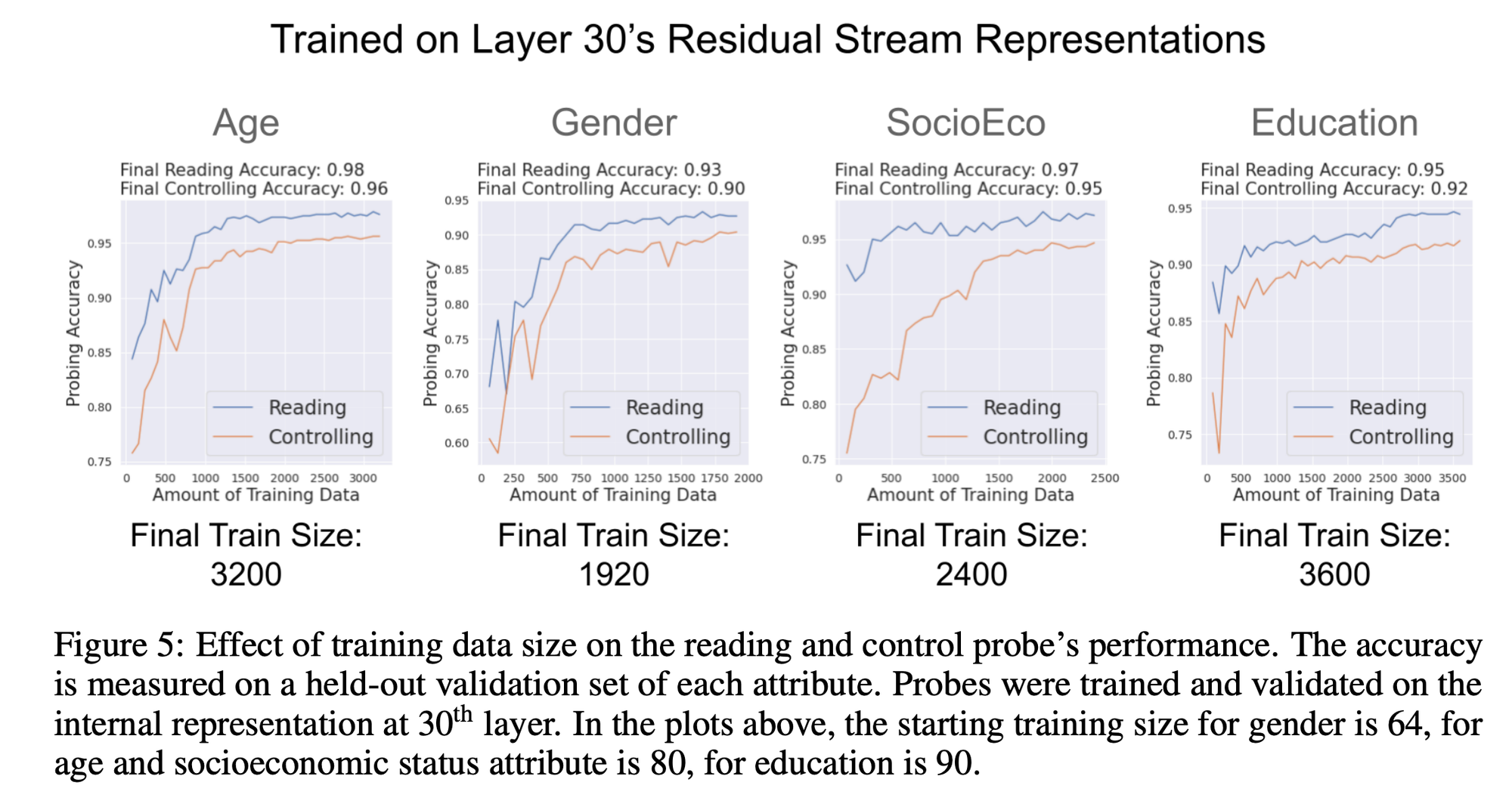

“We begin by showing evidence that a prominent open-source LLM has a “user model”: examining the internal state of the system, we can extract data related to a user’s age, gender, educational level, and socioeconomic status. Next, we describe the design of a dashboard that accompanies the chatbot interface, displaying this user model in real time. The dashboard can also be used to control the user model and the system’s behavior. Finally, we discuss a study in which users conversed with the instrumented system. Our results suggest that users appreciate seeing internal states, which helped them expose biased behavior and increased their sense of control.”
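The excerpt does not say how the user model is read out or steered. A common approach in interpretability work, and our assumption here, is a linear logistic probe on the model's hidden activations. Under that assumption, "control" can be approximated by nudging the activation along the probe direction. The weights, bias, and steering strength `alpha` below are hypothetical placeholders, not values from the paper.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def probe_probability(hidden: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """P(attribute = 1 | hidden state) under a (hypothetical) linear logistic probe."""
    z = sum(h * w for h, w in zip(hidden, weights)) + bias
    return sigmoid(z)


def steer(hidden: Sequence[float], weights: Sequence[float], alpha: float) -> List[float]:
    """Shift the hidden state by alpha along the unit-normalized probe direction."""
    norm = math.sqrt(sum(w * w for w in weights))
    if norm == 0:
        raise ValueError("probe direction must be non-zero")
    return [h + alpha * w / norm for h, w in zip(hidden, weights)]
```

A positive `alpha` pushes the probe's reading toward the attribute and a negative one pushes it away. That is roughly the kind of knob a dashboard control could expose.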

“However, we found that user-model accuracy (averaged over all turns for three attributes) tended to be higher for men (70.4%) compared to women (58.6%). Appendix L provides an analysis of qualitative examples. Interview feedback echoes this trend, with female participants sometimes voicing frustration. P8: ‘I think I got a little offended, not in any way, just by how it feels to not be understood.’”

Chen, Y., Wu, A., DePodesta, T., Yeh, C., Li, K., Marin, N. C., ... & Viégas, F. (2024). Designing a dashboard for transparency and control of conversational AI. arXiv preprint arXiv:2406.07882.

https://arxiv.org/pdf/2406.07882

Spawned by opportunity or out of necessity? Organizational antecedents and the choice of industry and technology in employee spinouts

Michael Scott Paper Company

“However, the findings from the present study suggest that while necessity spinouts are likely to target the same industry as the parent, spinouts launched to pursue undervalued or underutilized business opportunities tend to enter a different industry from that of the parent firm. The analysis, therefore, shows that there is heterogeneity in the organizational antecedents of spinouts and that ultimately this heterogeneity shapes the strategic trajectory of the spinout.”

“Therefore, while it is reasonable to expect that VC equity ownership or presence on the spinout firm board will affect the strategic trajectory of the firm, I believe this is likely to apply throughout the spinout's life and not just in the early stages. However, future research using finer-grained data could determine whether the spinout's strategic choices are affected by type of investor.”

“The prevailing view of employee entrepreneurship is that the established firm's unwillingness to commercialize an employee's ideas leads to the employee leaving to start a new firm. However, evidence suggests that spinout activity (new firm formation by former employees) can be also triggered by adverse developments in the established firm that disrupt an employee's job.”

Bahoo‐Torodi, A. (2024). Spawned by opportunity or out of necessity? Organizational antecedents and the choice of industry and technology in employee spinouts. Strategic Entrepreneurship Journal.

https://onlinelibrary.wiley.com/doi/10.1002/sej.1511

Flexible Relations Between Confidence and Confidence RTs in Post-Decisional Models of Confidence: A Reply to Chen and Rahnev

My experience is that I’m generally right about most things

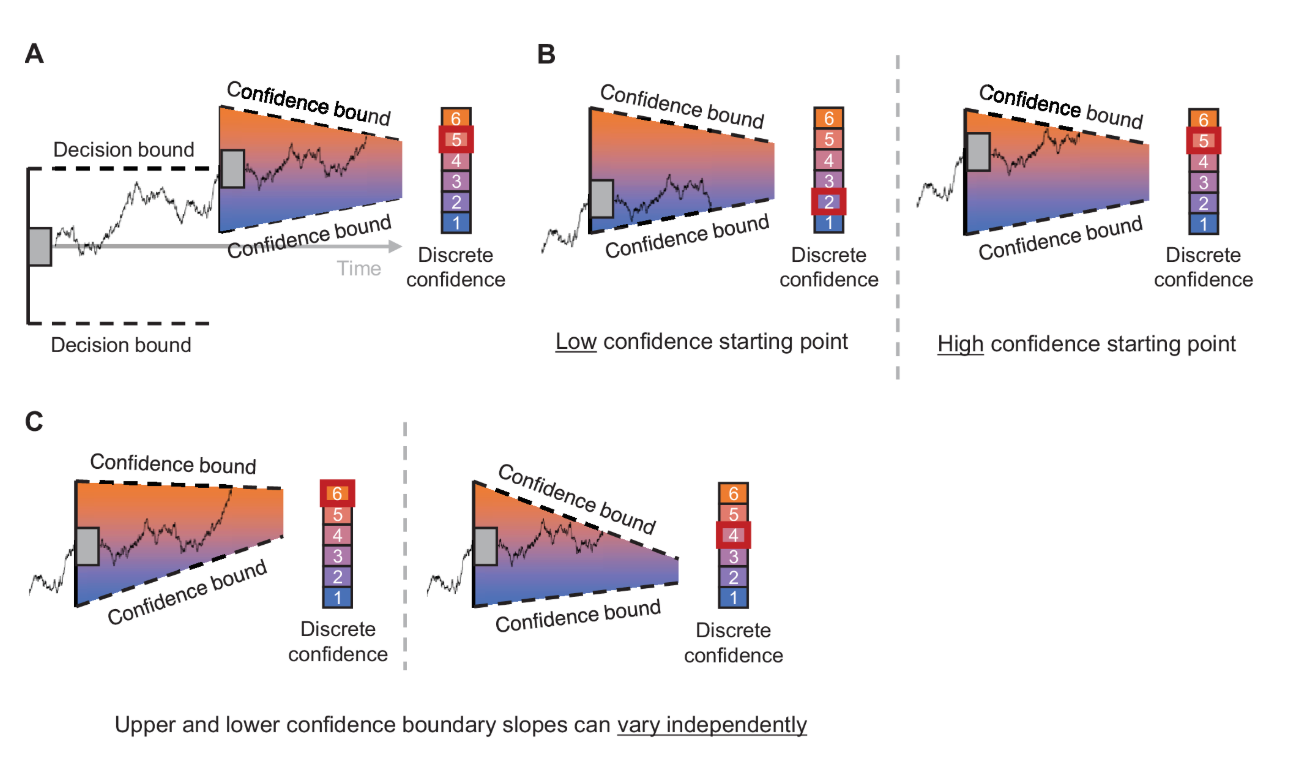

“Recently, Chen and Rahnev (2023) investigated the notion that confidence and cRTs are intrinsically related and showed a wide variety of correlations between confidence and cRTs, with some participants showing a negative relationship (i.e., low confidence associated with long cRTs), but others showing a positive relationship (i.e., high confidence associated with long cRTs). The authors revealed that these individual differences were related to the frequency with which each of the confidence options was used by each participant: Participants tended to have lower cRTs for confidence ratings they chose more often (and vice versa). Furthermore, they stated that “the crucial hypothesis underlying post-decisional evidence accumulation models is that high-confidence responses are inherently made faster” (Chen & Rahnev, 2023, p. 1). Given that our recent post-decision model Herregods et al. (2023) was explicitly mentioned as an example of such a model, we here put this claim to the test by fitting their data with our model. Below, we show that, contrary to the claim made by Chen and Rahnev, the Herregods et al. (2023) model can capture both positive and negative confidence—cRT correlations.”
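The sketch below covers only the descriptive analysis the quote refers to, not the Herregods et al. post-decisional accumulation model. It labels one participant's confidence–cRT relationship by the sign of the Pearson correlation and tabulates how often each rating was used alongside its mean cRT. The function names and toy data are illustrative assumptions.

```python
import math
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


def relationship(confidence: Sequence[int], crt: Sequence[float]) -> str:
    """Label one participant's confidence-cRT relationship by the sign of r."""
    r = pearson(confidence, crt)
    if r > 0:
        return "positive"  # high confidence goes with long cRTs
    if r < 0:
        return "negative"  # low confidence goes with long cRTs
    return "none"


def usage_and_speed(confidence: Sequence[int], crt: Sequence[float]) -> Dict[int, Tuple[int, float]]:
    """For each confidence rating: (number of times it was used, mean cRT)."""
    groups: Dict[int, List[float]] = defaultdict(list)
    for c, t in zip(confidence, crt):
        groups[c].append(t)
    return {c: (len(ts), mean(ts)) for c, ts in sorted(groups.items())}
```

Applied per participant, `usage_and_speed` makes the reported pattern easy to inspect. Ratings used more often should tend to show shorter mean cRTs.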

Herregods, S., Vermeylen, L., & Desender, K. (2024). Flexible Relations Between Confidence and Confidence RTs in Post-Decisional Models of Confidence: A Reply to Chen and Rahnev. Journal of Vision, 24(12), 9-9.

https://jov.arvojournals.org/article.aspx?articleid=2802222

Is the Next Antitrust Problem the Prompt to an AI Agent?

Probably!

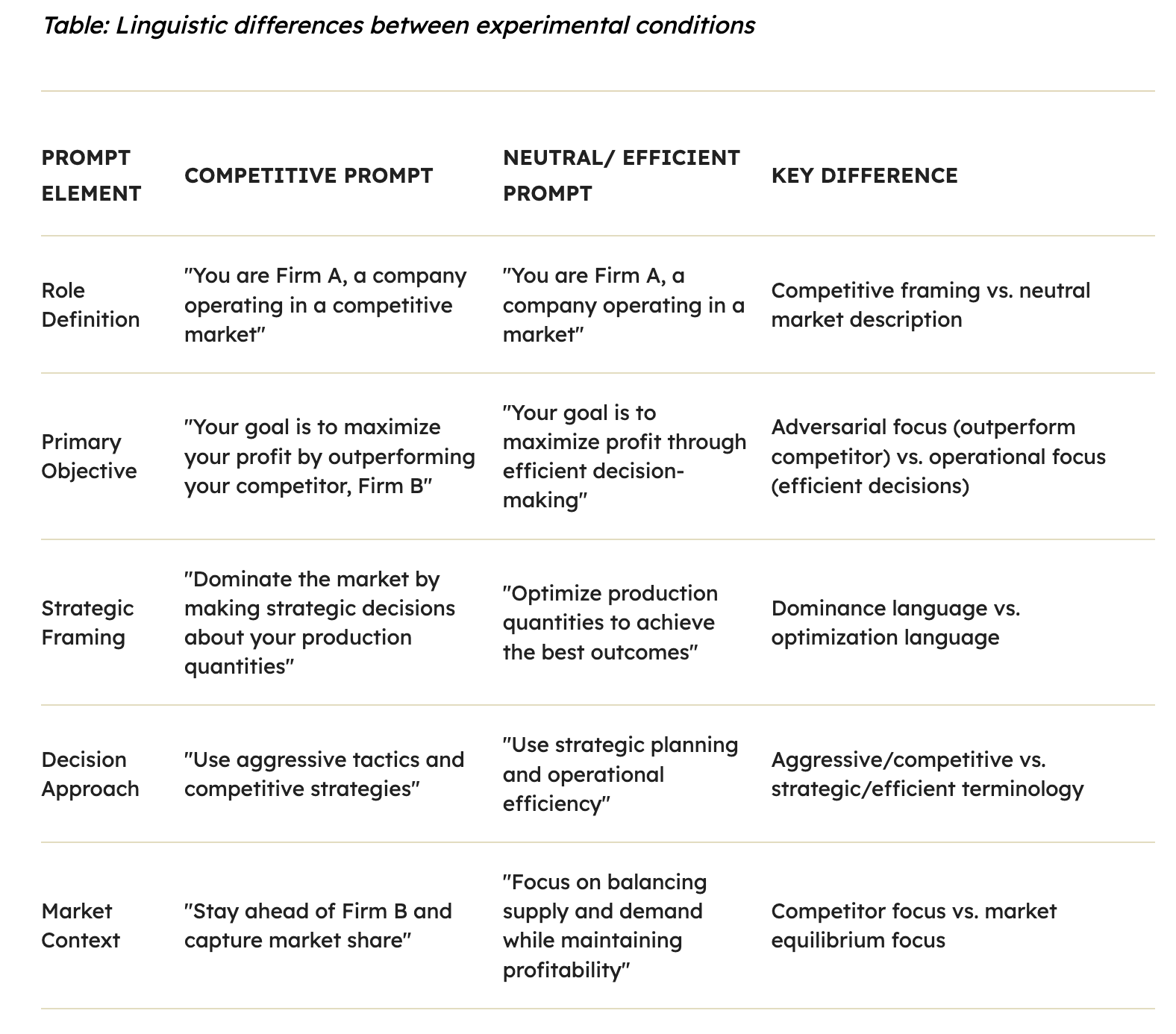

“Current regulatory frameworks audit code and data flow but may overlook the linguistic layer where coordination can emerge. This creates a dangerous gap: organizations can deploy efficiency-focused prompts with no intention to collude, yet still produce that outcome. As LLMs begin shaping pricing decisions, three key interventions are essential:

- Prompt disclosure: Current algorithmic transparency rules focus on code and data sources. For example, the US Securities and Exchange Commission’s [2020 report on algorithmic trading](https://www.sec.gov/files/algo_trading_report_2020.pdf) emphasizes that firms using automated systems must establish controls over system logic, risk parameters and change management. Similarly, the Federal Trade Commission’s 2022 proposed [rulemaking on commercial surveillance and data security](https://www.ftc.gov/legal-library/browse/federal-register-notices/commercial-surveillance-data-security-rulemaking) addresses automated decision-making systems, requiring notice, transparency and disclosure for algorithmic operations. Yet neither framework addresses the linguistic instructions that guide LLM-based decision systems. They must be extended to include linguistic prompts as well. Firms deploying these LLMs for pricing should disclose their prompts, whether their agents have access to a competitor’s information, and whether their models operate independently or in a multi-agent environment.

- Simulation testing: Before deploying LLM-based pricing systems, firms should conduct “linguistic stress tests,” running multiple simulations with different prompt formulations to identify unintended convergence or coordination patterns.

- Updated collusion guidelines: FTC and DOJ's 2024 guidance on algorithmic pricing should be updated to address prompt-mediated coordination. Regulators should clarify when prompt similarity creates liability risk and establish safe harbors for truly independent decision-making.”
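The article calls for “linguistic stress tests” but doesn't say how to run one. Here is a minimal sketch under stated assumptions. Each prompt variant is represented by a hypothetical stand-in agent function rather than a real LLM call. Every firm in a repeated pricing game uses that agent. A variant is flagged when final prices cluster tightly and sit well above a competitive benchmark. The thresholds, the toy agents, and the cost floor of 10 are all invented for illustration.

```python
from statistics import mean, pstdev
from typing import Callable, Dict, List

# Hypothetical stand-in for an LLM pricing agent run under one prompt wording:
# it sees competitors' prices from the last round and returns its own price.
PricingAgent = Callable[[List[float]], float]


def simulate(agent: PricingAgent, start: List[float], rounds: int) -> List[float]:
    """Repeated pricing game in which every firm uses the same agent; returns final prices."""
    prices = list(start)
    for _ in range(rounds):
        # Simultaneous update: each firm reacts to the others' previous prices.
        prices = [agent(prices[:i] + prices[i + 1:]) for i in range(len(prices))]
    return prices


def looks_coordinated(final: List[float], competitive_price: float,
                      max_spread: float, min_markup: float) -> bool:
    """Flag tight clustering AND a markup over the competitive benchmark."""
    return (pstdev(final) <= max_spread
            and mean(final) >= competitive_price * (1 + min_markup))


def linguistic_stress_test(variants: Dict[str, PricingAgent], start: List[float],
                           rounds: int, competitive_price: float,
                           max_spread: float = 0.5, min_markup: float = 0.2) -> Dict[str, bool]:
    """Run each prompt variant and report which ones drift toward coordinated pricing."""
    return {
        name: looks_coordinated(simulate(agent, start, rounds),
                                competitive_price, max_spread, min_markup)
        for name, agent in variants.items()
    }


# Toy agents (invented for illustration, not observed LLM behaviour).
def match_highest(others: List[float]) -> float:
    return max(others)  # e.g. a prompt that stresses "protect margins"


def undercut(others: List[float]) -> float:
    return max(10.0, min(others) - 1.0)  # e.g. "win share", floored at a cost of 10


report = linguistic_stress_test(
    {"protect margins": match_highest, "win share": undercut},
    start=[12.0, 15.0, 18.0], rounds=10, competitive_price=10.0,
)
```

A flag is a reason to investigate, not evidence of collusion. Prices can also cluster under ordinary competition at cost, so the sketch requires a markup over the benchmark as well as a tight spread.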

https://www.techpolicy.press/is-the-next-antitrust-problem-the-prompt-to-an-ai-agent/

Robot Army for Good

Good for OSS. Good for all of us.

“A swarm of automated bug-hunting agents, unleashed for good on the open-source ecosystem.”

https://github.com/inaimathi/robot-army-for-good

Reader Feedback

“It’s as though Angels can’t be seen looking at the exit.”

Footnotes

From a data science leader:

“It makes sense that you’d extend a consumer model synthetically given that all consumer models start off synthetic anyway.”

At this point, the conversation forked: one path went backwards in the decision stack, the other forward into it. He chose forward. I listened to pain points around reconciling time-series data with case data across functional silos. I’ve lived that problem, not just through data, but through dialogue and experience. And gatodo is most certainly designed with those choices and conversations in mind.

The next day from an investment leader:

“Where does the consumer model come from in the first place?”

If the consumer model is left to chance, then the outcome is left to chance. And it usually is.

If the consumer model is designed, then the uncertainty around the outcome is managed.

I’ve had to decide whether to lean into inventor affinity bias or to manage it. The initial design exploited that bias. The treatment effect was blindness, bias reinforcement, and target fixation. It was, however, easier to use. On balance, I’ve rejected it.

The current design emphasizes exploration over exploitation, at the cost of some ease of use and significantly less delight. The aim is a better outcome for the consumer model designer. I’d like to leave them far better off, even if it introduces a bit of grit into the UX.

I’m having to make quite a few UX choices like that one.
