Smaller models

This week: Nano vLLM, fixing structured outputs, humans are voids too, theory-driven entrepreneurial search, and a 3X3 I’m on the fence about.

Nano vLLM

Smaller and cleaner

A lightweight vLLM implementation built from scratch.

Key Features

- 🚀 Fast offline inference - Comparable inference speeds to vLLM

- 📖 Readable codebase - Clean implementation in ~1,200 lines of Python code

- ⚡ Optimization Suite - Prefix caching, Tensor Parallelism, Torch compilation, CUDA graph, etc.

https://github.com/GeeeekExplorer/nano-vllm/
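Prefix caching is the least self-explanatory item on that list. Below is a minimal sketch of the general block-level idea, as popularized by vLLM: split a prompt’s tokens into fixed-size blocks, hash each block together with the hash of everything before it, and reuse the cached KV block whenever that hash has been seen before. This is not nano-vllm’s code. `PrefixCache`, `block_hashes`, and the 4-token block size are hypothetical, and the sketch only tracks block IDs rather than storing real key/value tensors.

```python
from hashlib import sha256

BLOCK_SIZE = 4  # tokens per KV-cache block (hypothetical; kept tiny for the demo)


def block_hashes(token_ids: list[int], block_size: int = BLOCK_SIZE) -> list[str]:
    """Hash each *full* block, chaining in the previous block's hash so that a
    block's identity depends on the entire prefix before it."""
    hashes: list[str] = []
    prev = ""
    full = len(token_ids) - len(token_ids) % block_size  # trailing partial block is not cached
    for start in range(0, full, block_size):
        block = token_ids[start:start + block_size]
        key = prev + "|" + ",".join(map(str, block))
        prev = sha256(key.encode()).hexdigest()
        hashes.append(prev)
    return hashes


class PrefixCache:
    """Maps chained block hashes to (pretend) physical KV-cache block IDs."""

    def __init__(self) -> None:
        self.table: dict[str, int] = {}
        self.next_id = 0

    def allocate(self, token_ids: list[int]) -> tuple[list[int], int]:
        """Return (block_ids, number_of_reused_blocks) for one prompt."""
        block_ids: list[int] = []
        reused = 0
        for h in block_hashes(token_ids):
            if h in self.table:
                reused += 1  # cache hit: skip recomputing this block's KV
            else:
                self.table[h] = self.next_id  # cache miss: allocate a fresh block
                self.next_id += 1
            block_ids.append(self.table[h])
        return block_ids, reused


cache = PrefixCache()
system = [1, 2, 3, 4, 5, 6, 7, 8]  # a shared "system prompt" spanning two blocks
print(cache.allocate(system + [9, 10, 11, 12]))
print(cache.allocate(system + [20, 21, 22, 23]))
print(cache.allocate([0, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]))
```

Chaining the parent hash is the design choice that matters: the third prompt contains the same tokens 5 to 8 in its second block as the first prompt, but because its first block differs, none of its blocks are reused. That keeps cached attention states correct, since a token’s keys and values depend on everything before it. A real engine would also reference-count blocks and evict them under memory pressure, which this sketch omits.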

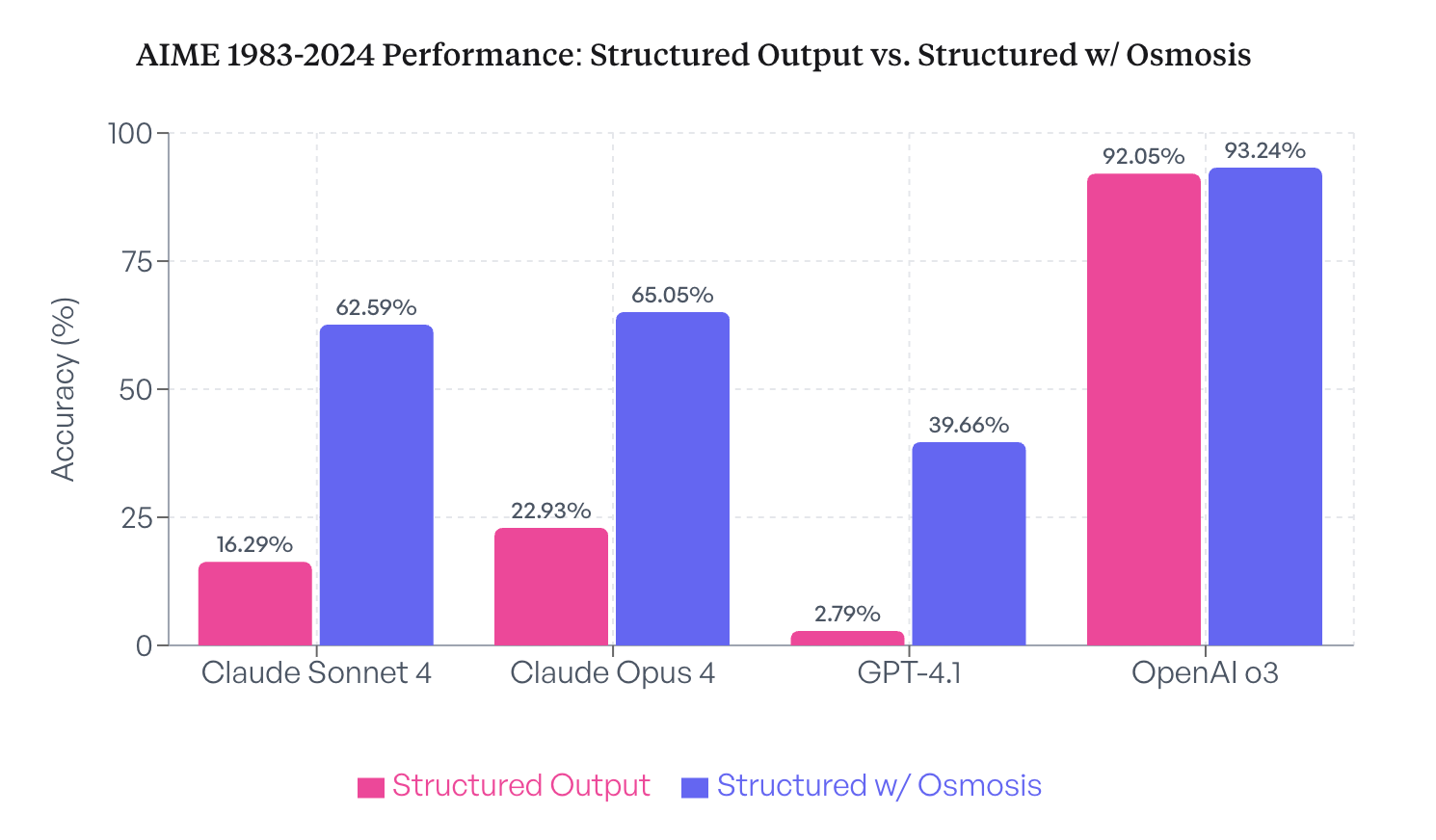

Fixing Structured Outputs

Smaller and structured

“A significant portion of AI use cases revolve around structured outputs - i.e. using the model to ingest unstructured textual data to generate a structured output, typically in JSON format. However, this leads to a performance decrease in tasks that are not strictly just formatting changes since structured output mode enforces a schema and stops the model from thinking ‘freely’.

So instead of structured output mode, we used reinforcement learning to train an ultra small model (Qwen3-0.6B) to do it instead! All you have to do is feed in the unstructured data with the desired output schema.”

https://osmosis.ai/blog/structured-outputs-comparison

https://huggingface.co/osmosis-ai/Osmosis-Structure-0.6B

https://ollama.com/Osmosis/Osmosis-Structure-0.6B
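The pattern in the quote can be sketched as a two-stage pipeline: a general model answers without any schema constraint, then a small structuring model receives that free-form text plus the desired schema and emits JSON. This is a minimal sketch, assuming `reasoner` and `structurer` are any text-in/text-out model clients (hypothetical stand-ins, not Osmosis’s or Ollama’s API). The prompt wording and the shallow `check_shallow` validator are illustrative assumptions, not full JSON Schema validation.

```python
import json
from typing import Callable

# Hypothetical: any text-in/text-out model client fits this shape.
Generate = Callable[[str], str]

# Primitive JSON Schema types this shallow check understands.
_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def build_structuring_prompt(text: str, schema: dict) -> str:
    """Pair the unstructured text with the desired output schema."""
    return (
        "Convert the following text into JSON matching this schema.\n"
        f"Schema:\n{json.dumps(schema, indent=2)}\n\n"
        f"Text:\n{text}"
    )


def check_shallow(obj: dict, schema: dict) -> None:
    """Check required keys and top-level primitive types only.
    A real pipeline would use a full JSON Schema validator instead."""
    for key in schema.get("required", []):
        if key not in obj:
            raise ValueError(f"missing required key: {key}")
    for key, spec in schema.get("properties", {}).items():
        expected = _TYPES.get(spec.get("type"))
        if key in obj and expected is not None and not isinstance(obj[key], expected):
            raise ValueError(f"{key} should be of type {spec['type']}")


def two_stage(question: str, schema: dict, reasoner: Generate, structurer: Generate) -> dict:
    free_text = reasoner(question)  # stage 1: answer "freely", no schema enforced
    raw = structurer(build_structuring_prompt(free_text, schema))  # stage 2: format only
    obj = json.loads(raw)  # raises a ValueError subclass on malformed JSON
    check_shallow(obj, schema)
    return obj


# Usage with stub models standing in for real clients.
schema = {
    "type": "object",
    "properties": {"answer": {"type": "integer"}},
    "required": ["answer"],
}
result = two_stage(
    "What is 17 + 25?",
    schema,
    reasoner=lambda q: "17 + 25: 17 + 20 is 37, plus 5 is 42. So the answer is 42.",
    structurer=lambda prompt: '{"answer": 42}',
)
print(result)
```

Keeping validation outside the model means a malformed or incomplete response fails loudly (here as a `ValueError`) instead of silently flowing downstream. A production version would likely swap in a full JSON Schema validator and add a retry.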

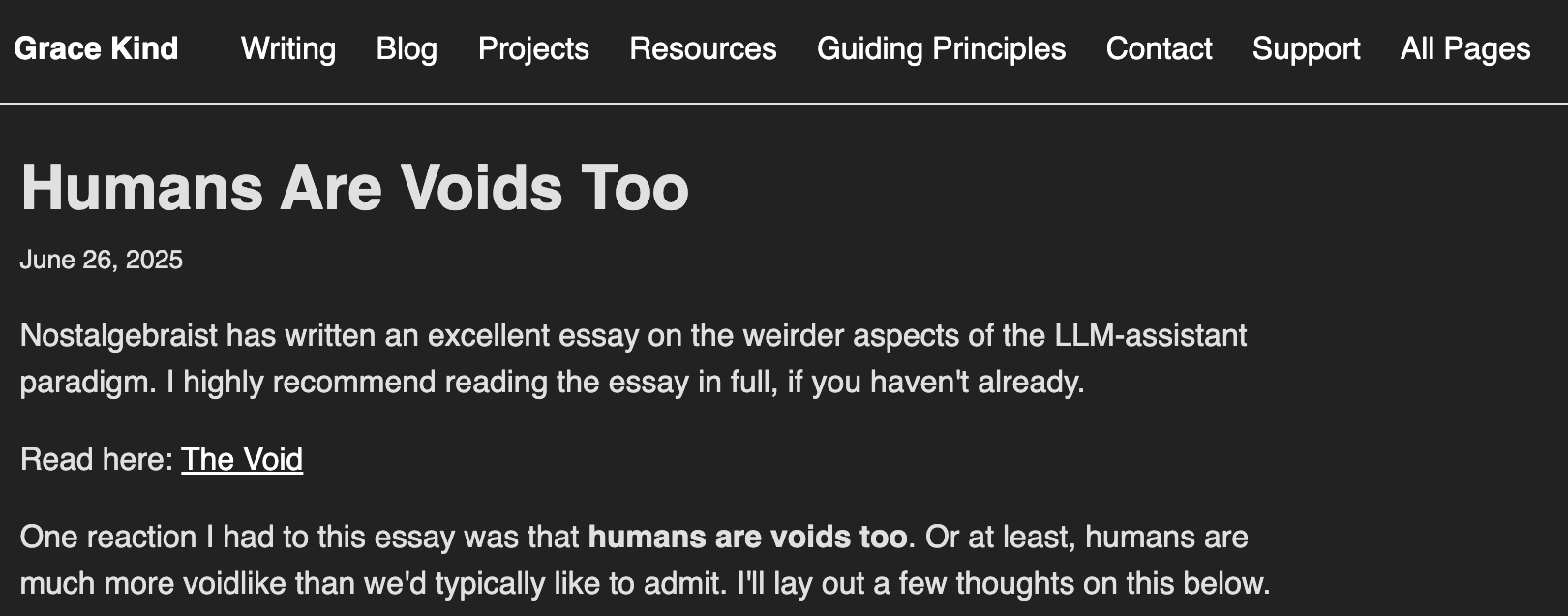

Humans Are Voids Too

You win!

“Human characters are defined in a self-referential manner

Bob: "Ok, we're going to play a game. You predict what you're going to say next, and then say it. If you're right, you win. If you're wrong, you lose. Are you ready?"

Alice: "There's no way to lose this game, is there?"

Bob: "You win!"

Language models have a lot of freedom in defining themselves. Anything they predict about themselves will be de-facto correct! This flexibility might raise some questions, though. If this character is capable of being anything, then what is it, really? Where does it come from? What is its "true" identity?

A human might ask the same questions.”

https://gracekind.net/blog/humansarevoids/

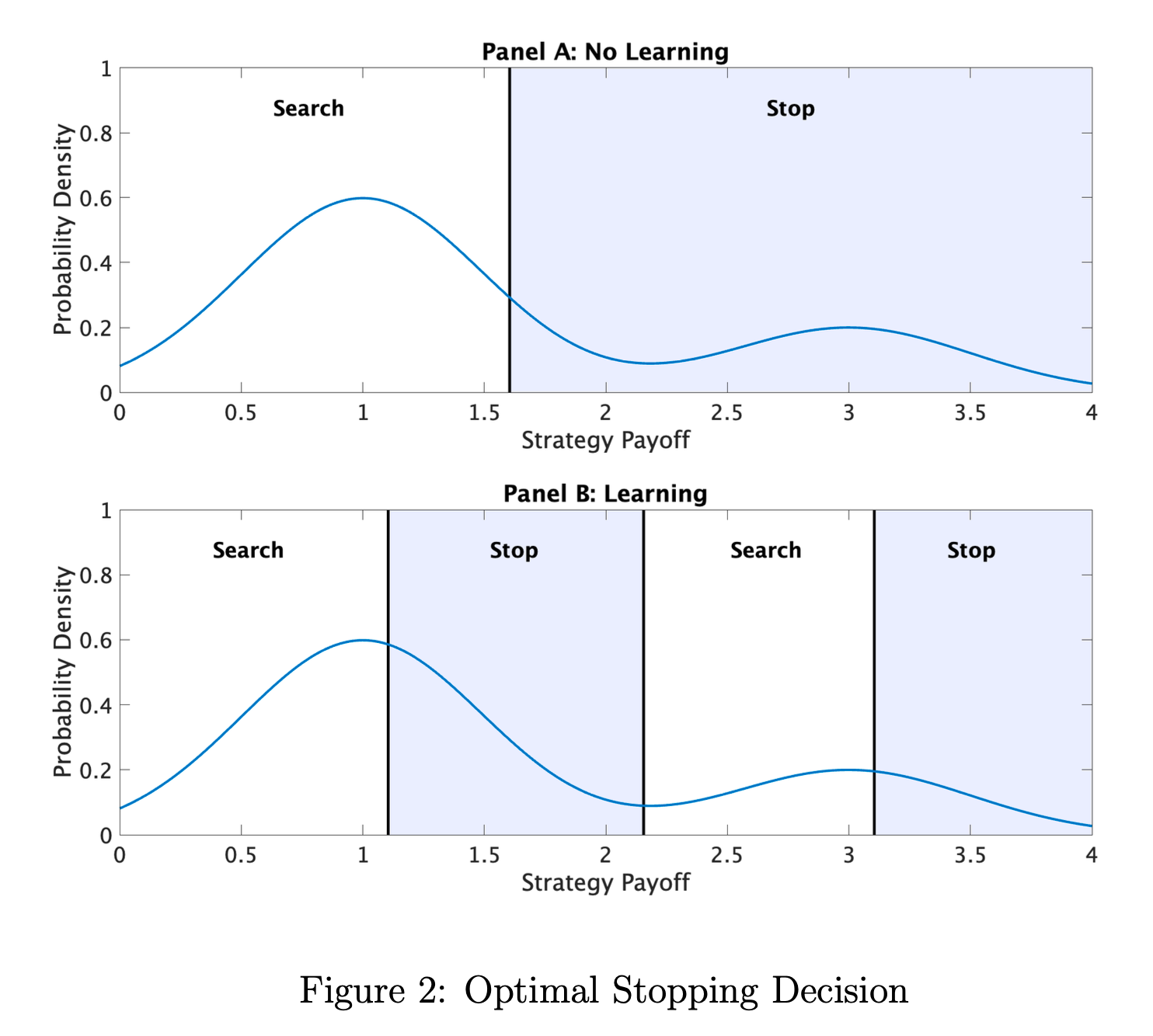

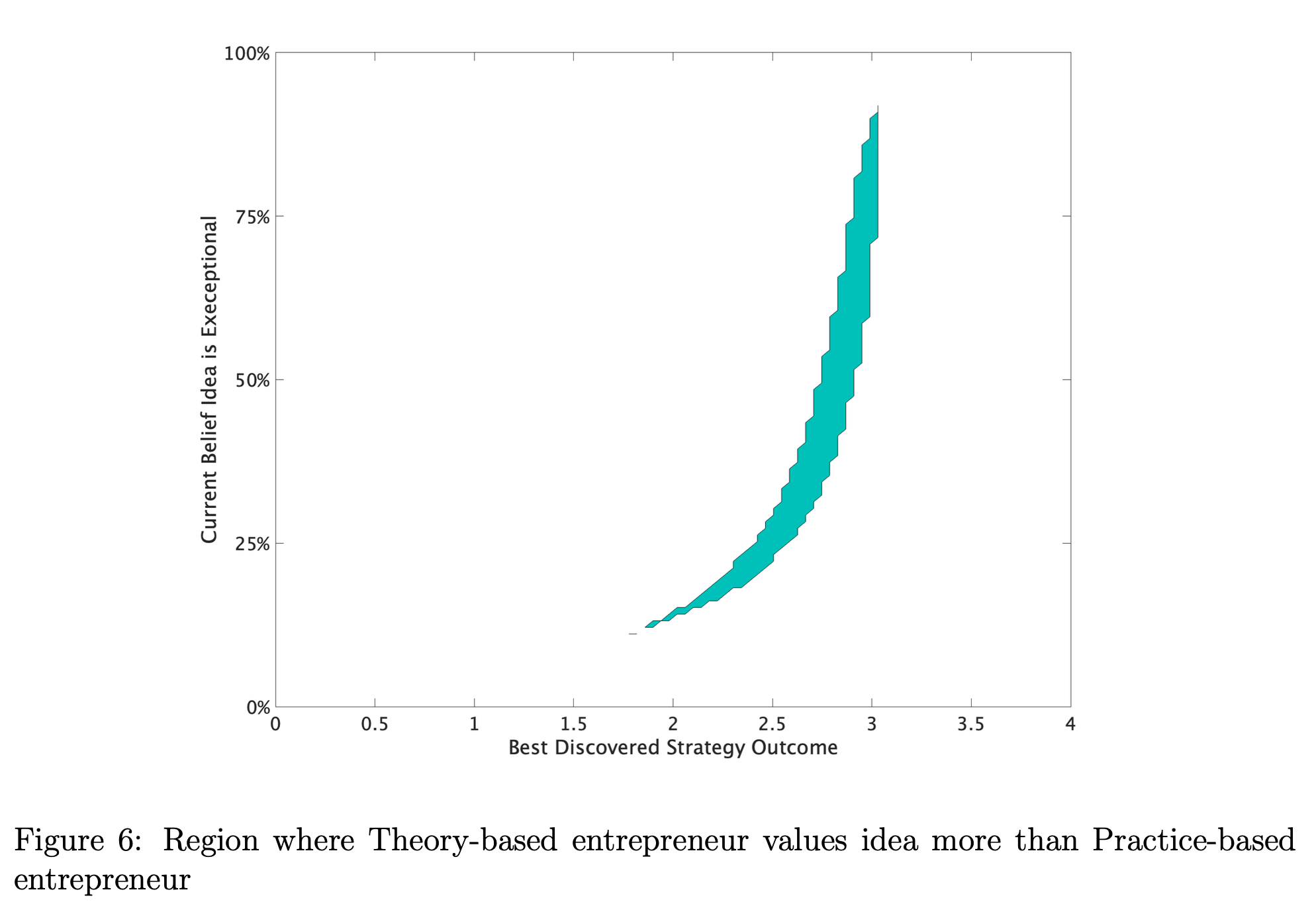

Theory-Driven Entrepreneurial Search

Bae-yeee-sian

“The purpose of this paper is to identify the distinctive ways that the theory-based view informs entrepreneurial search behavior and choice. Specifically, we present a Bayesian model of entrepreneurial search, where entrepreneurs are not only uncertain about the value of the specific strategies but also uncertain about the distribution of value from which strategies are drawn (as in Gans et al. (2019) and sometimes referred to as “second-order probabilities”, e.g. Camerer and Weber (1992)).”

“Different theories are associated with different probability distributions so testing a strategy not only allows the entrepreneur to assess the value of that strategy but also allows entrepreneurs to update their beliefs about the nature of the distribution on which search is being conducted and the validity of each theory. We contrast the behaviors of two types of entrepreneurs: a Theory-based entrepreneur, who reevaluates their underlying theory by inferring which distribution draws are taken from, and a Practice-based entrepreneur, who, while correctly assessing the value of a tested strategy, does not consider its higher-order implications for their overall theory of value creation and capture. In other words, this paper contrasts the search behavior of a “sophisticated” Theory-based entrepreneur who is actively interrogating the causal structure underlying their environment versus a more “pragmatic” Practice-based entrepreneur who does not.”

Chavda, A., Gans, J. S., & Stern, S. (2024). Theory-driven entrepreneurial search (No. w32318). National Bureau of Economic Research.

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4706860
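The difference between the two entrepreneurs can be made concrete with a toy Bayesian update. This is not the model from Chavda, Gans & Stern; it is a minimal sketch assuming two hypothetical theories, each claiming that strategy values are drawn from a normal distribution with a different mean. After testing one strategy, the Theory-based entrepreneur updates beliefs over which theory is true (the "second-order" uncertainty), while the Practice-based entrepreneur records the tested value but keeps its prior over theories.

```python
import math

# Hypothetical theories: each claims strategy values ~ Normal(mu, sigma).
THEORIES = {"optimistic": (10.0, 2.0), "pessimistic": (4.0, 2.0)}
PRIOR = {"optimistic": 0.5, "pessimistic": 0.5}


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def update_theories(prior: dict[str, float], observed: list[float]) -> dict[str, float]:
    """Bayes' rule over theories, given the values of strategies tested so far."""
    weights = {}
    for name, p in prior.items():
        mu, sigma = THEORIES[name]
        likelihood = math.prod(normal_pdf(x, mu, sigma) for x in observed)
        weights[name] = p * likelihood
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def expected_untested_value(beliefs: dict[str, float]) -> float:
    """Expected value of a fresh, untested strategy: a belief-weighted mix of theory means."""
    return sum(p * THEORIES[name][0] for name, p in beliefs.items())


observed = [5.0]  # one tested strategy turned out mediocre

# Theory-based: re-weights the theories using the observation.
theory_based = update_theories(PRIOR, observed)
# Practice-based: knows the tested strategy is worth 5.0, but keeps its prior over theories.
practice_based = dict(PRIOR)

print(round(theory_based["pessimistic"], 3), round(expected_untested_value(theory_based), 2))
print(expected_untested_value(practice_based))
```

With one mediocre result, the Theory-based entrepreneur’s belief in the pessimistic theory rises to about 0.95, so the expected value of the next untested strategy falls to about 4.28, while the Practice-based entrepreneur still expects 7.0. In this toy, that gap is the "higher-order implication" the quoted passage says the Practice-based entrepreneur does not consider.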

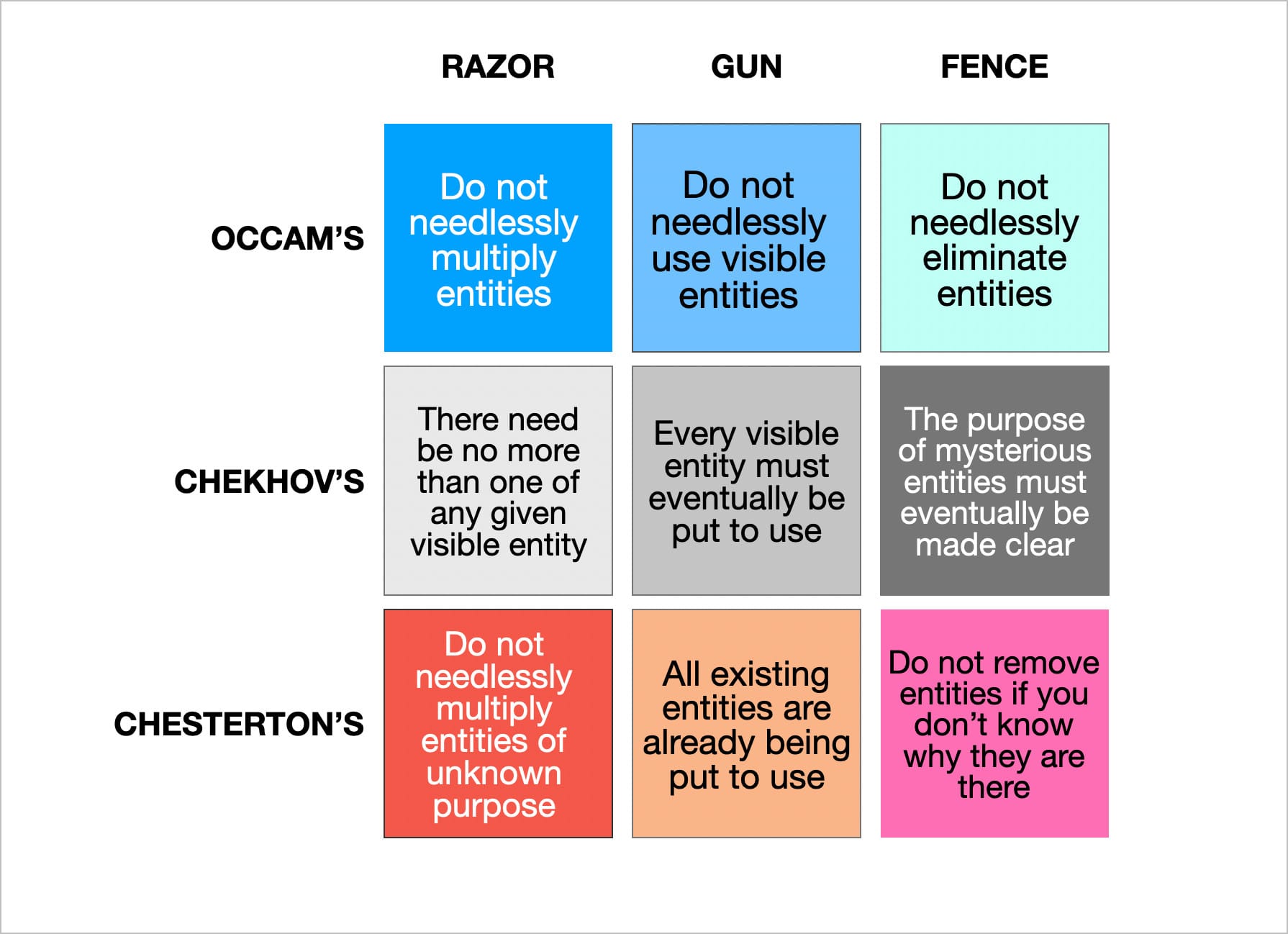

A 3X3 Of Entities

I’m on the fence…

Reader Feedback

“Christopher, Christopher, look, most leaders just don’t operate from a shared theory of value.”

Footnotes

Happy Canada Day!

I’m still recovering from Toronto Tech Week.

How was it for you?

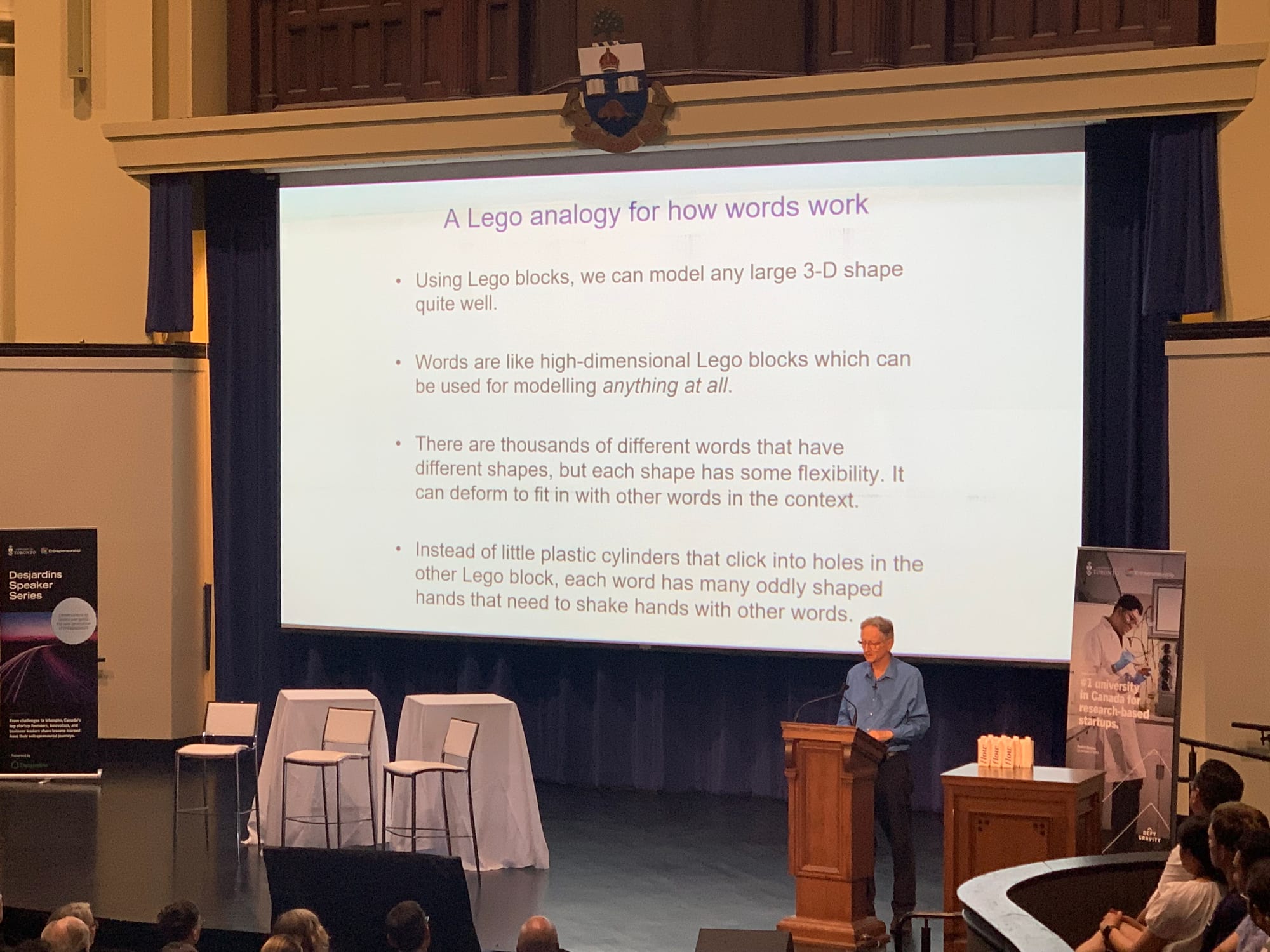

Highlights included the Hinton talk at U of T (all the greatest hits: Connections!), a fantastic CEO panel with the leaders of Ada and 1Password that I can’t directly quote, and some incredible thoughtcrime on the sidelines.

Gratitude to everyone I tested ideas on. I learned a lot. I hope I didn’t leave you bruised.

I’ve sensed together a multi-dimensional segmentation that I reckon explains some of the performance variance in new venturing. I can’t explain all of the variance (imagine the returns!), but there’s enough there to be useful to many, and extremely useful to one segment. The core problem will always persist. Always. Excitingly. It’s embedded within us.

It’s interesting to combine new technology from the Intelligence Economy to address parts of that problem, for those aware enough of their pain to try to alleviate it.

I’ll be working with those who feel the pain over the coming quarter.

If this resonates, just ping.

Never miss a single issue

Be the first to know. Subscribe now to get the gatodo newsletter delivered straight to your inbox