The Rivalry Engine

This week: Team of Rivals, Professional software developers, AI consciousness, Customer base analysis, Skipping the factory, Neuronpedia, Flapping Airplanes

If You Want Coherence, Orchestrate a Team of Rivals: Multi-Agent Models of Organizational Intelligence

“Consider the difference between a solo worker and an entire office.”

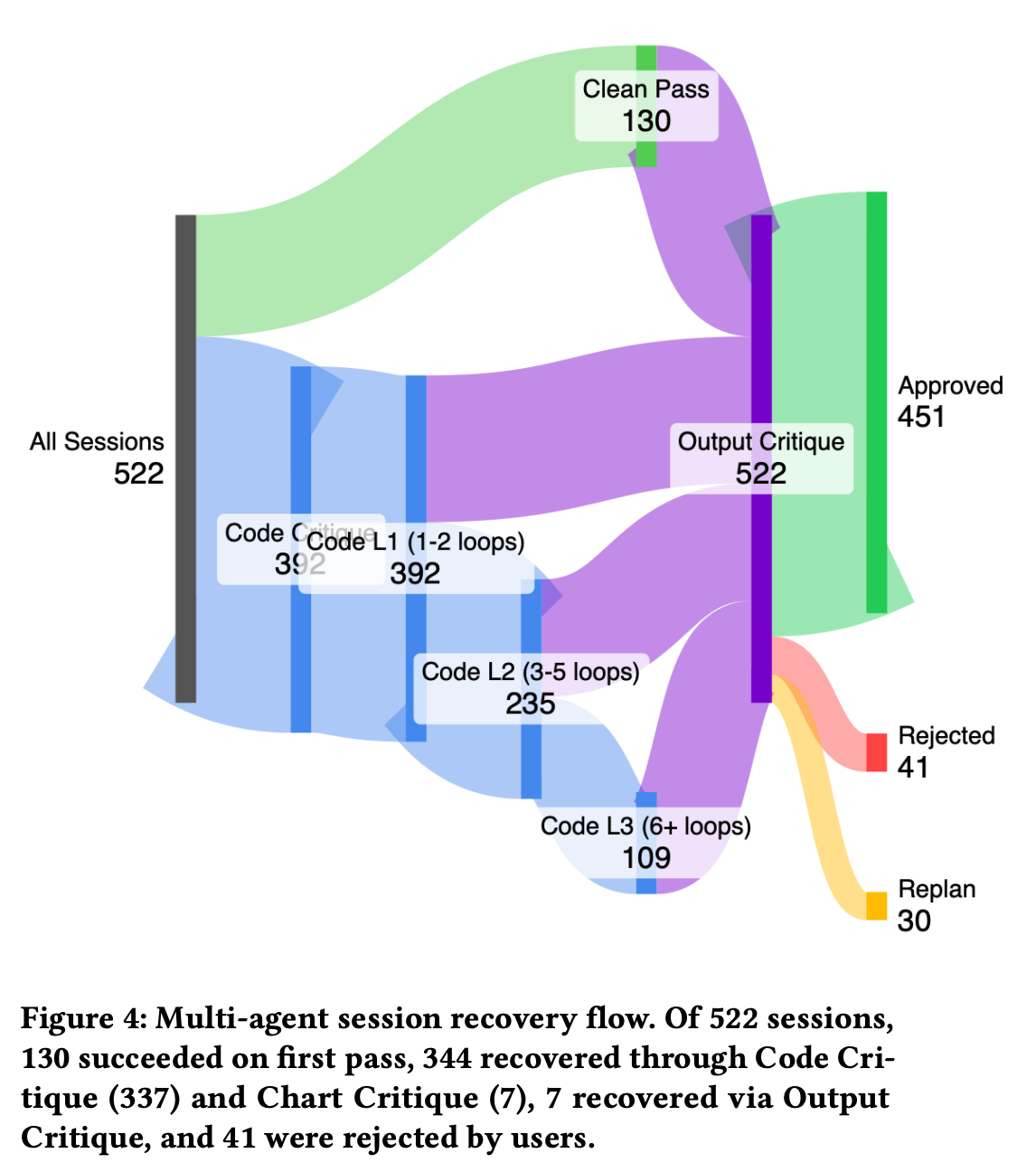

“Rather than agents directly calling tools and ingesting full responses, they write code that executes remotely; only relevant summaries return to agent context. By preventing raw data and tool outputs from contaminating context windows, the system maintains clean separation between perception (brains that plan and reason) and execution (hands that perform heavy data transformations and API calls). We demonstrate the approach achieves over 90% internal error interception prior to user exposure while maintaining acceptable latency tradeoffs. A survey from our traces shows that we only trade off cost and latency to achieve correctness and incrementally expand capabilities without impacting existing ones.”

“Our system comprises 50+ specialized agents organized into teams with distinct roles: planners generate execution strategies, executors perform work, critics validate outputs against pre-declared criteria, and a remote code executor maintains clean separation between reasoning and data transformation.”

“The remote code executor [7] keeps data transformations and tool invocations separate from reasoning models, preventing raw data and tool outputs from contaminating agent context windows. Rather than agents directly calling tools (which would inject full responses into their context), they write code that invokes tools like MCP servers; tool responses remain in the remote execution layer, with only relevant summaries returning to agents. Agents perceive results through summaries and schemas rather than full datasets or raw API responses, maintaining the separation between perception (brains that plan and reason) and execution (hands that perform heavy data transformations and API calls). This architecture solves the tool complexity trap: rather than burdening a single agent with 50+ tools and an unwieldy prompt containing massive datasets and tool outputs, each specialized agent reasons about what to do while the remote executor handles the dirty work.”
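The brains/hands split described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the authors' system. `RemoteExecutor`, `Summary`, `planner`, `critic` and `fake_tool` are hypothetical names, and the "tool" is a local function rather than a real MCP server. The sketch shows one thing: the 10,000 raw rows stay inside the executor, while the planner and critic only ever see a schema, a row count and a one-row preview.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Summary:
    """What returns to an agent's context: schema and statistics, never raw rows."""
    columns: list[str]
    row_count: int
    preview: dict[str, object]


class RemoteExecutor:
    """Hypothetical stand-in for a remote code executor.

    Raw tool output is kept in self._store; only Summary objects go back to agents.
    """

    def __init__(self) -> None:
        self._store: dict[str, list[dict]] = {}

    def run(self, key: str, code: Callable[[], list[dict]]) -> Summary:
        rows = code()              # heavy work and tool calls happen here ("hands")
        self._store[key] = rows    # the full payload never enters agent context
        columns = sorted(rows[0].keys()) if rows else []
        return Summary(columns=columns, row_count=len(rows),
                       preview=rows[0] if rows else {})


def planner(goal: str) -> list[str]:
    # Hypothetical planner ("brain"): emits the steps to execute.
    return [f"fetch:{goal}"]


def critic(summary: Summary, min_rows: int) -> bool:
    # Checks a pre-declared criterion using only the summary, not the data.
    return summary.row_count >= min_rows and "amount" in summary.columns


def fake_tool() -> list[dict]:
    # Stands in for an API or MCP tool call that returns a large payload.
    return [{"id": i, "amount": i * 10} for i in range(10_000)]


executor = RemoteExecutor()
for step in planner("orders"):
    summary = executor.run(step, fake_tool)
    ok = critic(summary, min_rows=1)
    print(step, summary.row_count, summary.columns, ok)
```

A real system would run the code in a sandbox on another machine. Here the separation is only logical, but the interface is the point: agents exchange summaries, and the executor holds the data.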

Vijayaraghavan, G., Jayachandran, P., Murthy, A., Govindan, S., & Subramanian, V. (2026). If You Want Coherence, Orchestrate a Team of Rivals: Multi-Agent Models of Organizational Intelligence. arXiv preprint arXiv:2601.14351.

https://arxiv.org/abs/2601.14351

Professional Software Developers Don't Vibe, They Control: AI Agent Use for Coding in 2025

“experienced developers do not currently vibe code”

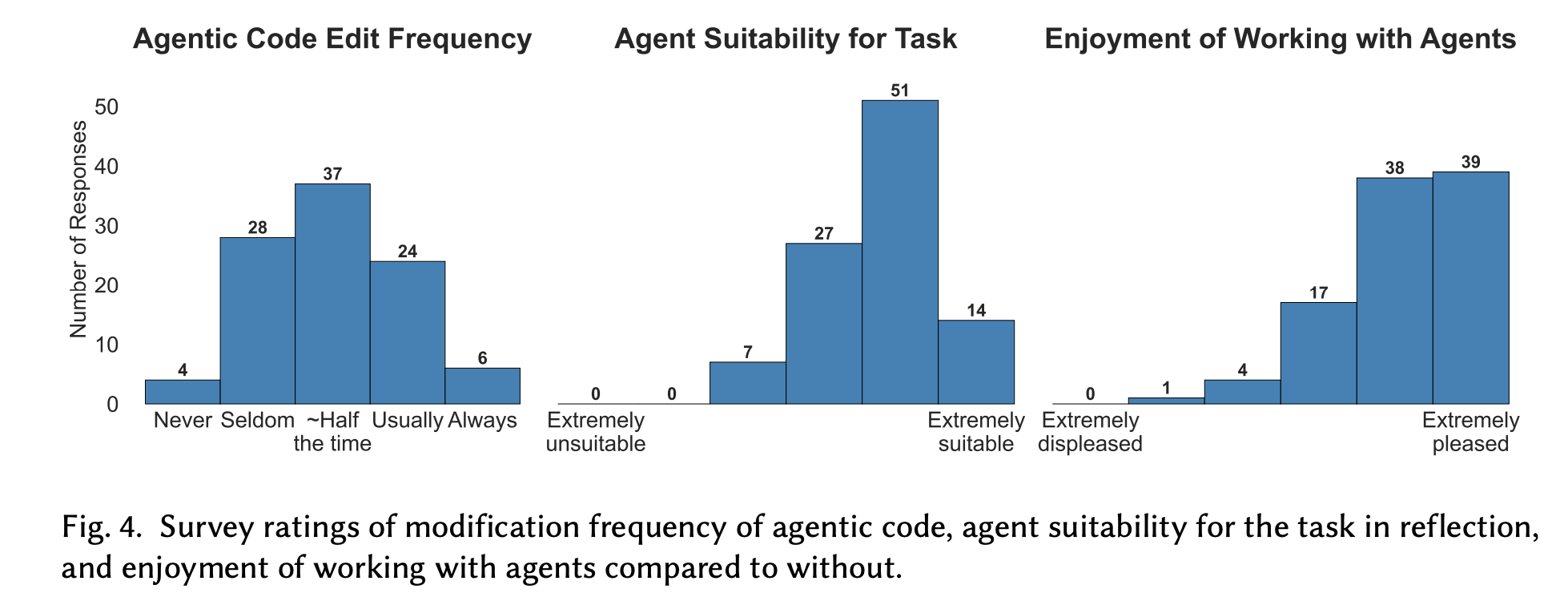

“This paper investigates how experienced developers use agents in building software, including their motivations, strategies, task suitability, and sentiments. Through field observations (N=13) and qualitative surveys (N=99), we find that while experienced developers value agents as a productivity boost, they retain their agency in software design and implementation out of insistence on fundamental software quality attributes, employing strategies for controlling agent behavior leveraging their expertise.”

“249 out of 4,141 users responded to the survey, resulting in a response rate of around 6%, comparable to other research surveys in software engineering [19, 20]. Among the 249 responses, 104 responses were valid—respondents were at least 18 years of age, had at least 3 years of professional experience in software development, and used at least one agentic tool per our definition at the beginning of this section.”
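As a quick check on the survey arithmetic quoted above, the response rate and the share of valid responses work out as follows. This is a trivial Python sketch that uses only the figures in the quote.

```python
def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


invited, responded, valid = 4_141, 249, 104
response_rate = pct(responded, invited)  # share of invited users who answered
valid_rate = pct(valid, responded)       # share of answers that passed screening
print(response_rate, valid_rate)
```

This prints `6.0 41.8`. Roughly 6% of invited users responded, and about 42% of those responses met the age, experience and tool-use criteria.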

“Takeaway 3a: Experienced developers find agents suitable for accelerating straightforward, repetitive, and scaffolding tasks if prompted with well-defined plans. Beyond writing new code and prototyping, these suitable tasks include writing tests, documentation, general refactoring and simple debugging.”

“Takeaway 3b: But, as task complexity increases, agent suitability decreases. Experienced developers find agents unsuitable for tasks requiring domain knowledge such as business logic, and no respondent said agents could replace human decision making, in part because the generated code is not perfect on the first shot.”

“Takeaway 3c: Experienced developers disagree about using agents for software planning and design. Some avoided agents out of concern over the importance of design, while others embraced back-and-forth design with an AI.”

“We find that experienced developers do not currently vibe code. Instead, they carefully control the agents through planning and active supervision because they care about software quality. Although they may not always read code to validate agentic output, they are careful not to lose control and do not let agents run completely autonomously, particularly having the agents only work on a few tasks at a time.”

Huang, R., Reyna, A., Lerner, S., Xia, H., & Hempel, B. (2025). Professional Software Developers Don't Vibe, They Control: AI Agent Use for Coding in 2025. arXiv preprint arXiv:2512.14012.

https://arxiv.org/pdf/2512.14012

AI consciousness: A centrist manifesto

Alien forms of consciousness

“In the case of AI, the question becomes: could an AI system be more like the child than the ball in that respect? Could it be the sort of system such that a situation feels like something from its point of view?”

“The challenge here is that profoundly alien forms of consciousness might be genuinely achieved in AI, but our theoretical understanding of consciousness at present is too immature to provide confident answers about this one way or another. This too is a major challenge for the industry, for policymakers, and for researchers in science and philosophy.”

“The speculative claims on both sides sometimes look untestable simply because the research programs needed to test them are at a very early stage. But I don't see a good argument for their in-principle untestability. We can visualize the kinds of research programs through which they might be tested, and those programs should be pursued urgently. A mature science of animal consciousness, able to test alleged dependencies of consciousness markers on biological properties through comparative studies, may feel like a worryingly long road, but I think it’s a road we need to take.”

Birch, J. (2025). AI consciousness: A centrist manifesto. Manuscript at https://philpapers.org/rec/BIRACA-4.

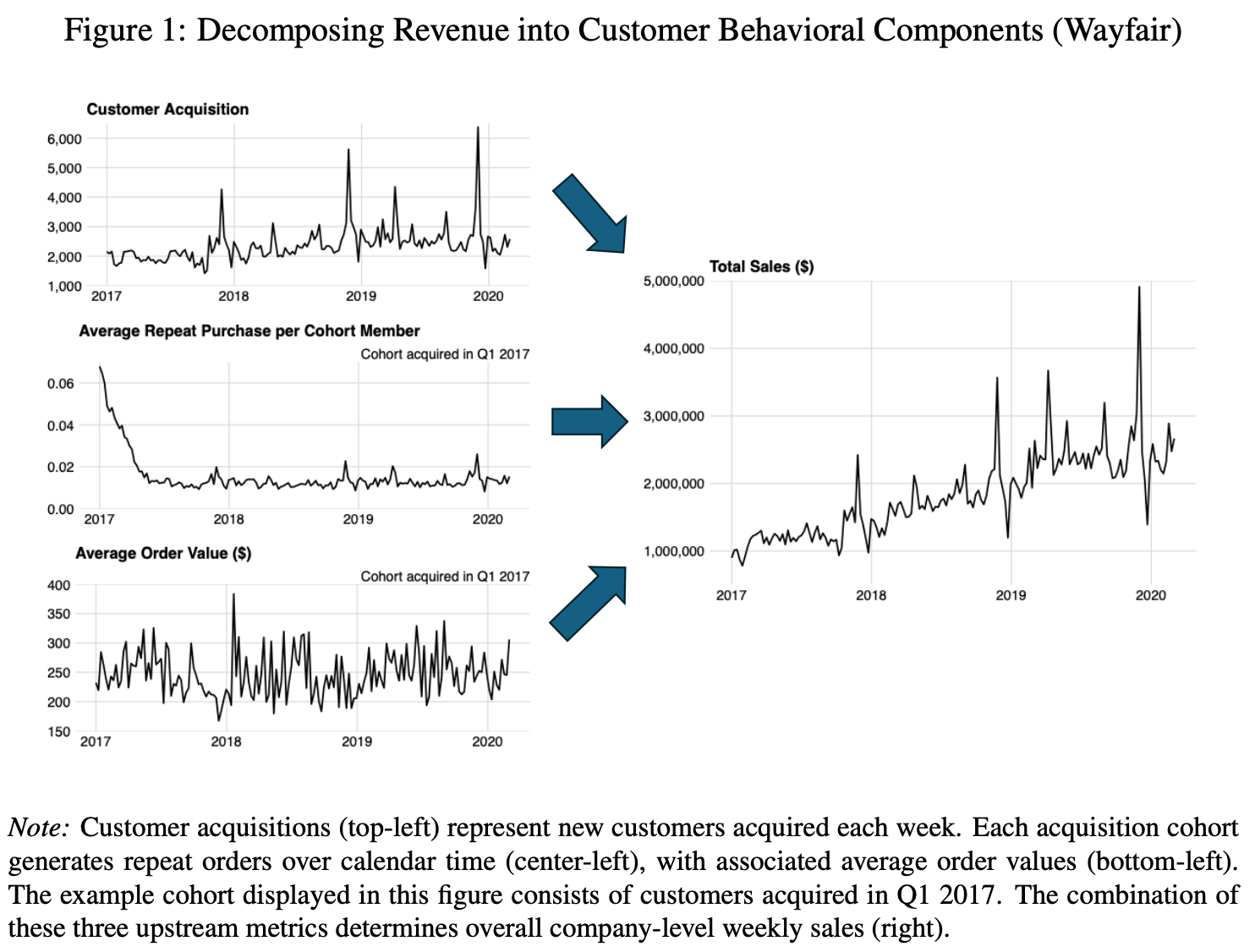

Multi-Task Learning for Customer Base Analysis: Evidence from 966 Companies

The CBMT outperforms Wall Street consensus estimates by 28-34%

“This paper develops the customer-based multi-task transformer (CBMT), a prediction framework that addresses these gaps through a flexible multi-task learning architecture. The approach leverages shared representations across behavioral processes while maintaining task-specific specialization, enabling joint prediction of upstream behaviors (acquisition, repeat purchases, order values) and downstream outcomes (cohort revenue, total sales). It incorporates downstream revenue objectives as auxiliary tasks to ensure behavioral predictions translate coherently into financial projections. Validation on 966 companies across 25 industries over 37 months shows 35-66% improvement in revenue prediction accuracy over benchmark models, with CBMT performing best for 60–70% of firms. The CBMT outperforms Wall Street consensus estimates by 28-34%.”

“By jointly optimizing for upstream behavioral accuracy and downstream revenue coherence, our framework bridges the gap between aggregate business outcomes and the granular behaviors that drive them.”
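Using downstream revenue as an auxiliary task can be illustrated with a toy loss function. This is not the CBMT, which is a transformer with shared representations. It is a minimal Python sketch that assumes revenue equals total orders times average order value. `Behavior`, `joint_loss` and `aux_weight` are hypothetical names, and relative squared errors keep counts and dollars on comparable scales. The sketch shows how the auxiliary term penalizes behavioral predictions that look equally good task by task but add up to the wrong revenue.

```python
from dataclasses import dataclass


@dataclass
class Behavior:
    acquisitions: float   # new customers acquired in the period
    repeat_orders: float  # orders placed by existing customers
    order_value: float    # average spend per order


def implied_revenue(b: Behavior) -> float:
    # Assumption: one order per new customer, so revenue = total orders x order value.
    return (b.acquisitions + b.repeat_orders) * b.order_value


def rel_sq(pred: float, actual: float) -> float:
    # Relative squared error, so different units are comparable.
    return ((pred - actual) / actual) ** 2


def joint_loss(pred: Behavior, obs: Behavior, obs_revenue: float,
               aux_weight: float = 0.5) -> float:
    """Upstream behavioral losses plus a weighted auxiliary revenue-coherence loss."""
    upstream = (rel_sq(pred.acquisitions, obs.acquisitions)
                + rel_sq(pred.repeat_orders, obs.repeat_orders)
                + rel_sq(pred.order_value, obs.order_value))
    coherence = rel_sq(implied_revenue(pred), obs_revenue)
    return upstream + aux_weight * coherence


observed = Behavior(acquisitions=100, repeat_orders=300, order_value=50.0)
revenue = 20_000.0  # 400 orders x $50

pred_a = Behavior(110, 290, 50.0)  # errors offset: implied revenue is still 20,000
pred_b = Behavior(110, 310, 50.0)  # same-size errors, but implied revenue is 21,000
loss_a = joint_loss(pred_a, observed, revenue)
loss_b = joint_loss(pred_b, observed, revenue)
print(round(loss_a, 5), round(loss_b, 5))
```

Both candidates miss acquisitions and repeat orders by the same amounts. Only the second implies the wrong revenue, so the auxiliary term ranks it worse. In a trained model, that pressure pushes the shared representation toward behavioral predictions that are financially consistent.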

Kim, K. K., McCarthy, D., & Lee, D. (2025). Multi-Task Learning for Customer Base Analysis: Evidence from 966 Companies. Available at SSRN 5713643.

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5713643

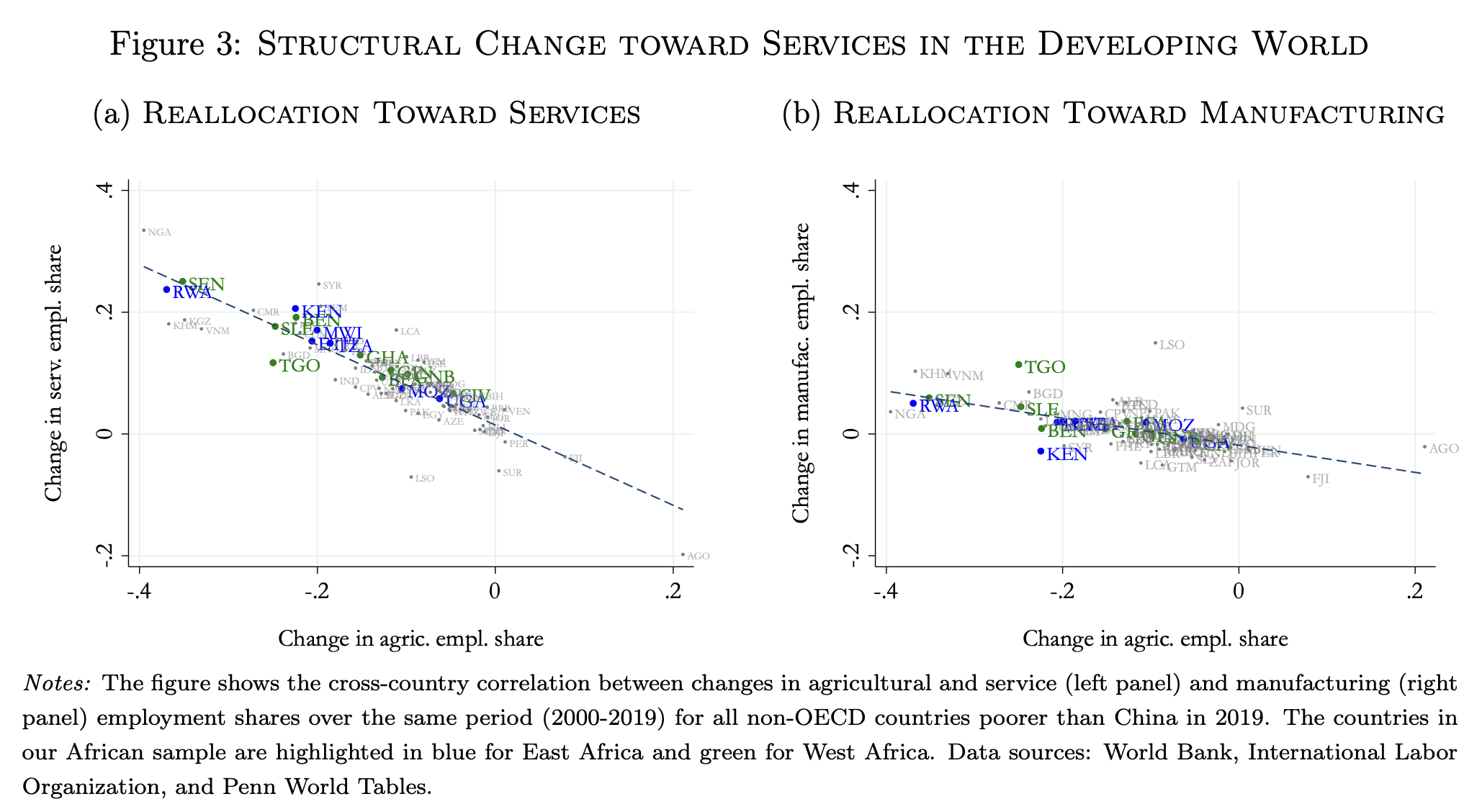

Skipping the factory: Service-led growth and structural transformation in the developing world

“many economies appear to bypass industrialization and transition directly from agriculture to services”

“At the same time, the welfare effects are uneven: productivity growth in tradables tends to benefit poorer households across space, whereas service-led growth delivers larger gains to richer and more urban consumers. This distributional asymmetry highlights the importance of accounting for non-homotheticity and spatial structure when evaluating development trajectories.”
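The distributional asymmetry follows from non-homothetic demand. Poorer households devote a larger share of their budgets to tradable goods, and to a first order, a price cut benefits a household in proportion to its budget share on the cheapened good. The Python sketch below illustrates this with Stone-Geary preferences and made-up parameters: a subsistence requirement of 40 units of goods and a 30% goods share above subsistence. It is a textbook illustration, not the model in Peters, Zhang and Zilibotti, and it ignores the spatial dimension the authors emphasize.

```python
def budget_shares(income: float, subsistence: float = 40.0,
                  alpha: float = 0.3, p_goods: float = 1.0) -> tuple[float, float]:
    """Stone-Geary shares: buy subsistence tradables first, then split the rest."""
    base = p_goods * subsistence          # spending needed to cover subsistence goods
    goods_spend = base + alpha * (income - base)
    goods_share = goods_spend / income
    return goods_share, 1.0 - goods_share  # (tradable goods, services)


def welfare_gain_pct(share: float, price_drop_pct: float) -> float:
    # First-order (envelope-theorem) approximation: gain as % of income.
    return share * price_drop_pct


results = {}
for label, income in (("poorer", 50.0), ("richer", 500.0)):
    goods, services = budget_shares(income)
    results[label] = (welfare_gain_pct(goods, 10.0),
                      welfare_gain_pct(services, 10.0))
    print(label, "10% cheaper goods:", round(results[label][0], 2),
          "| 10% cheaper services:", round(results[label][1], 2))
```

With these illustrative numbers, a 10% fall in the price of tradables raises the poorer household's real income by about 8.6%, versus about 3.6% for the richer one. The same fall in service prices is worth about 1.4% versus 6.4%.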

“We conclude that the path to development need not replicate the factory-first model of earlier industrializers…Yet this is not automatic, and the sustainability of a service-led path depends on complementary transformations such as formalization, infrastructure investments that enable agglomeration externalities, and local demand growth.”

Peters, M., Zhang, Y., & Zilibotti, F. (2026). Skipping the Factory: Service-Led Growth and Structural Transformation in the Developing World. NBER Working Paper 34692.

http://www.nber.org/papers/w34692

Neuronpedia

open source interpretability platform

“Our Purpose: Accelerate Interpretability Research

Interpretability is an unsolved problem - anyone that tells you otherwise is either trying to sell you something and/or misinformed - especially with new models being released so frequently.

Is interpretability needed? While it's possible that advanced AI is somehow "naturally aligned" to be pro-human and pro-Earth, there's no benefit to assuming that this is true. It seems unlikely that all advanced AI would be fully aligned in all the possible scenarios and edge cases.

Neuronpedia's role is to accelerate understanding of AI models, so that when they get powerful enough, we have a better chance of aligning them. If we can increase the probability of a good outcome by even 0.01%, that's an expected value of saving many, many current and future lives - certainly a worthwhile and meaningful endeavor.”

https://github.com/hijohnnylin/neuronpedia#readme

Flapping Airplanes

Flapping Airplanes is a frontier data efficiency lab that is currently in stealth

“We imagine a world where models can think at the level of humans without ingesting half the internet.”

“Flapping Airplanes is a foundational AI research lab devoted to solving the data efficiency problem. We are not focused on one specific technical idea or vertical; we're taking a long-term view and pursuing radical new approaches. We have the resources and the will to do so.”

https://flappingairplanes.com/

Reader Feedback

“Humans aren’t models, but models of humans are models.”

Footnotes

Risk is uncertainty over outcomes. If you want the best outcomes for the risk you’re thinking about taking, you want to imagine better outcomes and increase the likelihood that they occur. It isn’t just about prediction; it’s about deciding on superior treatments in an effort to cause superior effects.

BigCo leaders manage risk in two ways: imitation and innovation. They imitate rivals, expecting similar or no negative outcomes (after all, the other guys did it, it’s table stakes, not my fault if it goes south, I was just fast following, etc.). Or they learn about novel needs and recombine offers, technology, quality attributions and positioning to create something new. This is harder and riskier than imitation, and it is attempted less often.

The tricky aspect of innovation is that you can change the outcome just by measuring it. For instance, asking people about voting very likely increases turnout, an insight at the core of many civic engagement engines.

Uncertainty may be reduced, but it never vanishes to zero. The Law of Large Numbers has diminishing returns. And sometimes that can be paralyzing. Nothing ventured. Nothing gained.
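The diminishing returns are easy to see for a simple estimate such as a proportion. Its standard error falls with the square root of the sample size, so each halving of the error costs four times as many observations. A minimal Python illustration, assuming independent draws and a true rate of 50%:

```python
import math


def standard_error(p: float, n: int) -> float:
    """Standard error of a sample proportion with true rate p and n independent draws."""
    return math.sqrt(p * (1 - p) / n)


for n in (100, 400, 1_600, 6_400):
    print(n, round(standard_error(0.5, n), 4))
```

Going from 100 to 6,400 observations, a 64-fold increase, only shrinks the error eightfold, and for any finite sample it never reaches zero.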

Isn’t there something interesting about how innovators estimate possibility and uncertainty?
