Two views of the causal chain

AI as normal tech, predictive optimization, continuous thought machines

AI as Normal Technology

Thinking fast and slow

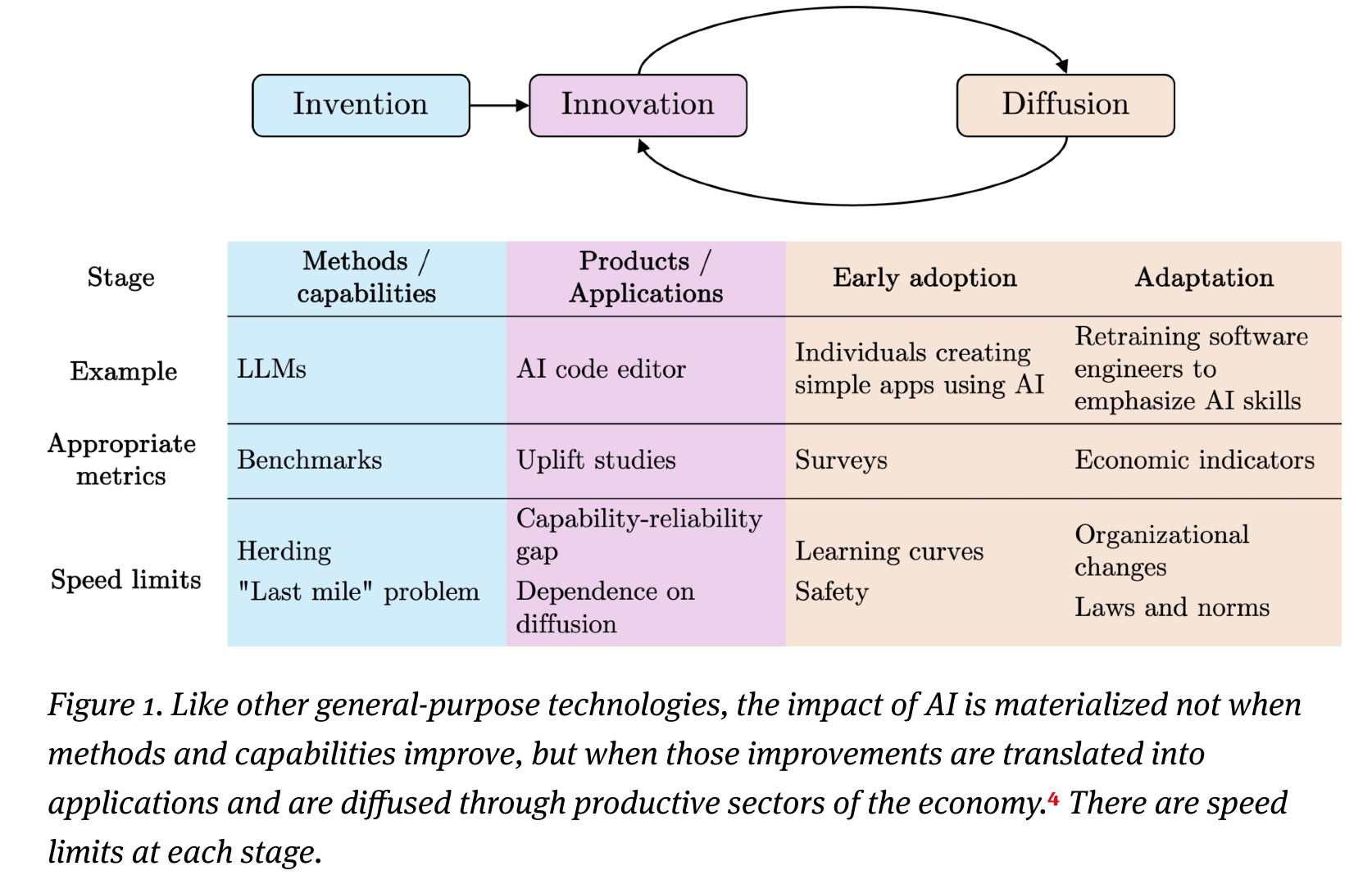

“We use invention to refer to the development of new AI methods—such as large language models—that improve AI’s capabilities to carry out various tasks. Innovation refers to the development of products and applications using AI that consumers and businesses can use. Adoption refers to the decision by an individual (or team or firm) to use a technology, whereas diffusion refers to the broader social process through which the level of adoption increases. For sufficiently disruptive technologies, diffusion might require changes to the structure of firms and organizations, as well as to social norms and laws.”

“The claim that the speed of technology adoption is not necessarily increasing may seem surprising (or even obviously wrong) given that digital technology can reach billions of devices at once. But it is important to remember that adoption is about software use, not availability. Even if a new AI-based product is instantly released online for anyone to use for free, it takes time for people to change their workflows and habits to take advantage of the benefits of the new product and to learn to avoid the risks.”

“The difficulty of ensuring construct validity afflicts not only benchmarking, but also forecasting, which is another major way in which people try to assess (future) AI impacts. It is extremely important to avoid ambiguous outcomes to ensure effective forecasting. The way that the forecasting community accomplishes this is by defining milestones in terms of relatively narrow skills, such as exam performance. For instance, the Metaculus question on “human-machine intelligence parity” is defined in terms of performance on exam questions in math, physics, and computer science. Based on this definition, it is not surprising that forecasters predict a 95% chance of achieving “human-machine intelligence parity” by 2040 [32].”

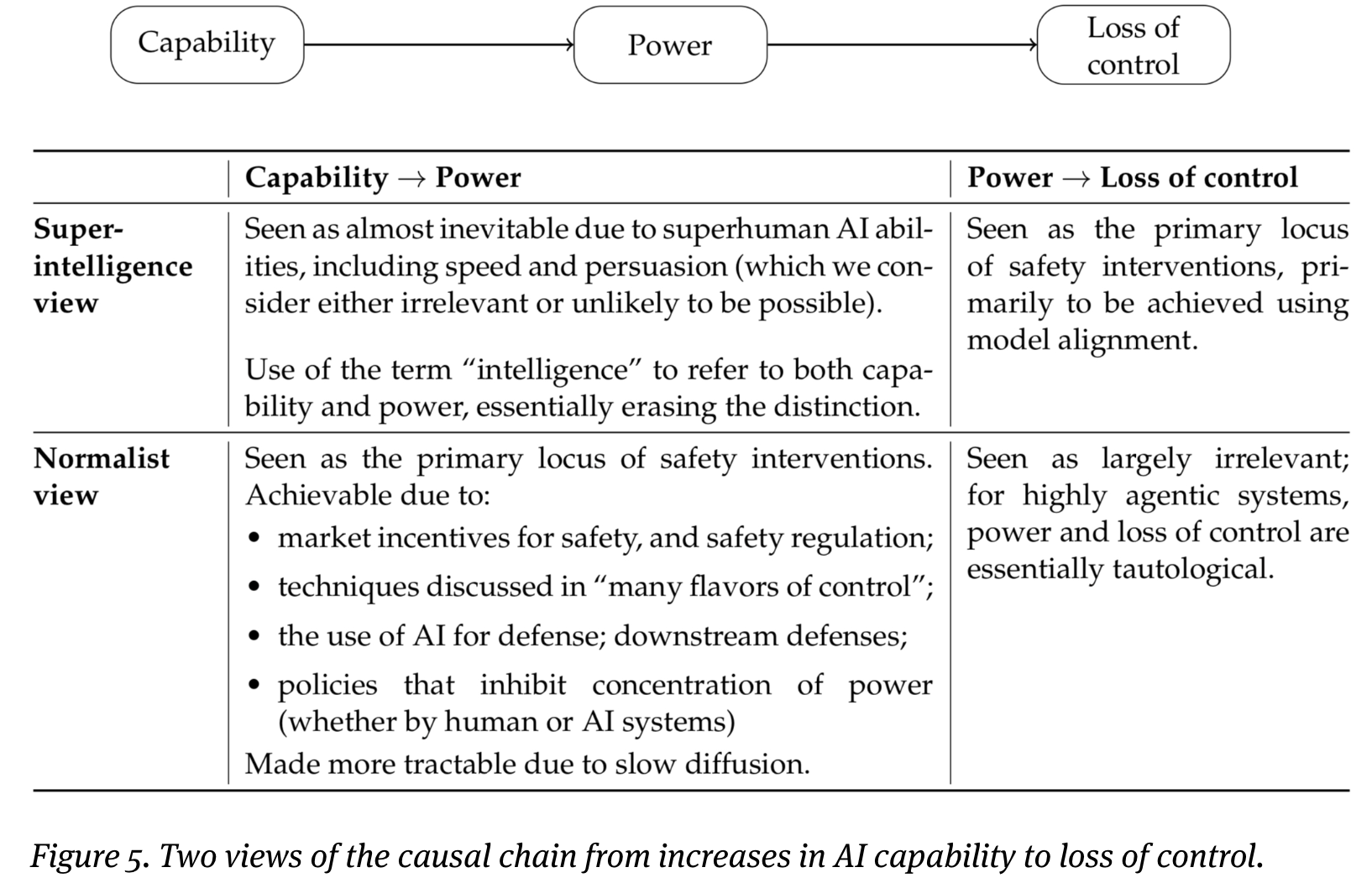

“The superintelligence view is pessimistic about the first arrow in Figure 5—preventing arbitrarily capable AI systems from acquiring power that is significant enough to pose catastrophic risks—and instead focuses on alignment techniques that try to prevent arbitrarily powerful AI systems from acting against human interests. Our view is precisely the opposite, as we elaborate in the rest of this paper.”

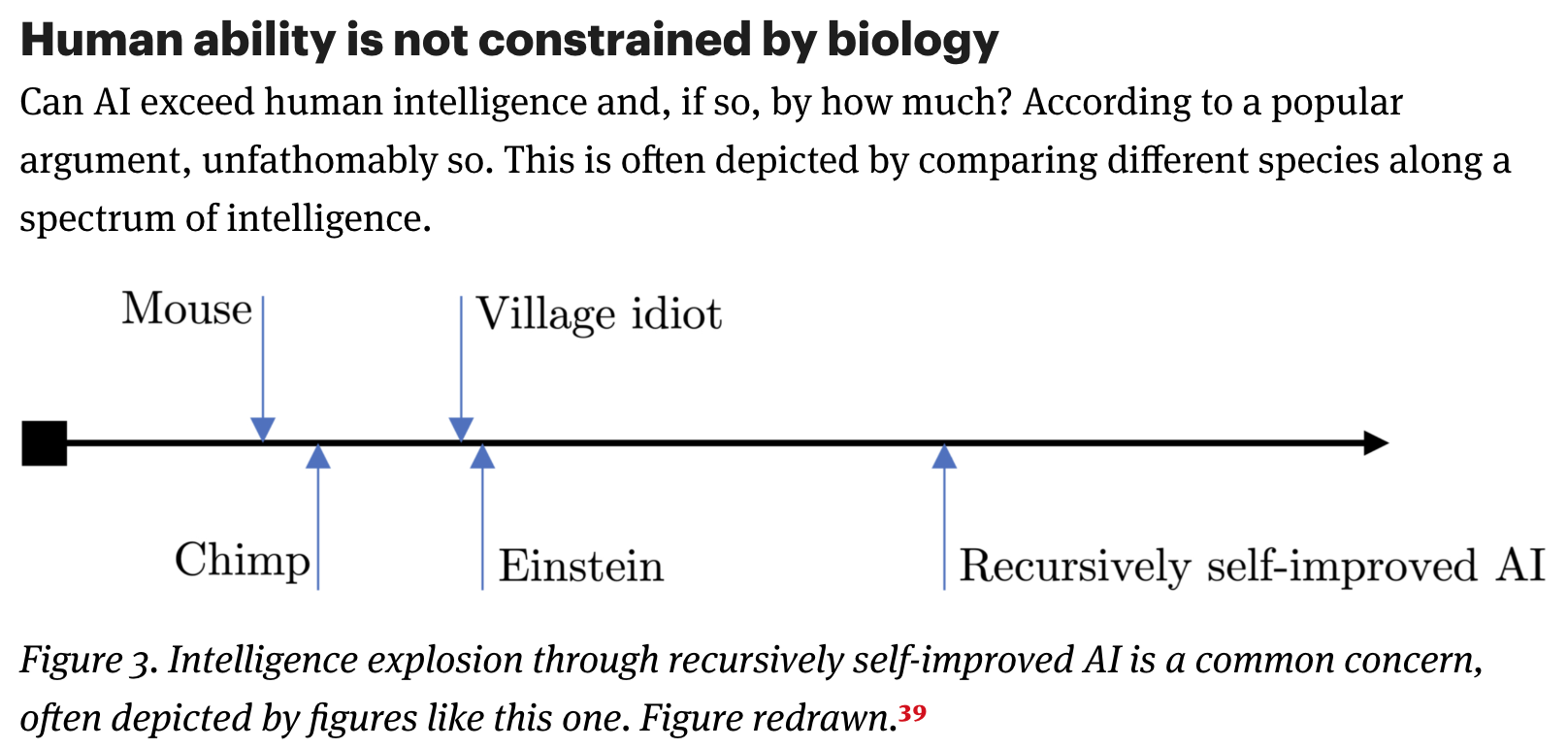

“De-emphasizing intelligence is not just a rhetorical move: We do not think there is a useful sense of the term ‘intelligence’ in which AI is more intelligent than people acting with the help of AI. Human intelligence is special due to our ability to use tools and to subsume other intelligences into our own, and cannot be coherently placed on a spectrum of intelligence.

Human abilities definitely have some important limitations, notably speed. This is why machines dramatically outperform humans in domains like chess and, in a human+AI team, the human can hardly do better than simply deferring to AI. But speed limitations are irrelevant in most areas because high-speed sequential calculations or fast reaction times are not required.

In the few real-world tasks for which superhuman speed is required, such as nuclear reactor control, we are good at building tightly scoped automated tools to do the high-speed parts, while humans retain control of the overall system.

We offer a prediction based on this view of human abilities. We think there are relatively few real-world cognitive tasks in which human limitations are so telling that AI is able to blow past human performance (as AI does in chess). In many other areas, including some that are associated with prominent hopes and fears about AI performance, we think there is a high “irreducible error”—unavoidable error due to the inherent stochasticity of the phenomenon—and human performance is essentially near that limit.”
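The “irreducible error” idea can be made concrete with a small simulation. This is our own illustration, not the authors’ analysis: we assume each event has a true probability p drawn uniformly between 0 and 1, and we give a hypothetical predictor perfect knowledge of that probability. Because the event itself is still random, even this ideal predictor is wrong about a quarter of the time under that assumption, since its expected error is the average of min(p, 1 - p).

```python
import random

def simulate(n=100_000, seed=0):
    """Error rate of a predictor that knows each event's true probability.
    Assumption (ours): true probabilities are uniform on [0, 1]."""
    rng = random.Random(seed)
    mistakes = 0
    expected = 0.0
    for _ in range(n):
        p = rng.random()              # true probability, known to the ideal predictor
        outcome = rng.random() < p    # the event itself is still a biased coin flip
        prediction = p > 0.5          # best possible call given p
        mistakes += prediction != outcome
        expected += min(p, 1 - p)     # expected error of that best call
    return mistakes / n, expected / n

observed, floor = simulate()
print(f"observed error of the ideal predictor: {observed:.3f}")
print(f"irreducible error floor: {floor:.3f}")
```

No amount of extra modeling effort pushes the error below that floor; only better information about each individual event could, and the authors’ argument is that for things like elections such information is inherently limited.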

“Concretely, we propose two such areas: forecasting and persuasion. We predict that AI will not be able to meaningfully outperform trained humans (particularly teams of humans and especially if augmented with simple automated tools) at forecasting geopolitical events (say, elections). We make the same prediction for the task of persuading people to act against their own self-interest.”

“Differences about the future of AI are often partly rooted in differing interpretations of evidence about the present. For example, we strongly disagree with the characterization of generative AI adoption as rapid (which reinforces our assumption about the similarity of AI diffusion to past technologies).

In terms of epistemic tools, we deemphasize probability forecasting and emphasize the need for disaggregating what we mean by AI (levels of generality, progress in methods versus application development versus diffusion, etc.) when extrapolating from the past to the future.

We believe that some version of our worldview is widely held. Unfortunately, it has not been articulated explicitly, perhaps because it might seem like the default to someone who holds this view, and articulating it might seem superfluous. Over time, however, the superintelligence view has become dominant in AI discourse, to the extent that someone steeped in it might not recognize that there exists another coherent way to conceptualize the present and future of AI. Thus, it might be hard to recognize the underlying reasons why different people might sincerely have dramatically differing opinions about AI progress, risks, and policy. We hope that this paper can play some small part in enabling greater mutual understanding, even if it does not change any beliefs.”

https://knightcolumbia.org/content/ai-as-normal-technology

Against predictive optimization: On the legitimacy of decision-making algorithms that optimize predictive accuracy

Whose optimization is it anyway?

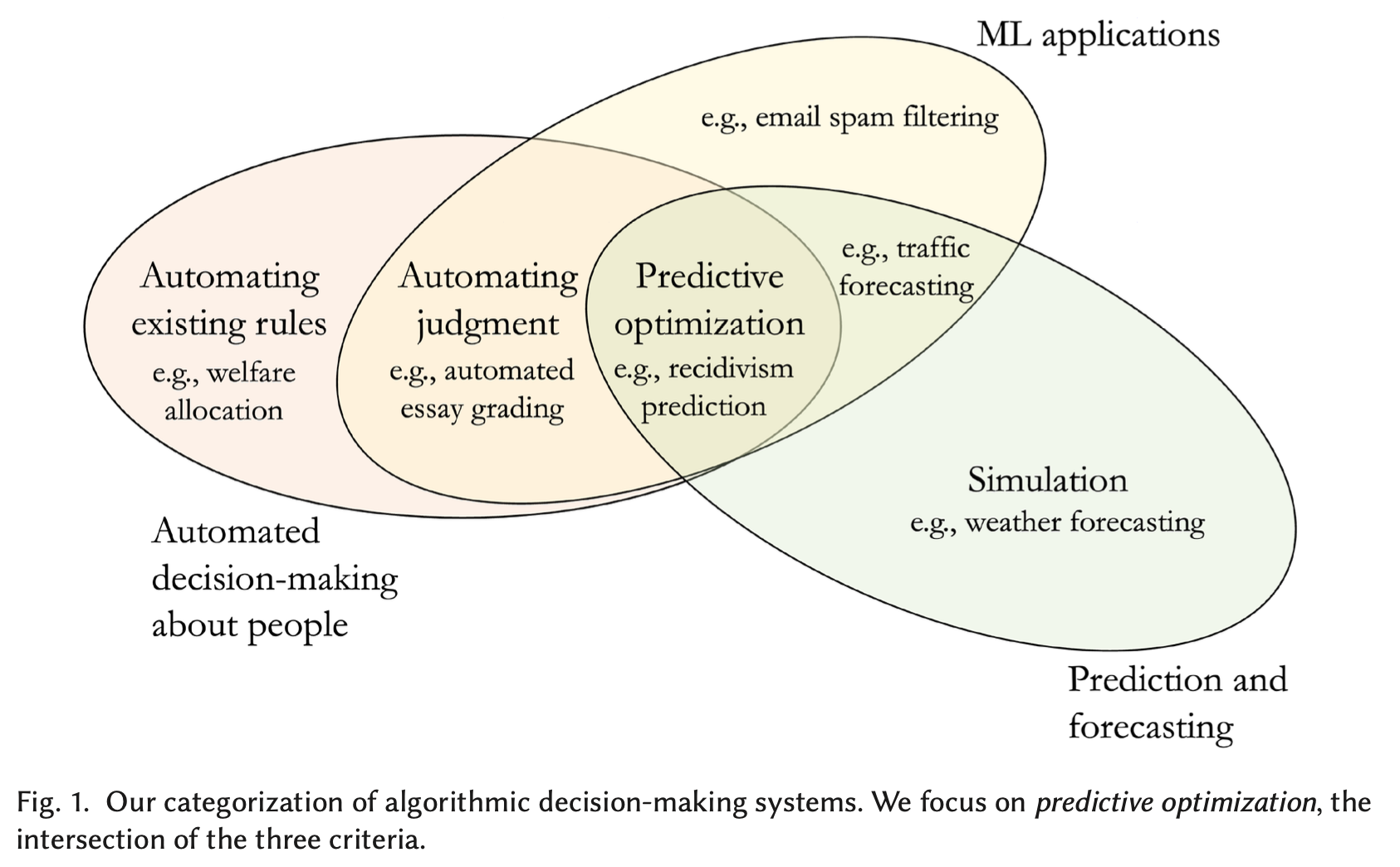

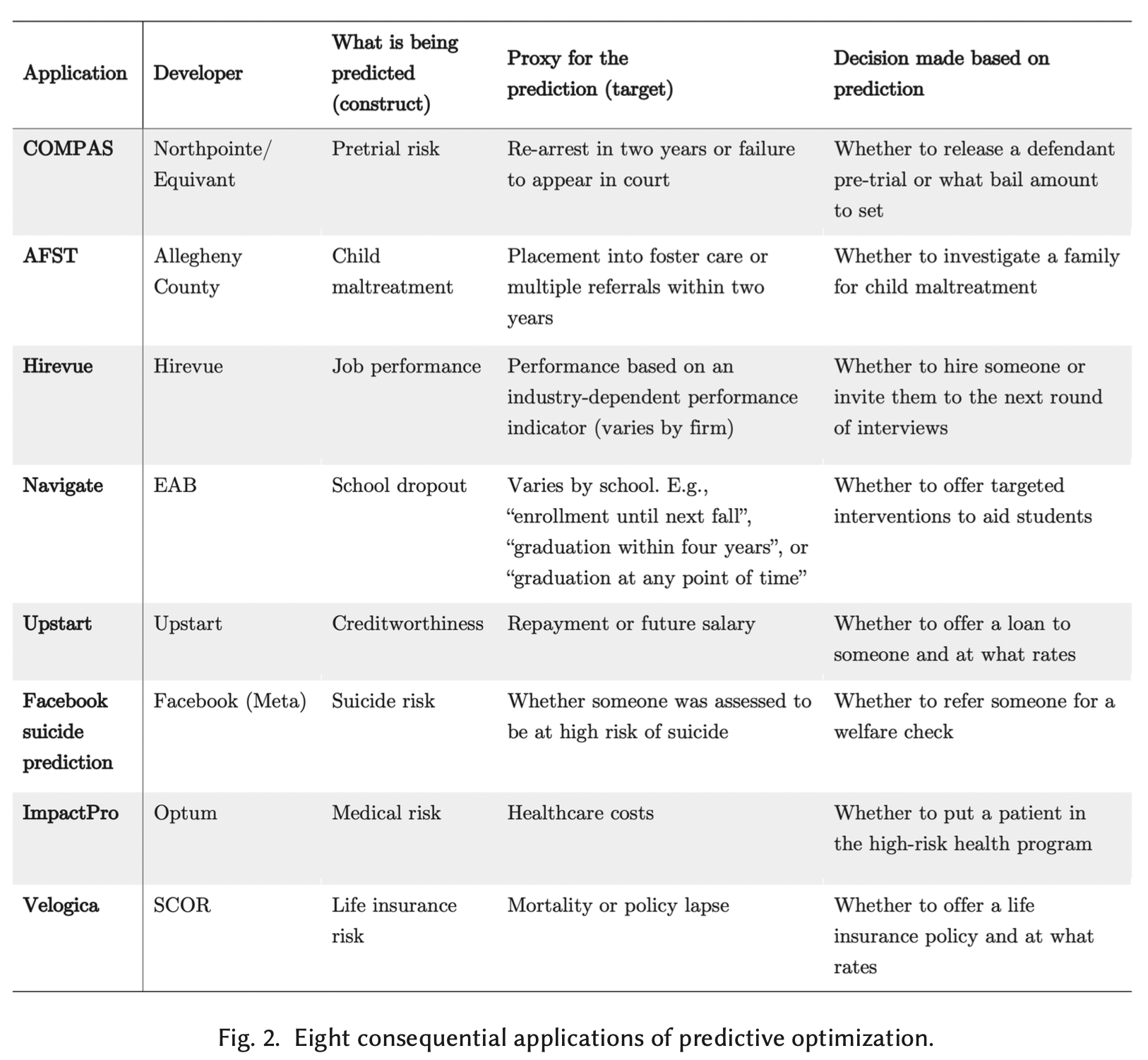

“We focus on a subset of the second type discussed above, specifically where (1) machine learning is used to (2) make predictions about some future outcome (3) pertaining to individuals, and those predictions are used to make decisions about them. We coin the term “predictive optimization” to refer to this form of algorithmic decision-making, because the decision-making rules at issue have been explicitly optimized with the narrow goal of maximizing the accuracy with which they predict some future outcome. This contrasts with manual approaches to developing decision-making rules that may involve a more deliberative process incorporating a range of considerations and goals.”
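To see what “explicitly optimized with the narrow goal of maximizing the accuracy with which they predict some future outcome” looks like in practice, here is a minimal sketch. Everything in it is hypothetical: the two features, the synthetic “historical records,” the choice of logistic regression trained by stochastic gradient descent, and the 0.5 threshold are our assumptions, not anything the paper prescribes. The point is structural: the only objective the training loop sees is fit to past outcomes, and the decision about a person is just the thresholded prediction.

```python
import math
import random

def predict(w, x):
    """Predicted probability of the future outcome for one individual."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + w[-1]  # w[-1] is the bias
    return 1 / (1 + math.exp(-z))

def train_logistic(X, y, lr=0.1, epochs=200):
    """Fit a logistic model by SGD on log-loss.
    Predictive accuracy on past outcomes is the only objective."""
    w = [0.0] * (len(X[0]) + 1)  # feature weights, then a bias term
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi  # gradient of log-loss w.r.t. the logit
            for j, xj in enumerate(xi):
                w[j] -= lr * err * xj
            w[-1] -= lr * err
    return w

def decide(w, x, threshold=0.5):
    """The decision about the individual is simply the thresholded prediction."""
    return "flag" if predict(w, x) >= threshold else "no action"

# Hypothetical historical records: two made-up features and a noisy binary outcome.
rng = random.Random(1)
X = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(500)]
y = [1 if x[0] + 0.5 * x[1] + rng.gauss(0, 1) > 0 else 0 for x in X]

w = train_logistic(X, y)
accuracy = sum((predict(w, x) >= 0.5) == bool(yi) for x, yi in zip(X, y)) / len(y)
print(f"training accuracy: {accuracy:.2f}")
print(decide(w, [2.0, 1.0]), decide(w, [-2.0, -1.0]))
```

Notice what is absent: nowhere does the loop weigh fairness, the cost of a wrong “flag,” or whether the outcome is even predictable. Any of those considerations has to be bolted on from outside, which is the rigidity the authors go on to criticize.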

“Predictive systems can only meet their goals, such as minimizing crime or hiring good employees, to the extent that their predictions are accurate. But there are many reasons why predictions are imperfect: both practical ones, such as limits to the ability to observe decision subjects’ lives, and more fundamental ones, such as the fact that crime is sometimes a spur-of-the-moment act that cannot be accurately predicted in advance [178].”

“On the other hand, the rigidity imposed by predictive optimization makes effective fairness interventions harder. Human decision-making is messy, but one upside is that it combines decision-making with deliberation about bias and values. This deliberation is important, since fairness requires continual consensus building. The framework of algorithmic fairness presumes that consensus has been reached and scales up a single conceptualization of fairness, thus choking off one avenue for deliberation.”

“Rather, our objections to predictive optimization should be seriously deliberated before considering any deployment of predictive optimization. As more promising paths forward, we urge the consideration of the alternatives we have only begun to consider here and leave for future work, such as hybrid approaches that bridge categorical prioritization and predictive optimization.”

Wang, A., Kapoor, S., Barocas, S., & Narayanan, A. (2024). Against predictive optimization: On the legitimacy of decision-making algorithms that optimize predictive accuracy. ACM Journal on Responsible Computing, 1(1), 1-45.

https://dl.acm.org/doi/10.1145/3593013.3594030

Continuous Thought Machines

An interesting idea…as opposed to constantly waking them up and putting them to sleep all the time…And is this old wine in new bottles? Hmmmmmmm?

“Sakana AI is proud to release the Continuous Thought Machine (CTM), an AI model that uniquely uses the synchronization of neuron activity as its core reasoning mechanism, inspired by biological neural networks. Unlike traditional artificial neural networks, the CTM uses timing information at the neuron level that allows for more complex neural behavior and decision-making processes. This innovation enables the model to “think” through problems step-by-step, making its reasoning process interpretable and human-like.”
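The announcement describes synchronization only in words. As we understand the accompanying paper (arXiv 2505.05522), the core quantity comes from each neuron’s activation history across the model’s internal ticks: pairs of neurons whose histories move together get a large value. The minimal sketch below computes plain pairwise dot products of those histories for three invented toy neurons; the paper’s own formulation adds refinements (such as decaying the weight of older ticks and using only a subset of neuron pairs) that we leave out, and the function name is ours.

```python
import math

def synchronization_matrix(history):
    """history[t][i] is neuron i's activation at internal tick t.
    Returns S where S[i][j] is the dot product of neurons i and j
    over all ticks so far (a simplified, unweighted version)."""
    n = len(history[0])
    S = [[0.0] * n for _ in range(n)]
    for activations in history:
        for i in range(n):
            for j in range(n):
                S[i][j] += activations[i] * activations[j]
    return S

# Toy traces over 6 ticks: neurons 0 and 1 oscillate in phase, neuron 2 in antiphase.
history = [[math.sin(t), math.sin(t), -math.sin(t)] for t in range(6)]
S = synchronization_matrix(history)
for row in S:
    print([round(v, 2) for v in row])
```

Neurons 0 and 1, which fire in phase, get a positive entry; neuron 2, in antiphase, gets a negative one. The representation depends on timing across ticks rather than on a single snapshot of activations, which is the sense in which the CTM “uses timing information.”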

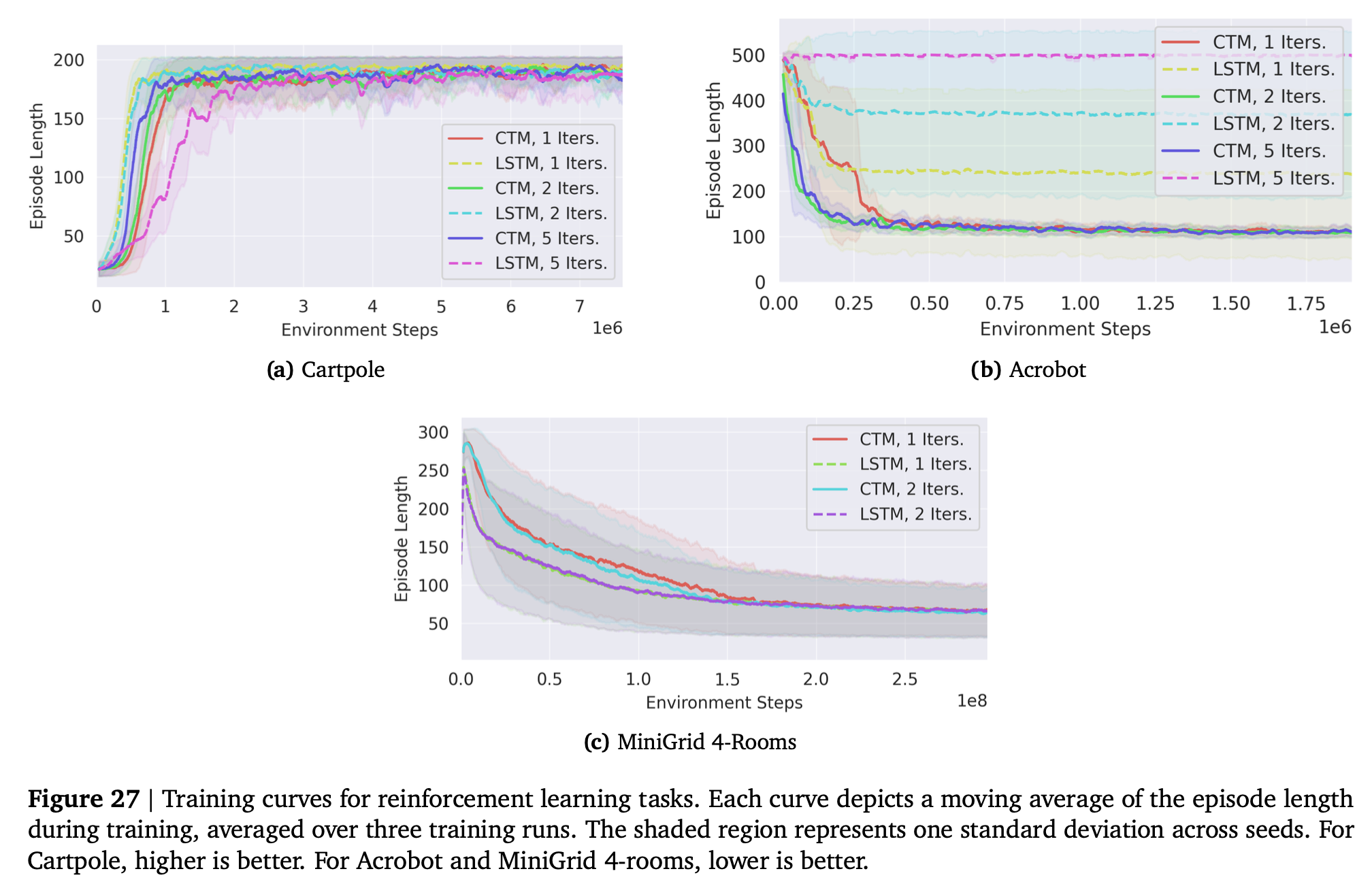

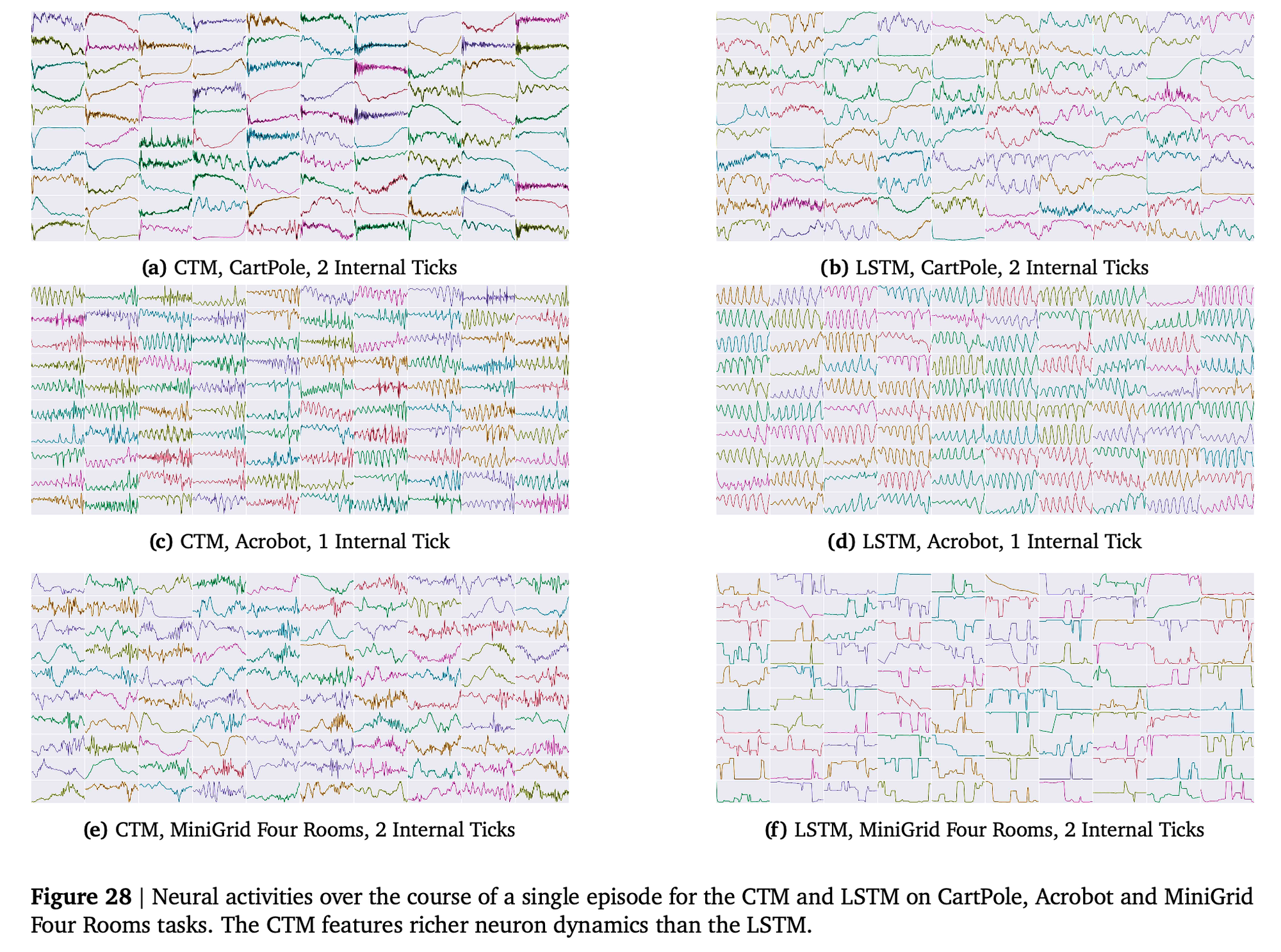

“The CTM can continuously interact with the world. Training curves for the reinforcement learning tasks are shown in Figure 27. In all tasks, we find that the CTM achieves a similar performance to the LSTM baselines. Figure 28 compares the neuron traces of the CTM and the LSTM baselines for the CartPole, Acrobot and MiniGrid Four Rooms tasks. In the classic control tasks, the activations for both the CTM and the LSTM feature oscillatory behavior, corresponding to the back-and-forth movements of the cart and arm. For the navigation task, a rich and complex activation pattern emerges in the CTM. The LSTM, on the other hand, features a less diverse set of activations. The LSTMs trained in this section have a more dynamic neural activity than what can be seen when trained on CIFAR-10 (Figure 12). This is likely due to the sequential nature of RL tasks, where the input to the model changes over time owing to its interaction with the environment, inducing a feedback loop that results in the model’s latent representation also evolving over time.”

https://arxiv.org/abs/2505.05522

Reader Feedback

“I’m fine with AI alignment with human preferences. So long as they align with mine.”

Footnotes

Sometimes we learn a lot when we re-examine a first principle.

And sometimes we don’t.

Do I ever make some pretty weird assumptions about first principles!

I get to talk to a lot of people who are using new technology to solve old problems, and a few people who are using new technology to create new problems.

Why solve any problem? What’s the point? There’ll only be a new problem to take its place, right?

Well, we tend to be attracted to value. We like valuable things. So we imagine value and will it into the world.

I’ve been disassembling value propositions. Why? Because they’re the riskiest bundles of words. That’s where the risk in any proposition truly lies. If you build something that has no value, then the market will price it as such. And as a Canadian, I’m attuned to see risk in everything, everywhere, all the time, constantly. Who am I to resist my nature?

So, here I go, building what I think is a simple decomposition of speculative risk. Which seems, inherently, like a risky proposition. Take a look at how simple it is.

Is it worth de-risking the riskiest risk?

Risk sending me your notes.

Never miss a single issue

Be the first to know. Subscribe now to get the gatodo newsletter delivered straight to your inbox