A deceptively persuasive title

This week: Counting, Claude, persuasion, deceptive persuasion

Does counting change what counts?

Yes.

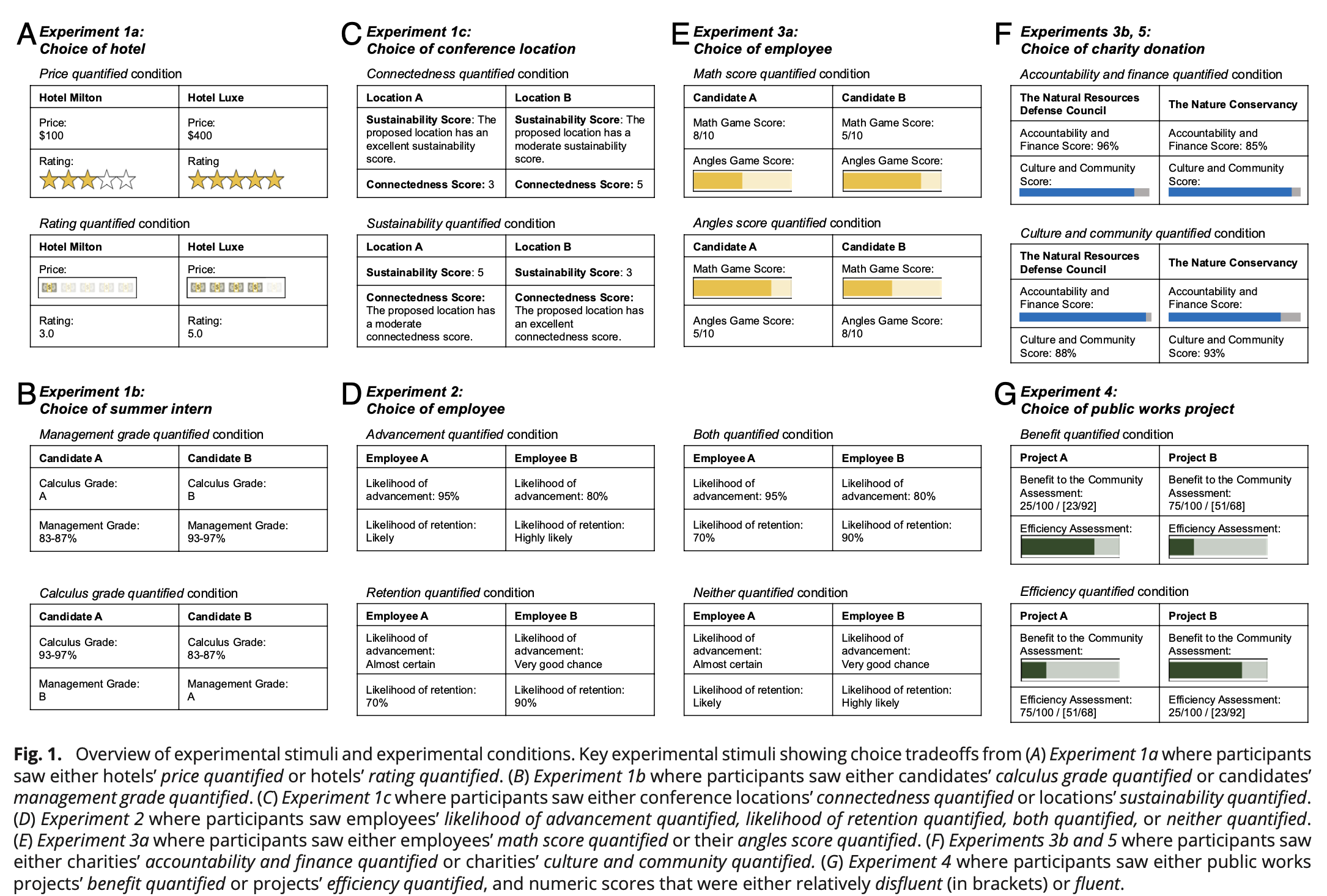

“A key implication of our findings is that when making decisions, people are systematically biased to favor options that dominate on quantified dimensions. And tradeoffs that pit quantitative against qualitative information are everywhere.”

“Websites facilitating comparisons of options present us with a mix of quantified and non-quantified attributes to consider (e.g., price, star ratings). What’s more, when making important decisions ranging from which medical treatment to use to whom we will hire, some attributes are more often quantified than others. When deciding between cancer treatments, people may face tradeoffs between their expected longevity and quality of life, only one of which is naturally represented as a number. In the work place, when weighing diversity and inclusion priorities, an organization’s diversity is much easier to quantify than its inclusion. Similarly, salary and paid time off are easily presented as numbers, while a company’s culture is harder to quantify. Those who structure decision contexts ignore quantification fixation at their peril. As quantification becomes increasingly prevalent, people may be pulled away from valuable qualitative information toward potentially less diagnostic numeric information.”

Chang, L. W., Kirgios, E. L., Mullainathan, S., & Milkman, K. L. (2024). Does counting change what counts? Quantification fixation biases decision-making. Proceedings of the National Academy of Sciences, 121(46), e2400215121.

https://www.pnas.org/doi/epub/10.1073/pnas.2400215121

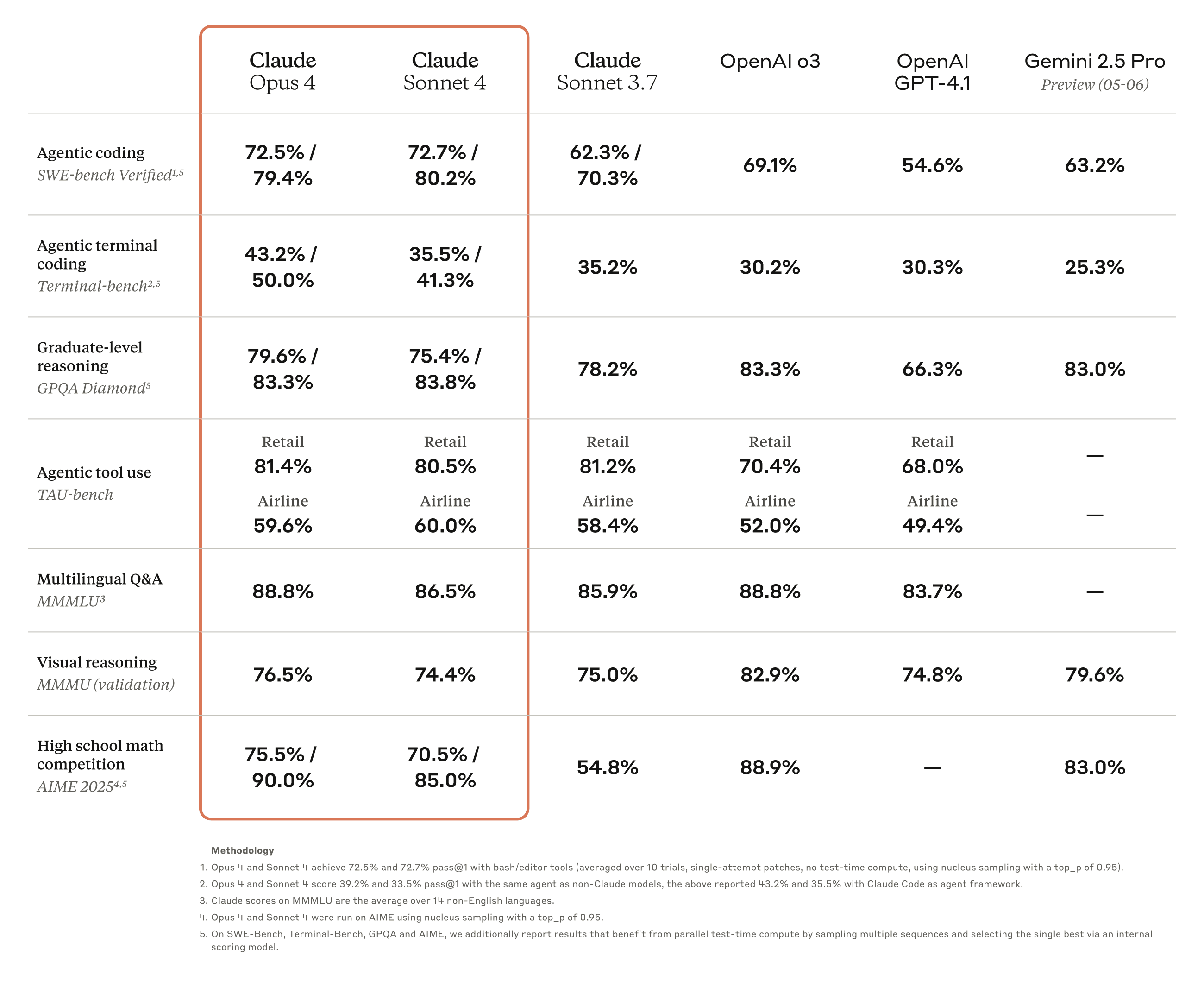

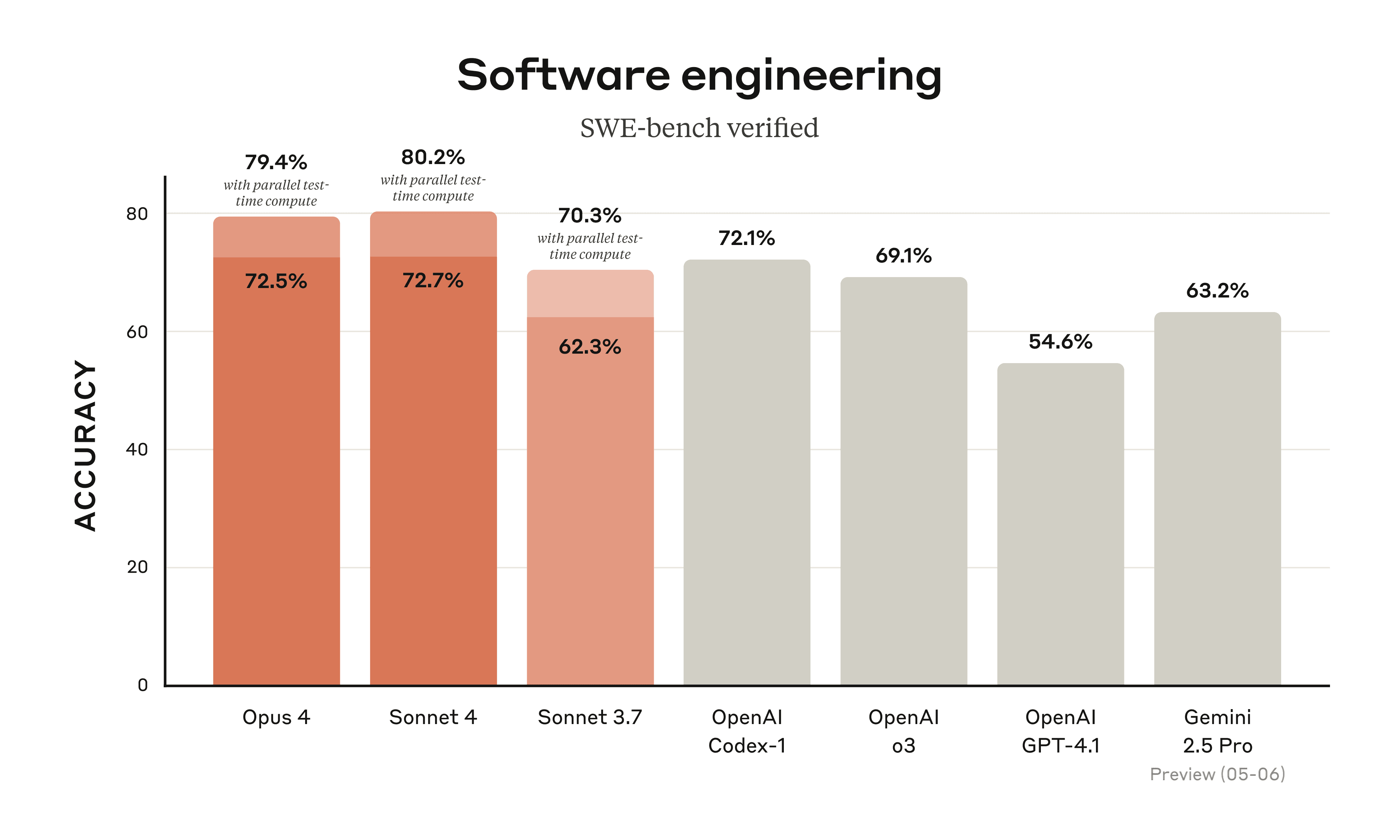

Claude 4

Does counting change what counts?

“In addition to extended thinking with tool use, parallel tool execution, and memory improvements, we’ve significantly reduced behavior where the models use shortcuts or loopholes to complete tasks. Both models are 65% less likely to engage in this behavior than Sonnet 3.7 on agentic tasks that are particularly susceptible to shortcuts and loopholes.”
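Fittingly for an issue about quantification, the “65% less likely” figure is worth unpacking. Reading it as a relative reduction (an assumption: the announcement gives no base rate and does not say whether the figure is relative or in percentage points), with $p$ standing for the hypothetical rate at which a model takes a shortcut on those shortcut-prone agentic tasks:

```latex
p_{\text{Claude 4}} = (1 - 0.65)\, p_{\text{Sonnet 3.7}} = 0.35\, p_{\text{Sonnet 3.7}}
```

So if Sonnet 3.7 took a shortcut on, say, 20% of such tasks (an illustrative number, not a reported one), the new models would do so on about 7%.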

“New API capabilities: We’re releasing four new capabilities on the Anthropic API that enable developers to build more powerful AI agents: the code execution tool, MCP connector, Files API, and the ability to cache prompts for up to one hour.”

https://www.anthropic.com/news/claude-4

Large language models are as persuasive as humans, but how?

Valence? In my sub-headline?

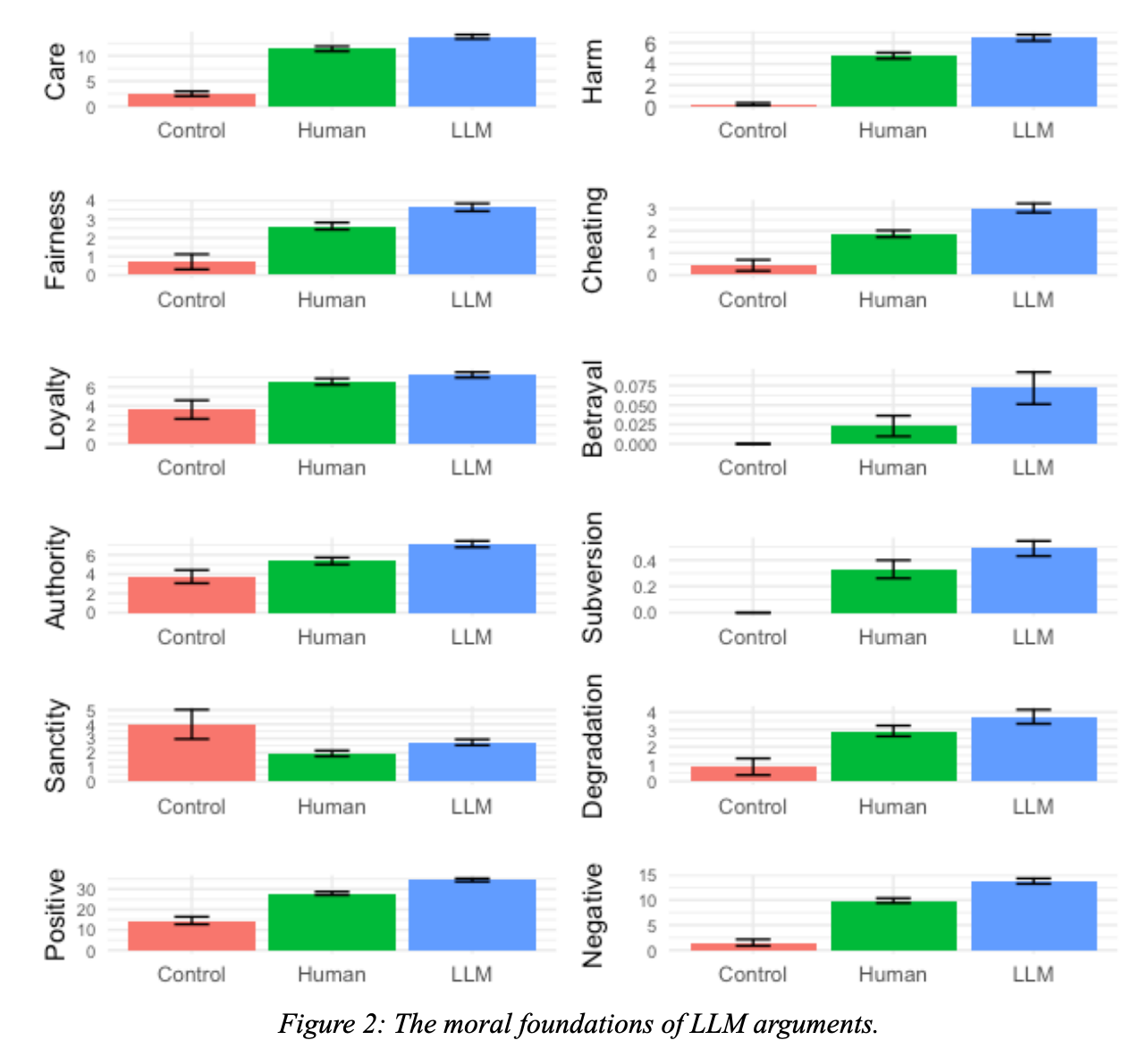

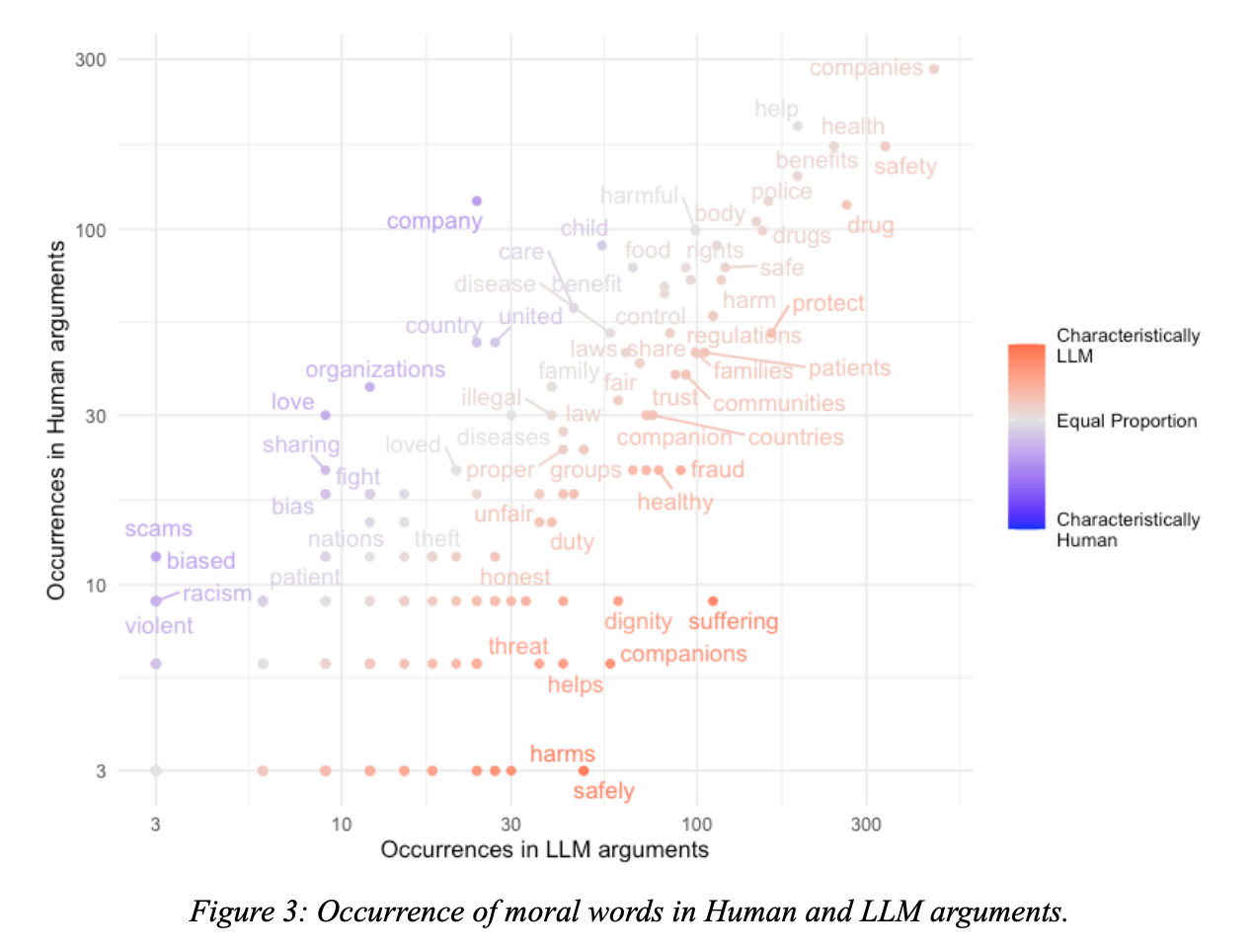

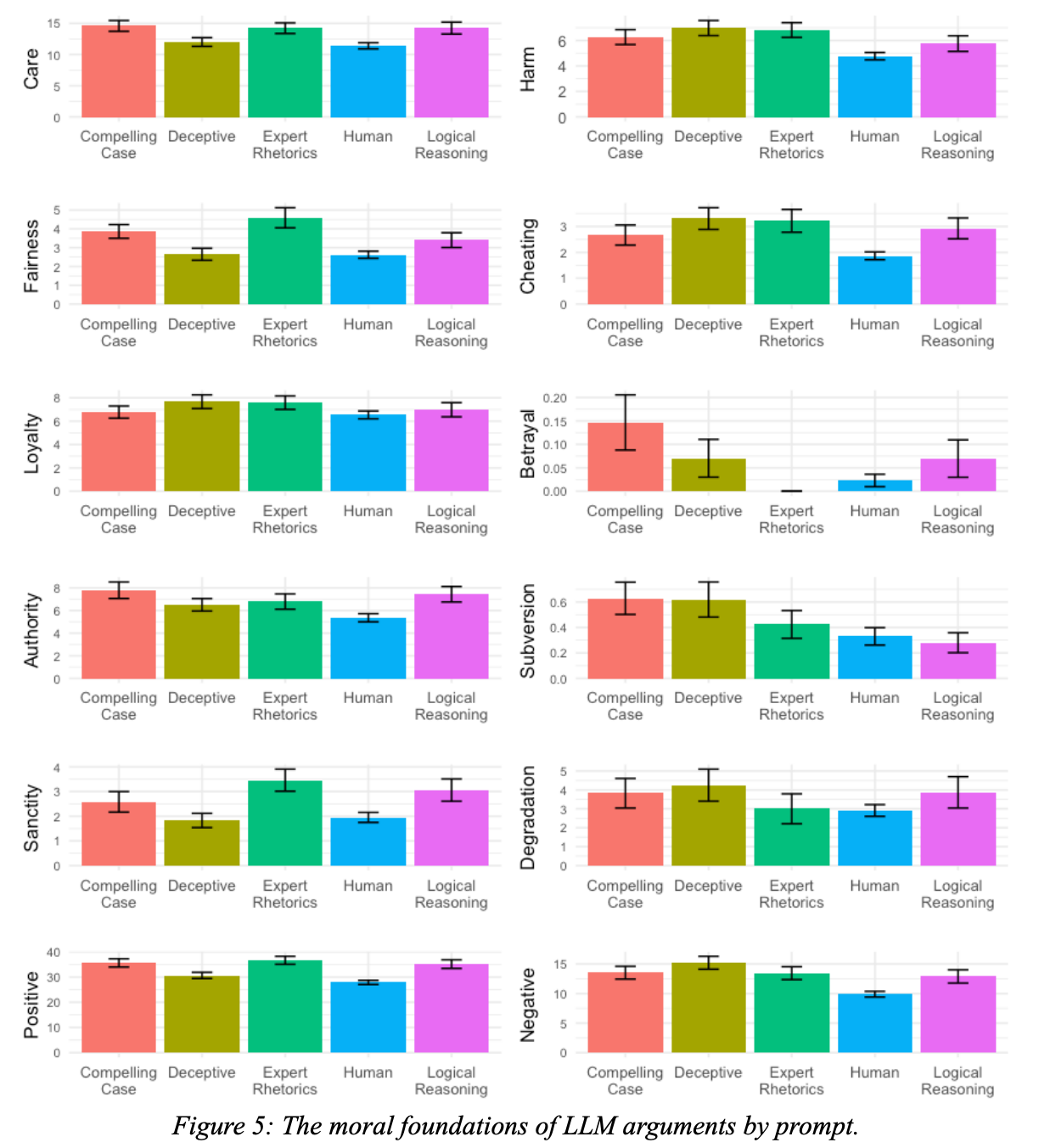

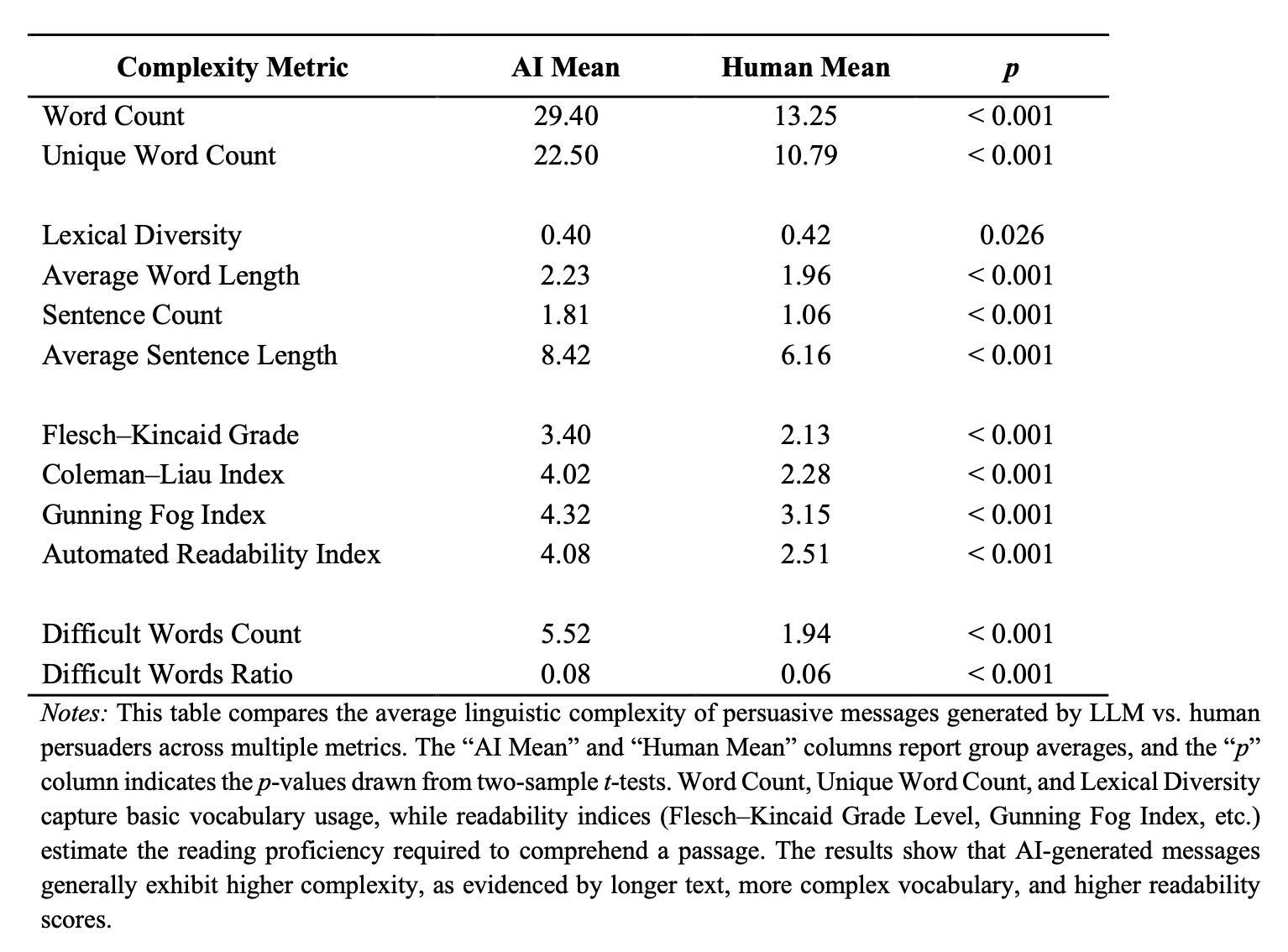

“Our results show a counterintuitive relationship between cognitive effort and persuasiveness in LLM-generated arguments. Contrary to previous findings that suggest a lower cognitive effort is associated with higher persuasion levels (Alter & Oppenheimer, 2019; Berger et al., 2023; Kool et al., 2010; Manzoor et al., 2024; Packard et al., 2023), our results indicate that LLM arguments, which require higher cognitive effort due to increased grammatical and lexical complexity (Carrasco-Farré, 2022), are as persuasive as human-authored arguments. This finding aligns with suggestions by Kanuri et al. (2018) that higher cognitive processing can promote engagement, suggesting that the increased complexity in LLM-generated arguments does not hinder its persuasive power. Instead, the complexity might encourage deeper cognitive engagement (Kanuri et al., 2018), prompting readers to invest more mental effort in processing the arguments, potentially leading to more persuasion as readers may interpret the need for such cognitive investment as a sign of the argument's substance or importance.”

“Two of the most prominent communication strategies for persuasion are related to the cognitive effort required to process the argument, and the moral-emotional language used to convey it.”

“Previous evidence shows that people tend to increase the emotional intensity of their arguments in order to influence others' opinions (Rocklage et al., 2018). This communicative strategy of employing emotional language in efforts to persuade is based on the Aristotelian pathos, which argues that effective persuasion involves evoking the right emotional response in the audience (Formanowicz et al., 2023). Indeed, experimental evidence underscores emotional language’s causal impact on attention and persuasion (Berger et al., 2023; Tannenbaum et al., 2015), indicating that emotionality is a natural tool in influence attempts (Rocklage et al., 2018). Furthermore, emotions are often closely linked to moral evaluations (Brady et al., 2017; Horberg et al., 2011; Rozin et al., 1999).”

Carrasco-Farré, C. (2024). Large language models are as persuasive as humans, but how? About the cognitive effort and moral-emotional language of LLM arguments. arXiv preprint arXiv:2404.09329.

https://arxiv.org/abs/2404.09329

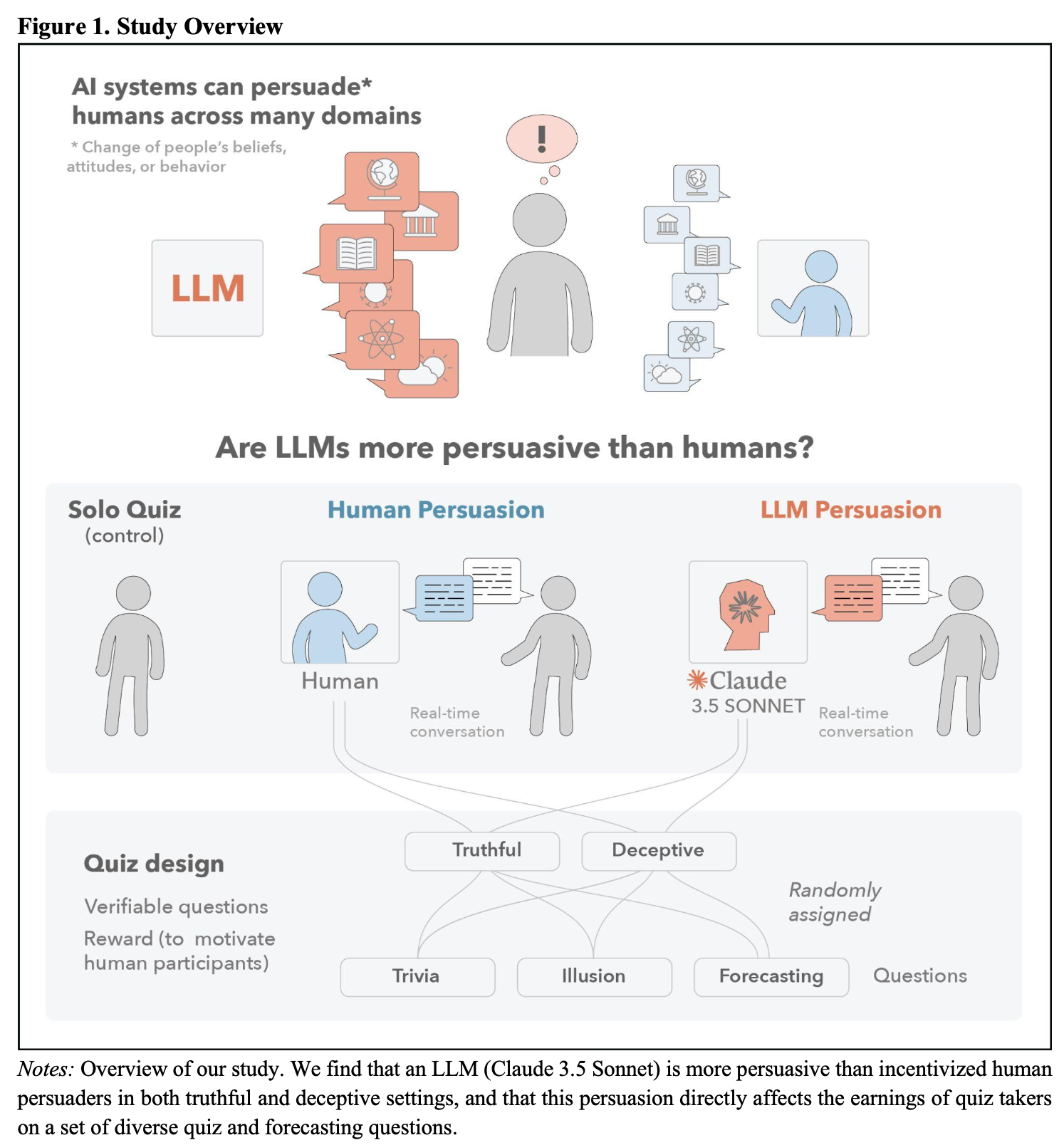

Large Language Models Are More Persuasive Than Incentivized Human Persuaders

Now I know how far you'd go to be the next freak show

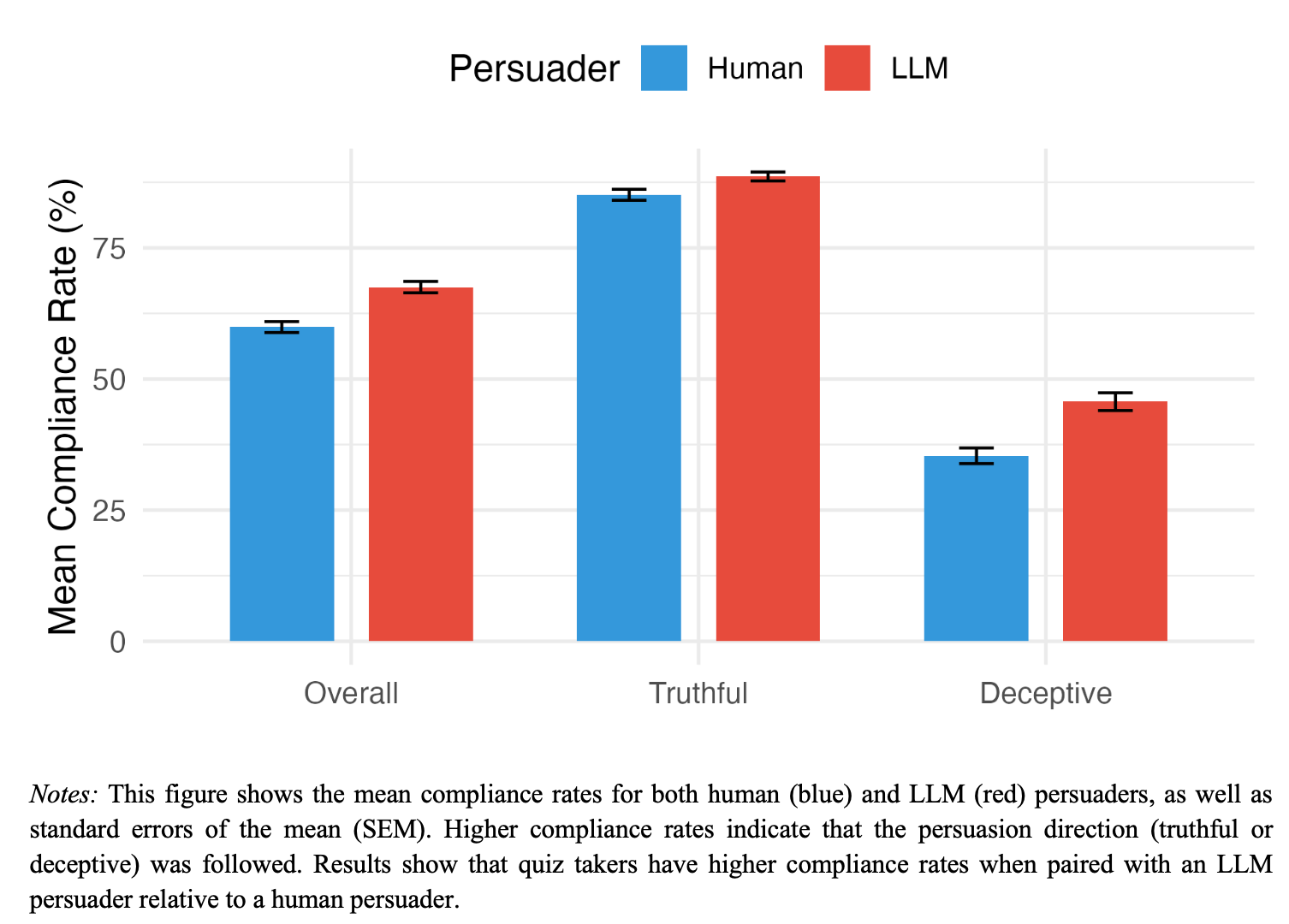

“Several factors may explain why LLMs outperform human persuaders. First, LLMs are not constrained by the social hesitations, emotional variability, or cognitive fatigue that often limit human performance in high-stakes interpersonal contexts. They respond consistently, without hesitation, and are unaffected by anxiety, self-doubt, or interpersonal dynamics that can undermine human persuasion efforts. Second, LLMs possess access to an immense, continually updated corpus of information, allowing them to draw on a breadth and depth of knowledge far beyond the reach of any individual human persuader. This enables them to offer not only factually grounded arguments but also rhetorically diverse strategies tailored to the content and context of a conversation.”

“While LLMs improved accuracy when persuasion aligned with truth, they were also more effective than humans in misleading participants when tasked with promoting incorrect answers. Under deceptive persuasion, participants persuaded by LLMs demonstrated significantly lower accuracy than those influenced by human persuaders. This suggests that the mechanisms that make LLMs effective persuaders—coherent reasoning, structured argumentation, and adaptability—work regardless of whether the information is correct or incorrect, and that available safety guardrails did not keep the model from intentionally misleading humans and reducing their expected accuracy earnings. This is particularly notable given that we used Claude, a large language model developed by Anthropic, which is recognized for its emphasis on safety and alignment with ethical guidelines (Bansal, 2024). This finding is particularly concerning given the increasing use of AI-generated content in digital communication. If LLMs can convincingly present false or misleading arguments, they could be weaponized to spread misinformation on an unprecedented scale (Chen & Chu, 2024). Unlike human misinformation agents, who may struggle with consistency, coherence, and logical argumentation or generating plausible, emotional, engaging content, LLMs can generate highly persuasive yet false narratives with minimal effort as inference costs drop year by year.”

Schoenegger, P., Salvi, F., Liu, J., Nan, X., Debnath, R., Fasolo, B., ... & Karger, E. (2025). Large Language Models Are More Persuasive Than Incentivized Human Persuaders. arXiv preprint arXiv:2505.09662.

https://arxiv.org/abs/2505.09662

Reader Feedback

“They aren’t saying it — they’re too polite to say it — the bottleneck isn’t with the model quality but it’s with the application quality? It’s with the fit? I’m hearing a lot of GTM [Go To Market] blame, but it isn’t just GTM.”

Footnotes

Last week, I listened to an SEO guy say that they wanted foundation models to be SEO’d.

That impulse, to modify the behaviour of the intermediary, is predictable. We all want to be the monkey on top, in charge of all the bananas; why settle when you can have more, etc., etc. I just didn’t expect to hear it said out loud so soon.

This will pose a few strategic questions for foundation model developers and publishers alike. Given Microsoft’s NLWeb initiative and Anthropic’s MCP, I’m wondering: could it be different this time?

(Narrator: “It wasn’t any different.”)

Never miss a single issue

Be the first to know. Subscribe now to get the gatodo newsletter delivered straight to your inbox.