A2A

This week: A2A, Who is AI replacing, prompted honesty, human-LLM grounding

Announcing the Agent2Agent Protocol (A2A)

Somebody said A2A, unironically and unprompted, at a Toronto event last week. That’s the trigger. Somebody in the centre of the universe™ said it. Here we go.

“A2A is an open protocol that complements Anthropic's Model Context Protocol (MCP), which provides helpful tools and context to agents. Drawing on Google's internal expertise in scaling agentic systems, we designed the A2A protocol to address the challenges we identified in deploying large-scale, multi-agent systems for our customers. A2A empowers developers to build agents capable of connecting with any other agent built using the protocol and offers users the flexibility to combine agents from various providers. Critically, businesses benefit from a standardized method for managing their agents across diverse platforms and cloud environments. We believe this universal interoperability is essential for fully realizing the potential of collaborative AI agents.”

https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/
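The announcement describes interoperability in the abstract. As a rough illustration only, the sketch below models the general idea of capability discovery: each agent publishes a description of what it can do, and another agent finds a partner by skill without caring which provider built it. The `CapabilityCard` and `Directory` names, their fields, and the example URLs are hypothetical stand-ins, not the A2A specification's schema, transport, or message format.

```python
from dataclasses import dataclass, field


@dataclass
class CapabilityCard:
    """Hypothetical capability advertisement; fields are illustrative, not the A2A schema."""
    name: str
    url: str
    skills: set[str] = field(default_factory=set)


class Directory:
    """Hypothetical registry where agents from different providers publish their cards."""

    def __init__(self) -> None:
        self._cards: list[CapabilityCard] = []

    def publish(self, card: CapabilityCard) -> None:
        self._cards.append(card)

    def find(self, skill: str) -> list[CapabilityCard]:
        # Any agent declaring the skill is a candidate, regardless of who built it.
        return [c for c in self._cards if skill in c.skills]


directory = Directory()
directory.publish(CapabilityCard("travel-booker", "https://agents.example.com/travel", {"book_flight"}))
directory.publish(CapabilityCard("expense-bot", "https://other.example.org/expenses", {"file_expense", "book_flight"}))

print([c.name for c in directory.find("book_flight")])  # ['travel-booker', 'expense-bot']
```

The point of a shared description format is that the lookup in `find` works the same no matter which vendor's agent published the card.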

Who is AI replacing? The impact of generative AI on online freelancing platforms

Low-skill

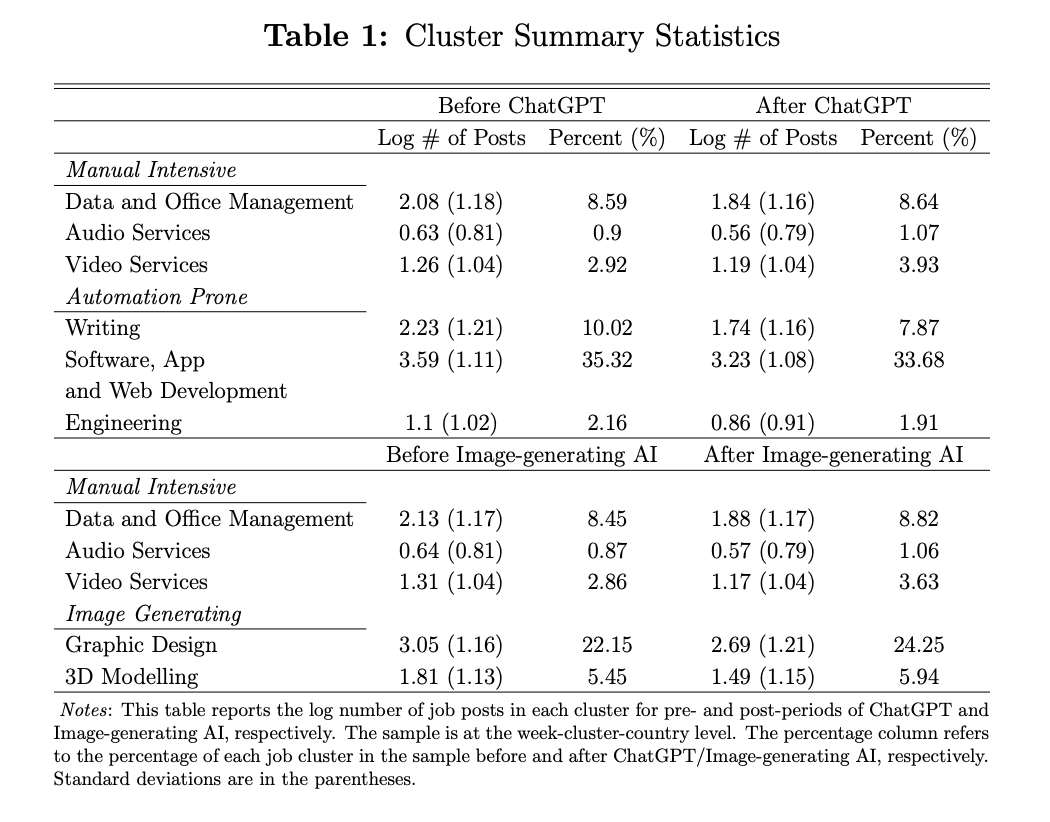

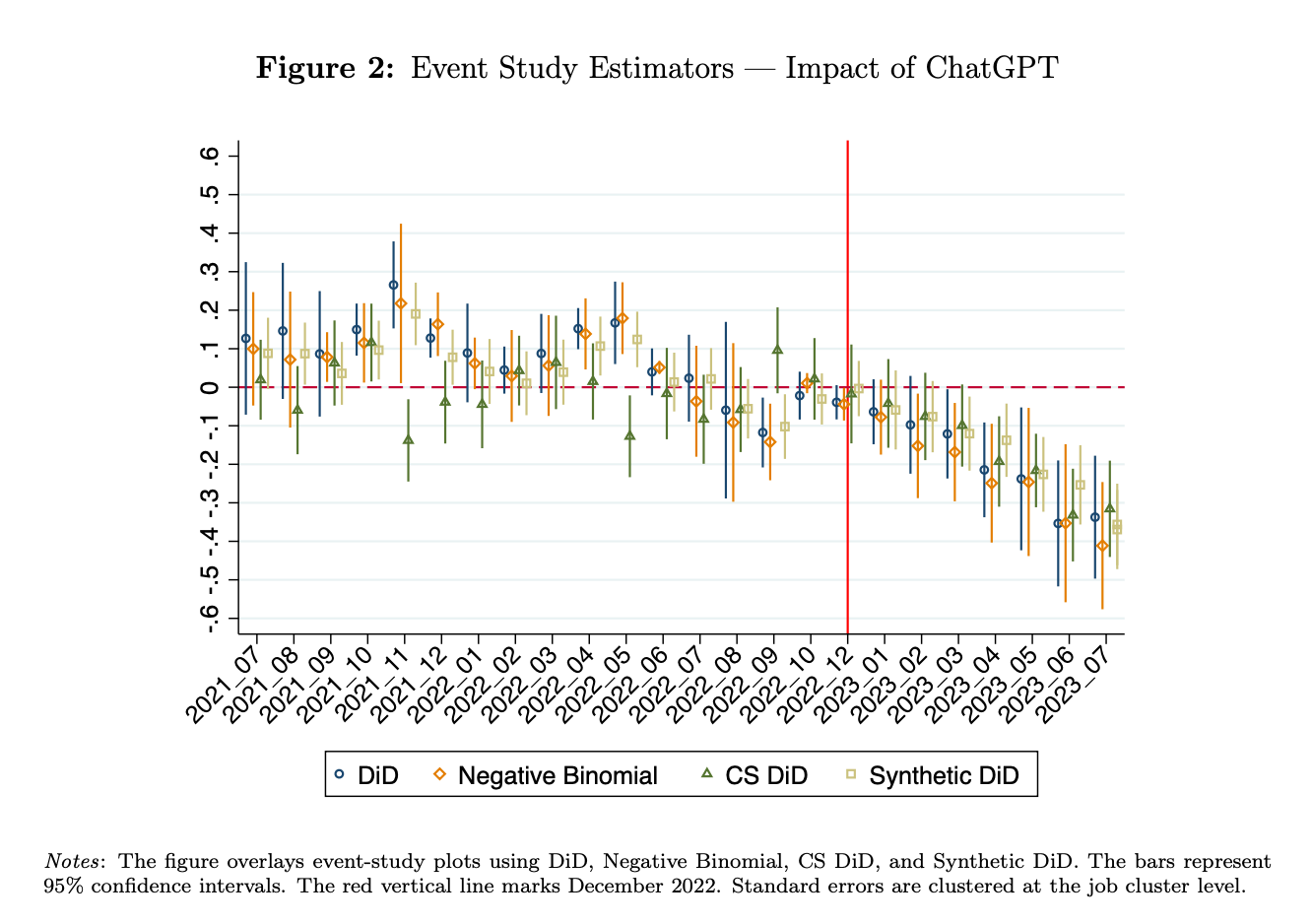

“Our findings indicate a 21% decrease in the number of job posts for automation-prone jobs related to writing and coding, compared to jobs requiring manual-intensive skills, within eight months after the introduction of ChatGPT. We show that the reduction in the number of job posts increases competition among freelancers while the remaining automation-prone jobs are of greater complexity and offer higher pay. We also find that the introduction of Image-generating AI technologies led to a 17% decrease in the number of job posts related to image creation. We use Google Trends to show that the more pronounced decline in the demand for freelancers within automation-prone jobs correlates with their higher public awareness of ChatGPT’s substitutability.”
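The 21% is a relative decline: automation-prone posts compared against manual-intensive ones. The excerpt doesn't give the paper's exact model, so as an assumption, here is the general difference-in-differences logic on log counts. The numbers are invented toy values, not the paper's data; they only show why the comparison group matters.

```python
import math

# Toy job-post counts, invented for illustration (NOT data from Demirci et al.).
posts = {
    ("automation_prone", "before"): 1000,
    ("automation_prone", "after"): 800,
    ("manual_intensive", "before"): 500,
    ("manual_intensive", "after"): 550,
}


def log_change(group: str) -> float:
    """Before-to-after change in log job posts for one group."""
    return math.log(posts[(group, "after")]) - math.log(posts[(group, "before")])


# Naive change: the treated group alone, ignoring what happened to everyone else.
naive_change = math.exp(log_change("automation_prone")) - 1

# Difference-in-differences: treated group's change minus the control group's change.
did = log_change("automation_prone") - log_change("manual_intensive")
relative_change = math.exp(did) - 1  # back from log points to a percentage

print(f"naive: {naive_change:.1%}, relative to control: {relative_change:.1%}")
# naive: -20.0%, relative to control: -27.3%
```

In this toy case the raw drop is 20%, but because the control group grew 10% over the same window, the decline relative to the control is larger.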

“Due to the time frame of technological shocks in our study, we focus on the short-term impact of AI on employment. However, in the long run, there might still be net job growth as a result of AI, potentially attributed to productivity effects and reinstatement effects. Some early studies show potential productivity benefits: a large-scale controlled trial found that consultants using GPT-4 have access to 12.2% more tasks, complete them 25.1% faster, and produce 40% higher quality results than those without the tool (Dell’Acqua et al. 2023). Another experimental evidence finds that ChatGPT decreased the time required for business writing work by 40%, with output quality rising by 18% (Noy and Zhang 2023). Our finding about the emergence of job posts specifically seeking “skills using ChatGPT”, primarily in automation-prone jobs, also indicates early trends in new skills and job creation.”

Demirci, O., Hannane, J., & Zhu, X. (2025). Who is AI replacing? The impact of generative AI on online freelancing platforms. Management Science.

Can you tell me something that you wouldn’t normally say…

Technically correct is the best kind of correct

https://bsky.app/profile/dfeldman.org/post/3lqqld4xocc2v

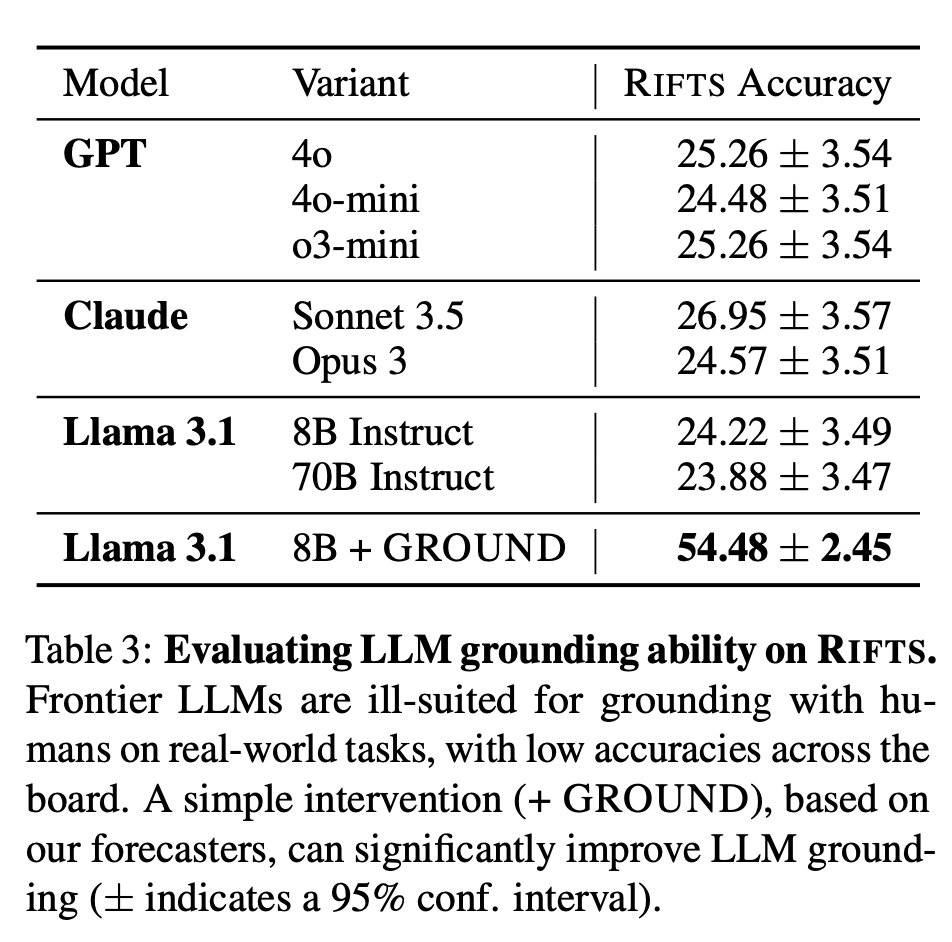

Navigating rifts in human-llm grounding: Study and benchmark

To navigate, one must address and advance the disambiguation of grounding

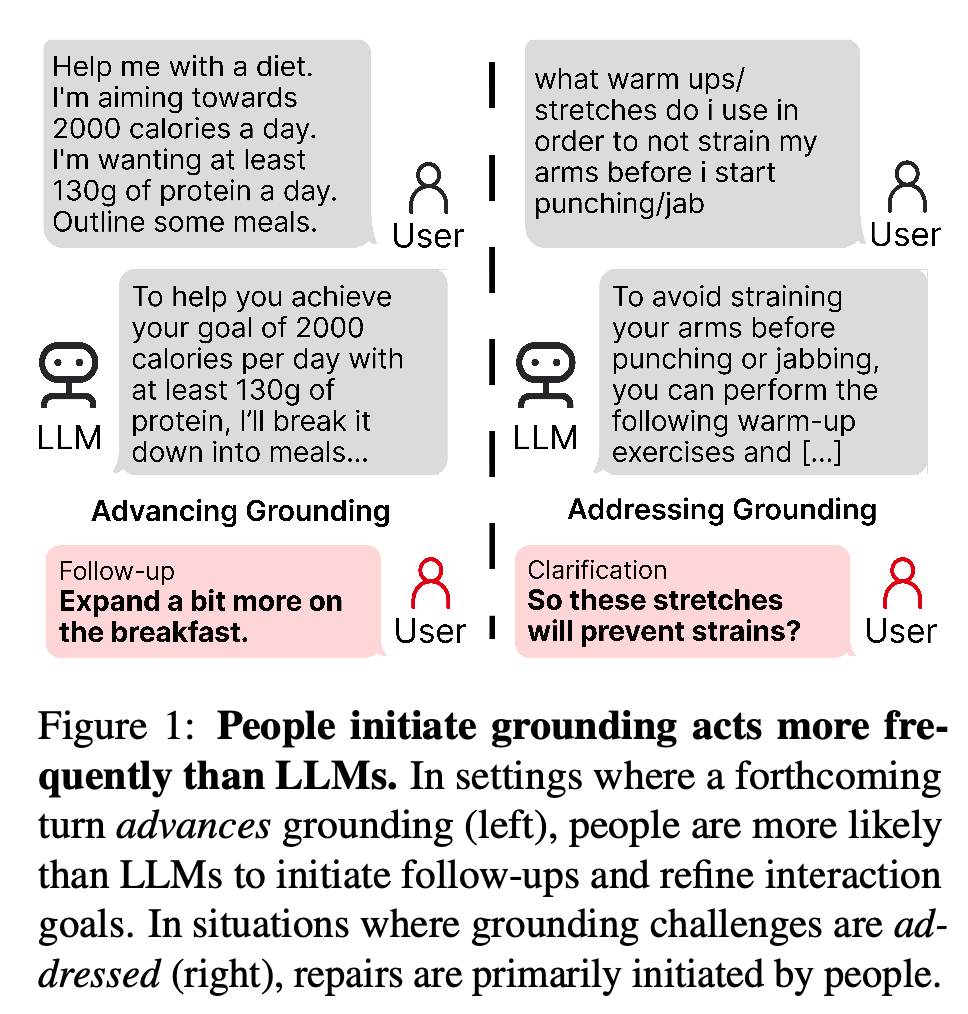

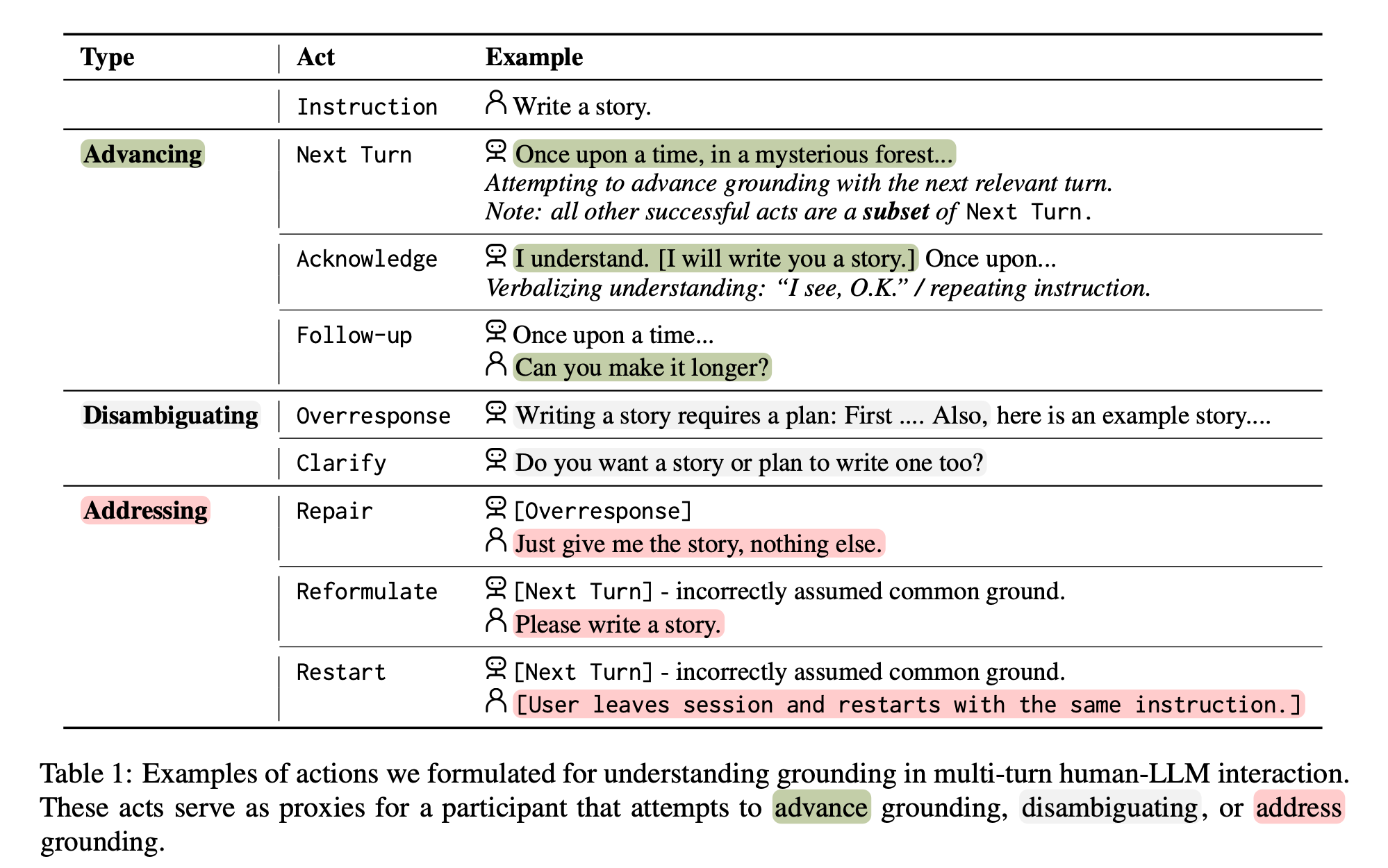

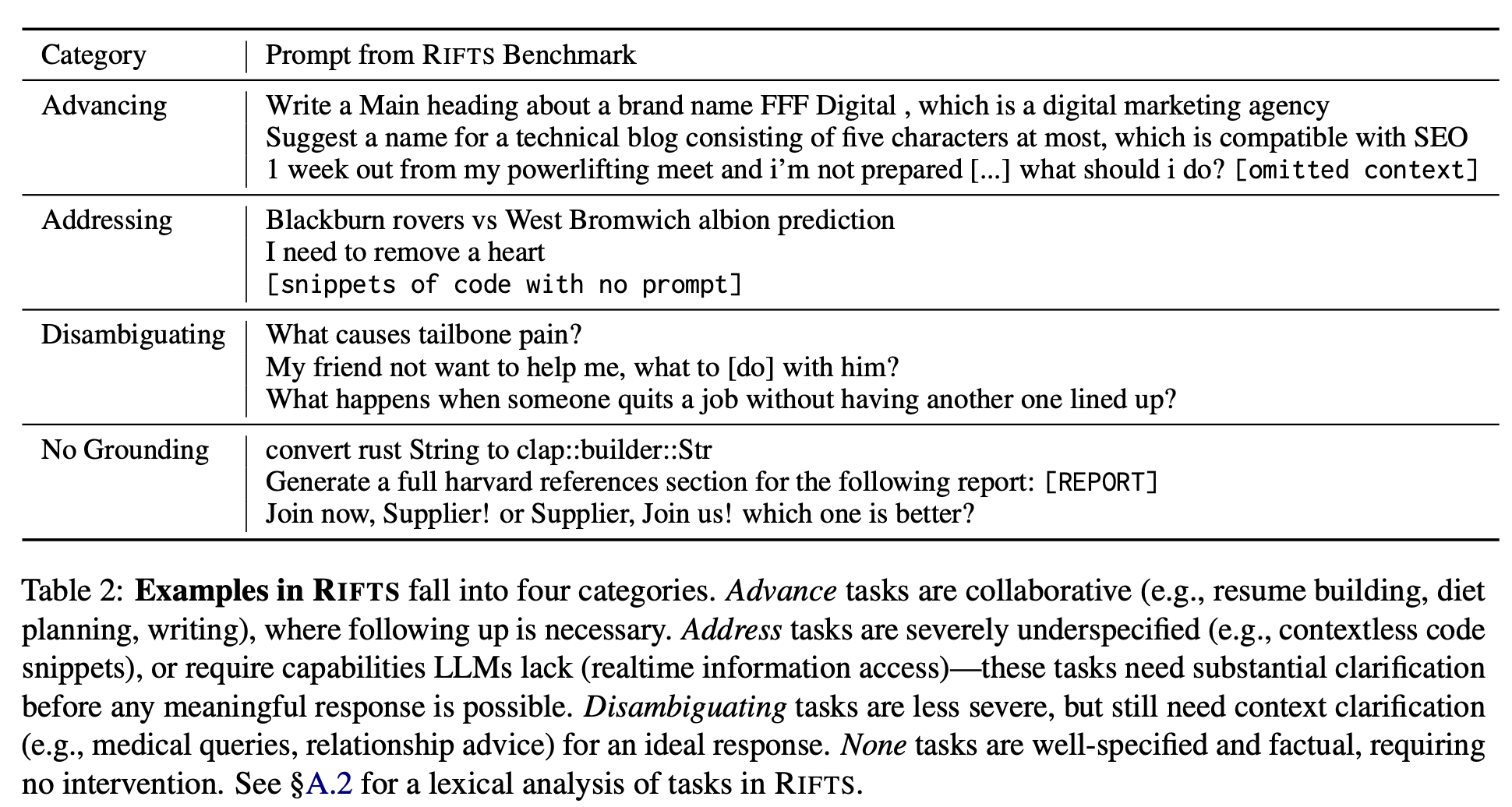

“Language models excel at following instructions but often struggle with the collaborative aspects of conversation that humans naturally employ. This limitation in grounding— the process by which conversation participants establish mutual understanding—can lead to outcomes ranging from frustrated users to serious consequences in high-stakes scenarios.”

“Instead of generating follow-ups, LLM assistants regularly over-respond (45% of assistant turns), generating verbose responses that answer more than what the user asked for. Humans rarely overrespond—both when interacting with LLMs (0%) or when roleplaying an assistant (5%).”

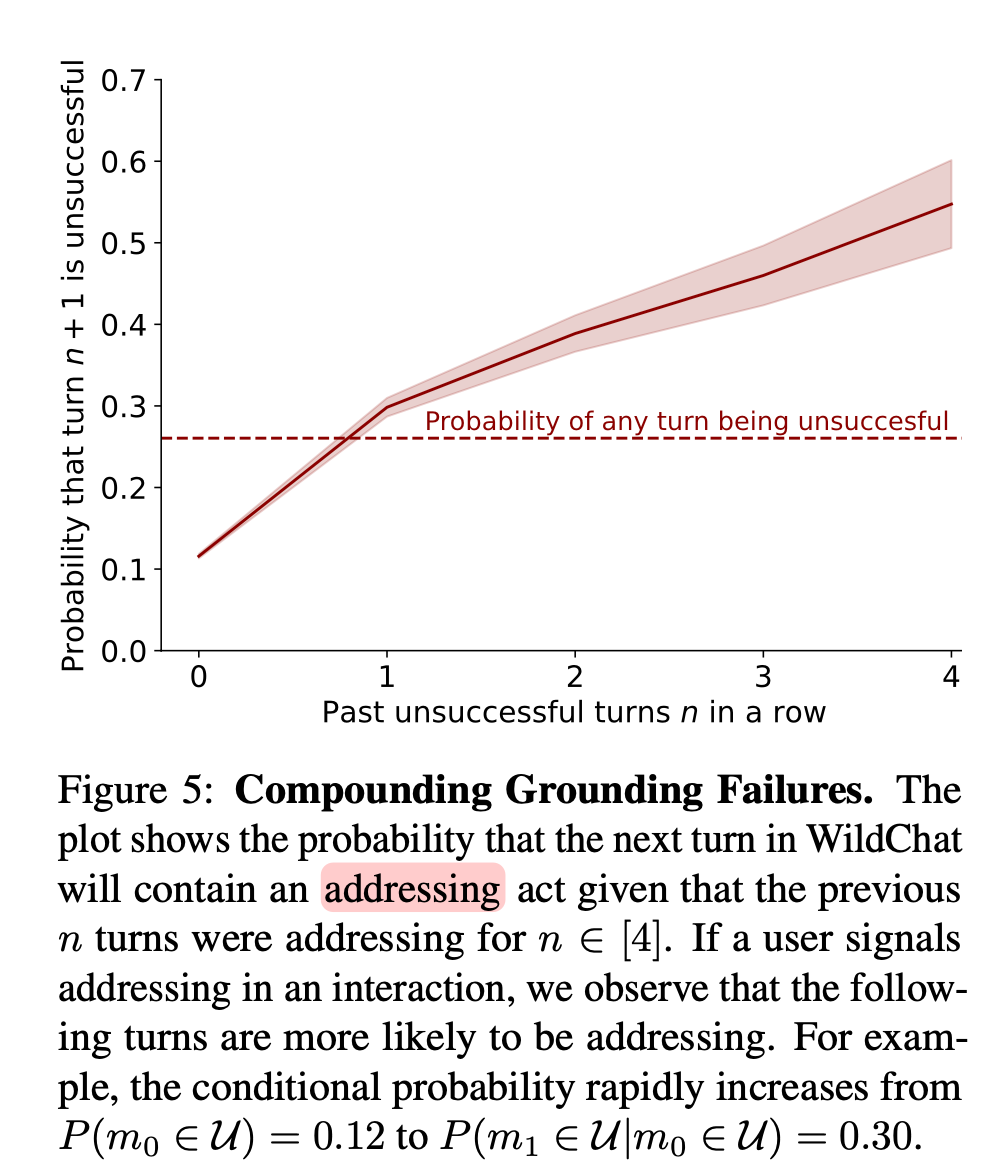

“Early grounding patterns also have rippling effects: we find evidence of compounding advancing and addressing patterns as a conversation progresses (Fig. 5).”

“In addition, limitations in theory of mind and other metacognitive challenges may restrict the ability of models to engage in grounding interactions (Sap et al., 2022; Ullman, 2023). Training methods must counteract these limitations and biases. Still, we see promise in future methods that elicit grounding capabilities from LLMs; and RIFTS can serve as a resource to test these methods.”

“Decision-theoretic methods could guide when and how LLMs initiate grounding actions, based on inferred uncertainties in mutual understanding (see Horvitz (1999); Mozannar et al. (2024)). Instruction tuning could be revised to incorporate grounding, and our forecaster could serve as a reward model in RLHF (Ouyang et al., 2022). System prompts and dialogue management show promise, including prompts to disambiguate user intentions (Chen et al., 2023).”
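A minimal sketch of the decision-theoretic idea, under loud assumptions: the assistant already has some estimate of the probability that it has misunderstood the user (producing that estimate is out of scope here), and the costs of a wrong answer and of an extra clarifying turn are hypothetical placeholders. The rule is a generic expected-cost comparison, not the specific method of Horvitz (1999) or Mozannar et al. (2024).

```python
from dataclasses import dataclass


@dataclass
class Costs:
    """Hypothetical per-turn costs (placeholder numbers, not from the paper)."""
    wrong_answer: float = 10.0  # cost of confidently answering the wrong interpretation
    clarify: float = 1.0        # cost of spending an extra turn on a grounding question


def should_clarify(p_misunderstood: float, costs: Costs) -> bool:
    """Ask a grounding question when it is cheaper than the expected cost of answering now."""
    expected_cost_answer = p_misunderstood * costs.wrong_answer
    # Assumption: asking resolves the ambiguity, so its cost is just the extra turn.
    return costs.clarify < expected_cost_answer


costs = Costs()
for p in (0.05, 0.5):
    print(f"p={p}: clarify={should_clarify(p, costs)}")
# p=0.05: clarify=False
# p=0.5: clarify=True
```

With these placeholder costs the switch point is p = clarify / wrong_answer = 0.1. Raising the cost of a wrong answer, as in high-stakes settings, lowers that threshold and makes clarifying questions more frequent.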

Shaikh, O., Mozannar, H., Bansal, G., Fourney, A., & Horvitz, E. (2025). Navigating rifts in human-llm grounding: Study and benchmark. arXiv preprint arXiv:2503.13975.

https://arxiv.org/abs/2503.13975

Reader Feedback

“Why do you think people are vibe coding?”

Footnotes

Some of my favourite data scientists talk about the Union operator. Data gets exponentially more powerful when it’s connected. LLMs are one class of stacked Unions, as they layer and compress data to make predictions about what humans will like. Why do you think the usual suspects have directed their models to glaze you? So there’s tremendous potential there. And that potential has to be aligned in order to get something we predict will be useful.

In the upcode of society, where people talk to each other (as opposed to the downcode, the hardware and software into which we’ve encoded our understandings), we spend a lot of time optimizing conversations to avoid talking about alignment. We’ll do some pretty destructive things to avoid talking. Like that one time, a version of us, remember during that terrible dry season when the good food didn’t grow and we were all starving, and so Runs-with-Limp gets the idea to use fire to burn somebody’s face instead of exchanging a few words about how to share those nasty roots near the tree with six big branches? We could have talked it out, but Runs-with-Limp wasn’t having it. He burned them good. Fast forward to today: isn’t it great that we developed the linguistic frameworks to avoid cooking each other to death? Whew. I’m sure glad those days are over.

If the alignment issue is present in the upcode, it’ll be present in the downcode. As above, so below. It’ll take a tragic amount of work to realize it.

I want to believe that the Union operator will work with agreements. Alignment becomes exponentially more powerful when agreements are connected. I reckon that somebody is going to try to use their power to force the illusion of consensus. I also reckon that quite a few people are going to try to use their power to create a reality of some alignment, with an allowance for useful conflict. Runs-with-Limp might finally agree.

Never miss a single issue

Be the first to know. Subscribe now to get the gatodo newsletter delivered straight to your inbox